I am having an issue with a calculation in one of my Teradata queries. I am multiplying two numbers by each other but i am getting a «Numeric overflow occurred during computation.» error when running the query. I ran a type on both fields and they are DECIMAL(18,15) and DECIMAL(18,9). I tried casting them both to DECIMAL(18,18) when i do the division but its still throwing errors. Here is the calculation. UNITS is the 18,15 and PRICE is the 18,9. Can anyone please give me any tips on how to resolve this?

cast(UNITS as DECIMAL(18,18))* cast(PRICE as DECIMAL(18,18)) as NEW_CALC

Thanks,

Craig

asked May 13, 2015 at 16:50

You use wrong datatypes, a DECIMAL(18,15) means 18 digits, 15 of them fractional, so the maximum value is 999.999999999999999.

And when you multiply two decimals, the number of fractional digits adds up, NEW_CALC results in 38 fractional digits. Do a TYPE(cast(UNITS as DECIMAL(18,18))* cast(PRICE as DECIMAL(18,18))).

This will work:

cast(UNITS as DECIMAL(38,15))* PRICE

But you better change the column’s datatype to something like (18,2) or (18,4), I don’t think anyone uses more than 4 digits for prices.

answered May 13, 2015 at 17:06

dnoethdnoeth

59.4k4 gold badges38 silver badges56 bronze badges

1

Numeric overflow in Teradata occurs while handling numeric values in computation or transformation. Numeric overflow occurs when you load value which is bigger than the range for numeric datatype. You can solve the error by casting the column to bigger datatype. Maximum possible value is decimal(38,0).

Topics Covered

Numeric overflow occurs because of 3 common reasons:

- When you load a value which is more than the permissible range of the column datatype

- When you load a value from bigger data type column into smaller data type column

- When you aggregate column value and it exceeds the possible maximum value of column datatype

Let’s dive deep into the problem statement and I will make sure that you know everything about the error which includes cause, reason and of course solution to numeric overflow error.

In Teradata, numeric values are

- BYTEINT

- SMALLINT

- INTEGER

- BIGINT

- DECIMAL/NUMERIC

- FLOAT/REAL/DOUBLE PRECISION

So whenever you get this error I want you to think about these columns and identify the columns used in query which are defined as above mentioned data type.

Important point to understand here is the storage space & permissible range of values for each column defined in the above table.

We will continue our discussion on this numeric overflow computation with an example now. So let’s create a table with some sample columns of number datatype and run some queries to reproduce the error and see the solution.

create volatile table chk_num_comp ( col_byteint byteint, col_smallint smallint, col_integer integer, col_bigint bigint ) on commit preserve rows;

| DataType | Storage (BYTE) | Range |

|---|---|---|

| BYTEINT | 1 | -128 to 127 |

| SMALLINT | 2 | -32768 to 32767 |

| INTEGER | 4 | -2147483648 to 2147483647 |

| BIGINT | 8 | -9223372036854775808 to 9223372036854775807 |

It is very important to understand the allowed value or range for any numeric datatype especially if you are fixing numeric computation error. Now you cannot remember the range all the time but you can quickly calculate it if required. For example BYTEINT occupies 1 BYTE i.e. 8 bits. So the range should be 2^8 ie. 256. But in Teradata, unlike some other RDBMS all the numeric datatypes are signed by default and you cannot change it. So the number should support negative values along with positive value. Hence the range becomes 2^7 for negative values & 2^7 for positive values. To calculate it using query , you can try below query:

select power(2,7)*-1 as min_value, power(2,7)-1 as max_value;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

min_value max_value

------------------------------ ------------------------------

-128 127

Min value has multiplication with -1 to get negative value in output. For Max value we have subtracted 1 to consider 0 as the value too. Similarly for other data type also you can calculate the permissible range.

select power(2,15)*-1 as min_value, power(2,15)-1 as max_value;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

min_value max_value

------------------------------ ------------------------------

-32768 32767

BTEQ -- Enter your SQL request or BTEQ command:

select power(2,31)*-1 as min_value, power(2,31)-1 as max_value;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

min_value max_value

------------------------------ ------------------------------

-2147483648 2147483647

BTEQ -- Enter your SQL request or BTEQ command:

select power(2,63)*-1 as min_value, power(2,63)-1 as max_value;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

min_value max_value

------------------------------ ------------------------------

-9223372036854776000 9223372036854775999

Now the BIGINT range shown above is not right and seems like some rounding issue with the output and correct value for BIGINT is -9223372036854775808 to 9223372036854775807

Let’s load a row into the table with maximum value for all the 4 datatype.

insert into chk_num_comp VALUES (127,32767,2147483647,9223372036854775807);

*** Insert completed. One row added.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

SELECT * FROM chk_num_comp;

*** Query completed. One row found. 4 columns returned.

*** Total elapsed time was 1 second.

col_byteint col_smallint col_integer col_bigint

----------- ------------ ----------- --------------------

127 32767 2147483647 9223372036854775807

Whenever you will try to load a value which is more than permissible range mentioned in above table you will get some error. Now let’s see the possible error cases we can encounter.

Case 1: Load the value more than permissible range for datatype

insert into chk_num_comp VALUES (128,32767,2147483647,9223372036854775807);

*** Failure 3520 A constant value in a query is not valid for column col_byteint.

Statement# 1, Info =0

*** Total elapsed time was 1 second.

In the above example, we tried to insert «128» for byteint which gave the error. So if you don’t know the range for BYTEINT this error could be confusing because 128 is a valid number but not in range for BYTEINT column.

How to Fix not valid constant value in teradata ?

If the source value is correct, then change the datatype of the column to accommodate bigger values accordingly.

Case 2: Load the value from bigger number datatype to smaller number datatype

In this example, we will try to load byteint column from a bigint column and see what will happen.

insert into chk_num_comp (col_byteint) SELECT col_bigint FROM chk_num_comp;

*** Failure 2617 Overflow occurred computing an expression involving chk_num_comp.col_bigint

Statement# 1, Info =0

*** Total elapsed time was 1 second.

We got the overflow error. But does this mean we will always get the error when we will load a smaller number datatype from a bigger number datatype? That’s not true. If the value in the bigger column is in permissible range then smaller datatype shall be able to store it. Let’s see an example below in which we will delete the table and load a dummy row in it.

delete chk_num_comp; *** Delete completed. One row removed. *** Total elapsed time was 1 second. BTEQ -- Enter your SQL request or BTEQ command: insert into chk_num_comp(1,2,3,4); *** Insert completed. One row added. *** Total elapsed time was 1 second. BTEQ -- Enter your SQL request or BTEQ command: insert into chk_num_comp (col_byteint) SELECT col_bigint FROM chk_num_comp; *** Insert completed. One row added. *** Total elapsed time was 1 second.

So you can see the value in BIGINT column was «4» which is in permissible range for BYTEINT column. Hence it was able to load it successfully.

How to Fix Overflow occurred computing an expression in Teradata ?

It is ok to store values coming from bigger datatype into smaller datatype as long as the value is in permissible range. It is a good tuning technique which optimised storage space. However if you do expect bigger values then you must change the column datatype accordingly.

Case 3: Numeric overflow during Aggregation

In this we will see some of the common aggregate functions like count, sum, average and how we can handle this error during computation. Let’s delete and load dummy rows into the table for this example.

delete chk_num_comp; *** Delete completed. One row removed. *** Total elapsed time was 1 second. BTEQ -- Enter your SQL request or BTEQ command: insert into chk_num_comp(1,1,1,1); *** Insert completed. One row added. *** Total elapsed time was 1 second. BTEQ -- Enter your SQL request or BTEQ command: insert into chk_num_comp VALUES (127,32767,2147483647,9223372036854775807); *** Insert completed. One row added. *** Total elapsed time was 1 second.

Let’s start with the sum operation first. So for byteint column we have 2 rows with value 127 & 1. If we do sum of it then the result is 128 which is more than permissible range. So will it throw the error ? Let’s see with below example.

SELECT sum(col_byteint) AS sum_col_byteint FROM chk_num_comp;

*** Query completed. One row found. One column returned.

*** Total elapsed time was 1 second.

sum_col_byteint

---------------

128

I don’t see any error above. Why ? I will explain it but let’s run the SUM function for SMALLINT & INTEGER column first before we find the reason behind success & failure during SUM operation.

SELECT sum(col_smallint) AS sum_col_smallint FROM chk_num_comp;

*** Query completed. One row found. One column returned.

*** Total elapsed time was 1 second.

sum_col_smallint

----------------

32768

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(col_integer) AS sum_col_integer FROM chk_num_comp;

*** Failure 2616 Numeric overflow occurred during computation.

Statement# 1, Info =0

*** Total elapsed time was 1 second.

So we can see above that for SMALLINT column , we got the output. But for INTEGER we got the numeric overflow error. But why ? And the answer is in the return type of SUM function which is INTEGER.

So when the sum function added both rows with value 2147483647 + 1 , the output was more than permissible range of INTEGER column. Hence the numeric overflow error. So what about BIGINT ? For BIGINT, the return type of SUM function is BIGINT and if the calculation surpasses the BIGINT range then the same error will come for BIGINT column as well. Let’s see the example below for BIGINT.

SELECT sum(col_bigint) AS sum_col_bigint FROM chk_num_comp;

*** Failure 2616 Numeric overflow occurred during computation.

Statement# 1, Info =0

*** Total elapsed time was 1 second.

To check the return type of the SUM function, we can run below query and confirm it.

delete chk_num_comp;

*** Delete completed. 2 rows removed.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

insert into chk_num_comp(1,1,1,1);

*** Insert completed. One row added.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(col_byteint) AS sum_col_byteint , TYPE(sum_col_byteint) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_byteint Type(sum_col_byteint)

--------------- ---------------------------------------

1 INTEGER

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(col_smallint) AS sum_col_smallint , TYPE(sum_col_smallint) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_smallint Type(sum_col_smallint)

---------------- ---------------------------------------

1 INTEGER

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(col_integer) AS sum_col_integer , TYPE(sum_col_integer) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_integer Type(sum_col_integer)

--------------- ---------------------------------------

1 INTEGER

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(col_bigint) AS sum_col_bigint , TYPE(sum_col_bigint) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_bigint Type(sum_col_bigint)

-------------------- ---------------------------------------

1 BIGINT

How to fix Numeric Overflow occurred during computation in Teradata ?

You may want to explicit cast the datatype to bigger datatype while applying SUM function in order to avoid the numeric overflow error during computation. Let’s see the example below for BIGINT column.

delete chk_num_comp;

*** Delete completed. 2 rows removed.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

insert into chk_num_comp VALUES (1,1,1,1);

*** Insert completed. One row added.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

insert into chk_num_comp VALUES (127,32767,2147483647,9223372036854775807);

*** Insert completed. One row added.

*** Total elapsed time was 1 second.

BTEQ -- Enter your SQL request or BTEQ command:

select col_bigint from chk_num_comp;

*** Query completed. 2 rows found. One column returned.

*** Total elapsed time was 1 second.

col_bigint

--------------------

1

9223372036854775807

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(CAST(col_bigint AS decimal(38,0))) AS sum_col_integer , TYPE(sum_col_integer) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_integer Type(sum_col_integer)

---------------------------------------- ---------------------------------------

9223372036854775808. DECIMAL(38,0)

BTEQ -- Enter your SQL request or BTEQ command:

SELECT sum(CAST(col_bigint AS decimal(38,0) FORMAT 'Z(38)9')) AS sum_col_integer , TYPE(sum_col_integer) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

sum_col_integer Type(sum_col_integer)

--------------------------------------- ---------------------------------------

9223372036854775808 DECIMAL(38,0)

In the last query , I have applied FORMAT to ignore the decimal point «.» which was coming in the output. So in this manner you can fix the error related with numeric overflow in SUM & AVERAGE aggregate function.

For COUNT you can just cast the count function to overcome this error in place of column. See the example below:

--wrong way as the output is still INTEGER type.

SELECT count(CAST(col_integer AS bigint)) AS count_col_integer , TYPE(count_col_integer) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

count_col_integer Type(count_col_integer)

----------------- ---------------------------------------

2 INTEGER

BTEQ -- Enter your SQL request or BTEQ command:

--correct way as the output is now in BIGINT type.

SELECT CAST(count(col_integer) AS bigint) AS count_col_integer , TYPE(count_col_integer) FROM chk_num_comp;

*** Query completed. One row found. 2 columns returned.

*** Total elapsed time was 1 second.

count_col_integer Type(count_col_integer)

-------------------- ---------------------------------------

2 BIGINT

the conversion of the varchar value overflowed an int column

If you are storing numeric values in varchar column then you must be very careful while converting it into numeric data type like integer. If the value is more than the range allowed for the numeric column then it will result in overflow error.

Very common example is the mobile number. In many tables I have seen mobile number is stored in varchar column. However if it is converted to integer it may throw overflow error. Integer max value is 2147483647 and mobile number are generally 10 digit numbers. So be careful while converting varchar value into int column to avoid overflow error.

That’s it guys. This is all I planned to cover in this post. Now you know the reason behind the numeric overflow error and the solution to it as well. Let me know if you see any more case in the comment box below and I will be happy to cover it too.

If you prefer to watch video then check the video below:

Contents

- 1 Numeric Datatypes in Teradata

- 1.1 Numeric overflow error (code = 2616) in Teradata

- 1.2 Resolution: Up size the decimal column in Teradata

Numeric Datatypes in Teradata

Teradata provides the multiple numeric data types for the columns and it is listed below

- Byte Int – Represents a signed binary integer value in the range -128 to 127.

- Small Int – Represents a signed binary integer value in the range -32768 to 32767.

- Integer – Represents a signed, binary integer value from -2,147,483,648 to 2,147,483,647.

- Big Int – Represents a signed, binary integer value from -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807.

- Float/Double – Represent values in sign/magnitude form ranging from 2.226 x 10-308 to 1.797 x 10308.

- Number – Represents a numeric value with optional precision(range is from 1 to 38) and scale limitations.

- Decimal – Represents a decimal number of n digits( range is from 1 through 38), with m(the number of fractional digits) of those n digits to the right of the decimal point.

If we try to insert the larger values than the allowed/specified size of the numeric column, Teradata will throw the numeric overflow error. Lets see the numeric overflow error for Decimal column with example.

Example : Target table ==> Banking_DB.Customer

|

CREATE SET TABLE Banking_DB.CUSTOMER ,FALLBACK , NO BEFORE JOURNAL, NO AFTER JOURNAL, CHECKSUM = DEFAULT, DEFAULT MERGEBLOCKRATIO, MAP = TD_MAP1 ( Cust_Id INTEGER, Cust_Name CHAR(20) CHARACTER SET LATIN NOT CASESPECIFIC, Join_Date DATE FORMAT ‘YYYY/MM/DD’, Mobile_Number INTEGER, Login_Count DECIMAL(8,0) ) UNIQUE PRIMARY INDEX ( Cust_Id ); |

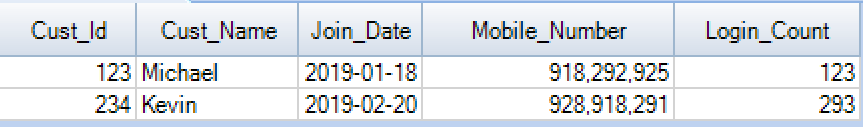

Here the customer table is created in the Banking database. The table contains a column as Login_count that specified to Decimal(8,0). If we try to insert the value more than 8 digits for Login_count, It will throw the Numeric overflow error.Initially the table contains the two records as below.

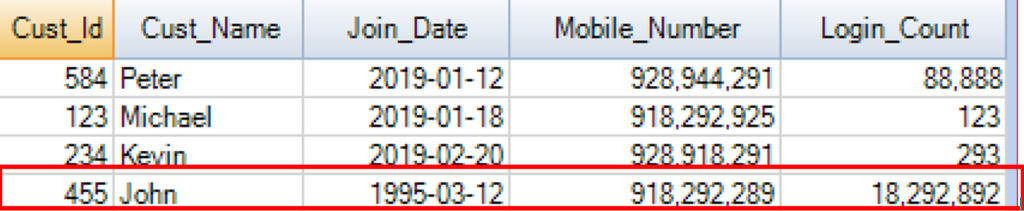

Example : Source table ==> Banking_DB.Customer_old

The source table Customer_old contains the old and new customer details with login count. The structure and the values of the table has mentioned below.

|

CREATE MULTISET TABLE Banking_DB.customer_old ,FALLBACK , NO BEFORE JOURNAL, NO AFTER JOURNAL, CHECKSUM = DEFAULT, DEFAULT MERGEBLOCKRATIO, MAP = TD_MAP1 ( Cust_Id INTEGER, Cust_Name CHAR(20) CHARACTER SET LATIN NOT CASESPECIFIC, Join_Date DATE FORMAT ‘YYYY/MM/DD’, Mobile_Number INTEGER, Login_Count DECIMAL(8,0)) NO PRIMARY INDEX ; |

The insert query is trying to select and insert the record with the login count as 18292892 + 19292929982 for the customer id=455. Since the login count value is exceeds the 8 digits during computation in the SELECT CASE statement, it is throwing the numeric overflow error as below.

|

INSERT INTO BANKING_DB.CUSTOMER SELECT CUST_ID, CUST_NAME, JOIN_DATE, MOBILE_NUMBER, CASE WHEN CUST_NAME=‘John’ THEN LOGIN_COUNT+19292929982 — adding some values to the login count column ELSE 0 END AS LOGIN_COUNT FROM BANKING_DB.CUSTOMER_OLD WHERE CUST_ID = 455; |

Output of the insert statement

*** INSERT Failed 2616 Numeric overflow occurred during computation. ***

Resolution: Up size the decimal column in Teradata

We can up size the Login_count column from Decimal(8,0) to Decimal(38,0) in the Target table that will resolve the numeric overflow error during computation.Lets up size the column in Customer table and run the insert query again

|

DROP TABLE BANKING_DB.CUSTOMER; CREATE SET TABLE PP_SCRATCH.CUSTOMER ,FALLBACK , NO BEFORE JOURNAL, NO AFTER JOURNAL, CHECKSUM = DEFAULT, DEFAULT MERGEBLOCKRATIO, MAP = TD_MAP1 ( Cust_Id INTEGER, Cust_Name CHAR(20) CHARACTER SET LATIN NOT CASESPECIFIC, Join_Date DATE FORMAT ‘YYYY/MM/DD’, Mobile_Number INTEGER, Login_Count DECIMAL(38,0)) — Upsized the column to Decimal(38,0) UNIQUE PRIMARY INDEX ( Cust_Id ); |

The record for cust_id=455 has inserted into the customer table and it contains the Login_count value with more than 8 digits.

Recommended Articles

- Performance tuning using Collect Statistics in Teradata table with examples

Loading Application…

Tracking Consent

PDFs

Site Feedback

Help

In Teradata normally we come across this error while selecting column value which is too large to be accommodated in requested datatype.

In below example we tried to count a table which has more than 6 billion records, so certainly the count would return result in integer and Standard max value for integer data type is 2147483647. so whenever selected value exceeds this range it will throw this error.

select count(*) from bm_tb.cust_priscription;

SELECT Failed. 2616: Numeric overflow occurred during computation.

In this case to get the proper result we need to cast the returned integer value to float or decimal.

select count(*) (float) from bm_tb.cust_priscription;

6,806,668,046.00

Or

select count(*) (DECIMAL(12,0)) from bm_tb.cust_priscription;

6,806,668,046

You can try this demo to understand integer limit in SQLA:

SELECT CAST( 2147483647 AS INT)

Result: 2147483647

Then try to select integer value greater than the integer limit.

SELECT CAST( 2147483648 AS INT)

Result: «SELECT Failed. 2616: Numeric overflow occurred during computation.»