To protect users and Google systems from abuse, applications that use OAuth and Google Identity have certain quota restrictions based on the risk level of the OAuth scopes an app uses. These limits include the following:

- A new user authorization rate limit that limits how quickly your application can get new users.

- A total new user cap. To learn more, see the Unverified apps page.

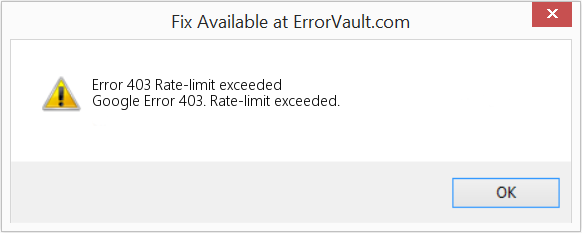

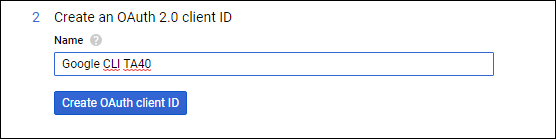

When an application exceeds the rate limit, Error 403: rate_limit_exceeded is displayed to users, like in the screenshot below:

Application developers

As a developer of an application, you can view the current user authorization grant rate (or token grant rate) in the Google API Console OAuth consent screen page before your application displays this error.

If the Google API Console shows that your application is about to reach the rate limit, or if users are already seeing this error, take action to improve your application’s user experience. You can request a rate limit quota increase for the application. Expect a response in 5 business days.

OAuth rate limit quotas

| | Applicable apps | Quota | Appeal |
|---|---|---|---|
| New user authorization rate limit | Apps that request access to user data, including verified apps | Dependent on application history, developer reputation, and riskiness | Request a rate limit quota increase |

To learn more about the total new user cap, see the Unverified apps page.


Likely something to do with pytorch=1.9:

- Works with python=3.7 and pytorch=1.7

    Python 3.7.10 | packaged by conda-forge | (default, Feb 19 2021, 16:07:37)
    [GCC 9.3.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.__version__
    '1.7.0'
    >>> model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet50', pretrained=True, force_reload=True)
    Downloading: "https://github.com/pytorch/vision/archive/v0.10.0.zip" to /home/ml/farleylai/.cache/torch/hub/v0.10.0.zip

- Works with python3.9 and pytorch=1.7.1 and 1.8.1.

    Python 3.9.5 (default, Jun 4 2021, 12:28:51)
    [GCC 7.5.0] :: Anaconda, Inc. on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.__version__
    '1.7.1'
    >>> model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet50', pretrained=True, force_reload=True)
    Downloading: "https://github.com/pytorch/vision/archive/v0.10.0.zip" to /home/ml/farleylai/.cache/torch/hub/v0.10.0.zip

    Python 3.9.5 (default, Jun 4 2021, 12:28:51)
    [GCC 7.5.0] :: Anaconda, Inc. on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.__version__
    '1.8.1'
    >>> model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet50', pretrained=True, force_reload=True)
    Downloading: "https://github.com/pytorch/vision/archive/v0.10.0.zip" to /home/ml/farleylai/.cache/torch/hub/v0.10.0.zip

- Failed with python=3.9 and pytorch=1.9

    Python 3.9.5 (default, Jun 4 2021, 12:28:51)
    [GCC 7.5.0] :: Anaconda, Inc. on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.__version__
    '1.9.0'
    >>> model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet50', pretrained=True, force_reload=True)
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/site-packages/torch/hub.py", line 362, in load
        repo_or_dir = _get_cache_or_reload(repo_or_dir, force_reload, verbose)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/site-packages/torch/hub.py", line 162, in _get_cache_or_reload
        _validate_not_a_forked_repo(repo_owner, repo_name, branch)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/site-packages/torch/hub.py", line 124, in _validate_not_a_forked_repo
        with urlopen(url) as r:
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 214, in urlopen
        return opener.open(url, data, timeout)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 523, in open
        response = meth(req, response)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 632, in http_response
        response = self.parent.error(
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 561, in error
        return self._call_chain(*args)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 494, in _call_chain
        result = func(*args)
      File "/home/ml/farleylai/miniconda3/envs/sinet39/lib/python3.9/urllib/request.py", line 641, in http_error_default
        raise HTTPError(req.full_url, code, msg, hdrs, fp)
    urllib.error.HTTPError: HTTP Error 403: rate limit exceeded

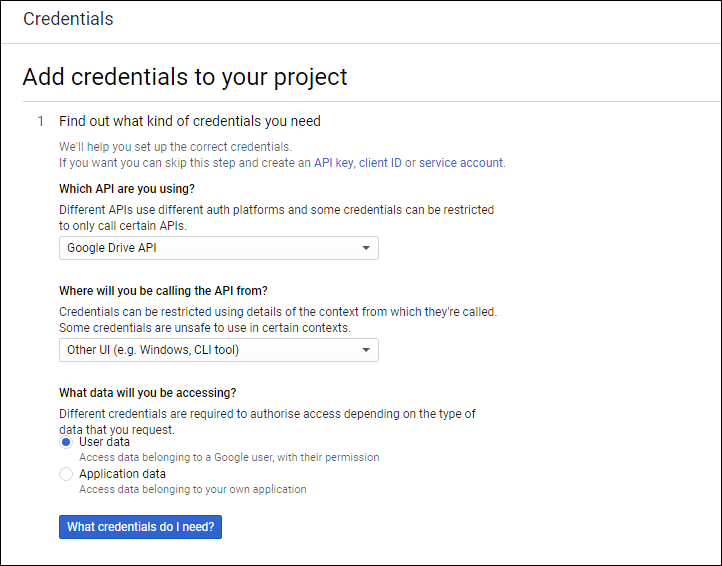

The Google Drive API returns two levels of error information:

- HTTP error codes and messages in the header.

- A JSON object in the response body with additional details that can help you determine how to handle the error.

Drive apps should catch and handle all errors that might be encountered when using the REST API. This guide provides instructions on how to resolve specific API errors.
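As a rough illustration of reading the second level of error information, the sketch below pulls the status code, the first reason, and the message out of a JSON error body. The `DriveError` type and `parse_drive_error` helper are hypothetical names introduced here, not part of any Google client library, and the sketch assumes the body follows the shape shown in the samples in this guide.

```python
import json
from typing import NamedTuple, Optional


class DriveError(NamedTuple):
    """Hypothetical container for the fields this guide tells you to inspect."""
    code: int
    reason: Optional[str]
    message: str


def parse_drive_error(body: str) -> DriveError:
    """Extract the code, the first error's reason, and the top-level message."""
    error = json.loads(body)["error"]
    details = error.get("errors") or [{}]  # Tolerate a missing or empty list.
    return DriveError(
        code=error["code"],
        reason=details[0].get("reason"),
        message=error.get("message", ""),
    )


SAMPLE_BODY = """
{"error": {"errors": [{"domain": "usageLimits",
                       "reason": "userRateLimitExceeded",
                       "message": "User Rate Limit Exceeded"}],
           "code": 403,
           "message": "User Rate Limit Exceeded"}}
"""

parsed = parse_drive_error(SAMPLE_BODY)
print(parsed.code, parsed.reason)
```

Keeping the parsing in one place means the rest of the app can branch on `code` and `reason` without touching raw JSON.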

Resolve a 400 error: Bad request

This error can result from any one of the following issues in your code:

- A required field or parameter hasn’t been provided.

- The value supplied or a combination of provided fields is invalid.

- You tried to add a duplicate parent to a Drive file.

- You tried to add a parent that would create a cycle in the directory graph.

Following is a sample JSON representation of this error:

    {
      "error": {
        "code": 400,
        "errors": [
          {
            "domain": "global",
            "location": "orderBy",
            "locationType": "parameter",
            "message": "Sorting is not supported for queries with fullText terms. Results are always in descending relevance order.",
            "reason": "badRequest"
          }
        ],
        "message": "Sorting is not supported for queries with fullText terms. Results are always in descending relevance order."
      }
    }

To fix this error, check the message field and adjust your code accordingly.

Resolve a 400 error: Invalid sharing request

This error can occur for several reasons. To determine the cause, evaluate the reason and message fields of the returned JSON. This error most commonly occurs because:

- Sharing succeeded, but the notification email was not correctly delivered.

- The Access Control List (ACL) change is not allowed for this user.

The message field indicates the actual error.

Sharing succeeded, but the notification email was not correctly delivered

Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "invalidSharingRequest",
            "message": "Bad Request. User message: \"Sorry, the items were successfully shared but emails could not be sent to email@domain.com.\""
          }
        ],
        "code": 400,
        "message": "Bad Request"
      }
    }

To fix this error, inform the user (sharer) that the notification email couldn’t be sent to the email address they want to share with. The user should ensure they have the correct email address and that it can receive email.

The ACL change is not allowed for this user

Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "invalidSharingRequest",
            "message": "Bad Request. User message: \"ACL change not allowed.\""
          }
        ],
        "code": 400,
        "message": "Bad Request"
      }
    }

To fix this error, check the sharing settings of the Google Workspace domain to which the file belongs. The settings might prohibit sharing outside of the domain, or sharing a shared drive might not be permitted.

Resolve a 401 error: Invalid credentials

A 401 error indicates the access token that you’re using is either expired or invalid. This error can also be caused by missing authorization for the requested scopes. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "authError",
            "message": "Invalid Credentials",
            "locationType": "header",
            "location": "Authorization"
          }
        ],
        "code": 401,
        "message": "Invalid Credentials"
      }
    }

To fix this error, refresh the access token using the long-lived refresh token. If this fails, direct the user through the OAuth flow, as described in API-specific authorization and authentication information.
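The refresh-then-retry step can be sketched as follows. This is a minimal illustration, not a real client: `send` and `refresh` are hypothetical callables supplied by your app (one performs the HTTP request with a given access token, the other exchanges the refresh token for a new access token).

```python
from typing import Callable, Tuple

Response = Tuple[int, str]  # (HTTP status, response body)


def call_with_refresh(
    send: Callable[[str], Response],
    access_token: str,
    refresh: Callable[[], str],
) -> Response:
    """Send a request; on a 401, refresh the access token once and retry."""
    status, body = send(access_token)
    if status == 401:
        # The token is expired or invalid: obtain a new one and try again.
        access_token = refresh()
        status, body = send(access_token)
    return status, body


# Usage with stand-in callables that simulate an expired token.
sent_tokens = []


def fake_send(token: str) -> Response:
    sent_tokens.append(token)
    return (200, "ok") if token == "fresh-token" else (401, "Invalid Credentials")


result = call_with_refresh(fake_send, "stale-token", lambda: "fresh-token")
print(result)
```

If the second attempt still returns 401, the caller would fall back to sending the user through the OAuth flow again.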

Resolve a 403 error

A 403 error occurs when a usage limit has been exceeded or the user doesn’t have the correct privileges. To determine the specific type of error, evaluate the reason field of the returned JSON. This error occurs for the following situations:

- The daily limit was exceeded.

- The user rate limit was exceeded.

- The project rate limit was exceeded.

- The sharing rate limit was exceeded.

- The user hasn’t granted your app rights to a file.

- The user doesn’t have sufficient permissions for a file.

- Your app can’t be used within the signed in user’s domain.

- Number of items in a folder was exceeded.

For information on Drive API limits, refer to Usage limits. For information on Drive folder limits, refer to Folder limits in Google Drive.
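One way to act on the reason field is to sort 403 reasons into "limit" and "permission" groups before choosing a remedy. The sketch below uses only the reason strings that appear in this guide; the grouping and the `classify_403` helper are assumptions made for illustration, not an official taxonomy.

```python
# Reason strings taken from the 403 sections of this guide.
LIMIT_REASONS = {
    "dailyLimitExceeded",
    "userRateLimitExceeded",
    "rateLimitExceeded",
    "sharingRateLimitExceeded",
    "storageQuotaExceeded",
    "numChildrenInNonRootLimitExceeded",
    "activeItemCreationLimitExceeded",
}
PERMISSION_REASONS = {
    "appNotAuthorizedToFile",
    "insufficientFilePermissions",
    "domainPolicy",
}


def classify_403(reason: str) -> str:
    """Return 'limit', 'permission', or 'unknown' for a 403 reason string."""
    if reason in LIMIT_REASONS:
        return "limit"
    if reason in PERMISSION_REASONS:
        return "permission"
    return "unknown"


print(classify_403("domainPolicy"))
print(classify_403("dailyLimitExceeded"))
```

Treating unrecognized reasons as "unknown" rather than guessing keeps the app from retrying or prompting the user inappropriately.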

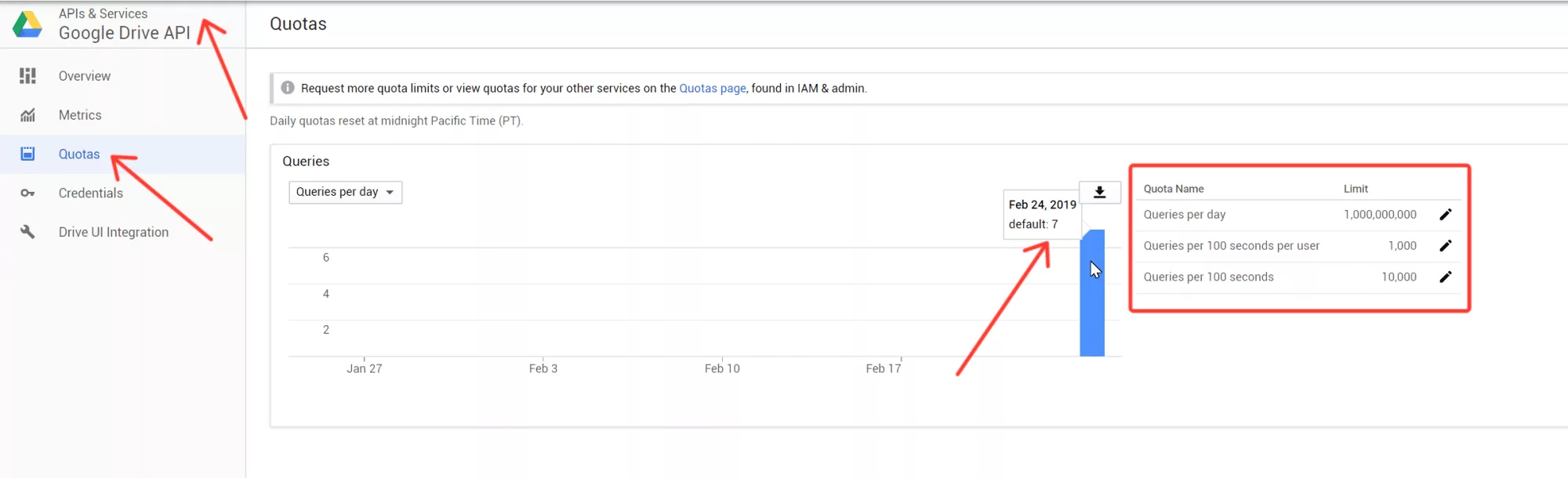

Resolve a 403 error: Daily limit exceeded

A dailyLimitExceeded error indicates the courtesy API limit for your project has been reached. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "reason": "dailyLimitExceeded",
            "message": "Daily Limit Exceeded"
          }
        ],
        "code": 403,
        "message": "Daily Limit Exceeded"
      }
    }

This error appears when the application’s owner has set a quota limit to cap usage of a particular resource. To fix this error, remove any usage caps for the "Queries per day" quota.

Resolve a 403 error: User rate limit exceeded

A userRateLimitExceeded error indicates the per-user limit has been reached. This might be a limit from the Google API Console or a limit from the Drive backend. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "reason": "userRateLimitExceeded",
            "message": "User Rate Limit Exceeded"
          }
        ],
        "code": 403,
        "message": "User Rate Limit Exceeded"
      }
    }

To fix this error, try any of the following:

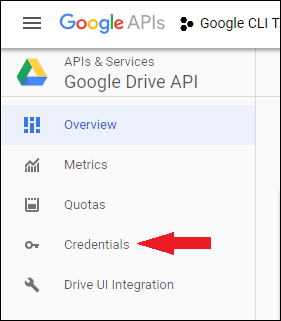

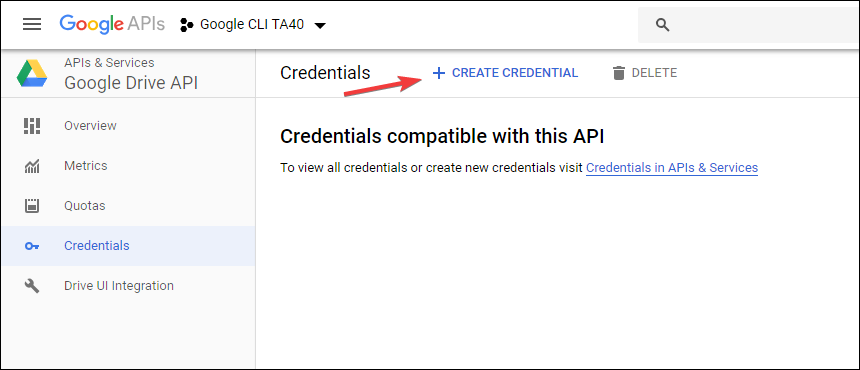

- Raise the per-user quota in the Google Cloud project. For more information, see Request a quota increase.

- If one user is making numerous requests on behalf of many users of a Google Workspace account, consider a service account with domain-wide delegation using the quotaUser parameter.

- Use exponential backoff to retry the request.

For information on Drive API limits, refer to Usage limits.
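Exponential backoff can be sketched as below. This is a minimal illustration under stated assumptions: `RetryableError` is a hypothetical exception your own request code would raise for rate-limit responses, and the delay values, retry count, and added random jitter are illustrative choices rather than values prescribed by this guide.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical marker for errors worth retrying, such as a rate limit."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 32.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, doubling the wait after each retryable failure."""
    attempt = 0
    while True:
        try:
            return operation()
        except RetryableError:
            if attempt >= max_retries:
                raise  # Give up once the retry budget is spent.
            # Wait base_delay * 2^attempt seconds (capped), plus up to 1s of jitter.
            delay = min(max_delay, base_delay * (2 ** attempt)) + random.uniform(0, 1)
            sleep(delay)
            attempt += 1
```

The `sleep` parameter exists so that tests can pass a recording function instead of actually waiting. A call might look like `retry_with_backoff(lambda: fetch_file(file_id))`, where `fetch_file` is your own function that raises `RetryableError` on a rate-limit response.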

Resolve a 403 error: Project rate limit exceeded

A rateLimitExceeded error indicates the project’s rate limit has been reached. This limit varies depending on the type of requests. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "message": "Rate Limit Exceeded",
            "reason": "rateLimitExceeded"
          }
        ],
        "code": 403,
        "message": "Rate Limit Exceeded"
      }
    }

To fix this error, try any of the following:

- Raise the per-user quota in the Google Cloud project. For more information, see Request a quota increase.

- Batch requests to make fewer API calls.

- Use exponential backoff to retry the request.
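To make the batching suggestion concrete, the sketch below splits a list of pending calls into fixed-size groups, each of which could then be sent as one batch request. The `chunked` helper is hypothetical, and the default size of 100 is an assumption; check the Drive batching documentation for the limit that applies to your requests.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def chunked(items: Sequence[T], size: int = 100) -> Iterator[List[T]]:
    """Yield consecutive groups of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


# 250 hypothetical file IDs become three batches instead of 250 separate calls.
file_ids = [f"file-{n}" for n in range(250)]
batch_sizes = [len(batch) for batch in chunked(file_ids)]
print(batch_sizes)
```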

Resolve a 403 error: Sharing rate limit exceeded

A sharingRateLimitExceeded error occurs when the user has reached a sharing limit. This error is often linked with an email limit. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "message": "Rate limit exceeded. User message: \"These item(s) could not be shared because a rate limit was exceeded: filename\"",
            "reason": "sharingRateLimitExceeded"
          }
        ],
        "code": 403,
        "message": "Rate Limit Exceeded"
      }
    }

To fix this error:

- Do not send emails when sharing large numbers of files.

- If one user is making numerous requests on behalf of many users of a Google Workspace account, consider a service account with domain-wide delegation using the quotaUser parameter.
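Suppressing the notification email might look like the sketch below, which only builds the arguments for a permissions.create call and sends nothing. It assumes Drive API v3, where permissions.create takes a `sendNotificationEmail` parameter; `build_share_request` is a hypothetical helper, and the resulting keywords are shaped the way the Python client's `service.permissions().create(...)` would accept them.

```python
from typing import Any, Dict


def build_share_request(
    file_id: str,
    email: str,
    role: str = "reader",
    notify: bool = False,
) -> Dict[str, Any]:
    """Build keyword arguments for a permissions.create call (assumed v3 shape)."""
    return {
        "fileId": file_id,
        # False skips the notification email when sharing many files.
        "sendNotificationEmail": notify,
        "body": {"type": "user", "role": role, "emailAddress": email},
    }


request_kwargs = build_share_request("FILE_ID", "user@example.com")
print(request_kwargs)
```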

Resolve a 403 error: Storage quota exceeded

A storageQuotaExceeded error occurs when the user has reached their storage limit. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "message": "The user's Drive storage quota has been exceeded.",
            "reason": "storageQuotaExceeded"
          }
        ],
        "code": 403,
        "message": "The user's Drive storage quota has been exceeded."
      }
    }

To fix this error:

- Review the storage limits for your Drive account. For more information, refer to Drive storage and upload limits.

- Free up space or get more storage from Google One.

Resolve a 403 error: The user has not granted the app {appId} {verb} access to the file {fileId}

An appNotAuthorizedToFile error occurs when your app is not on the ACL for the file. This error prevents the user from opening the file with your app. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "appNotAuthorizedToFile",
            "message": "The user has not granted the app {appId} {verb} access to the file {fileId}."
          }
        ],
        "code": 403,
        "message": "The user has not granted the app {appId} {verb} access to the file {fileId}."
      }
    }

To fix this error, try any of the following:

- Open the Google Drive picker and prompt the user to open the file.

- Instruct the user to open the file using the Open with context menu in the Drive UI of your app.

You can also check the isAppAuthorized field on a file to verify that your app created or opened the file.

Resolve a 403 error: The user does not have sufficient permissions for file {fileId}

An insufficientFilePermissions error occurs when the user doesn’t have write access to a file, and your app is attempting to modify the file. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "insufficientFilePermissions",
            "message": "The user does not have sufficient permissions for file {fileId}."
          }
        ],
        "code": 403,
        "message": "The user does not have sufficient permissions for file {fileId}."
      }
    }

To fix this error, instruct the user to contact the file’s owner and request edit access. You can also check user access levels in the metadata retrieved by files.get and display a read-only UI when permissions are missing.
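The read-only check could be sketched as follows. It assumes Drive API v3 metadata, where the access level is exposed as `capabilities.canEdit` when that field is requested from files.get; `is_read_only` is a hypothetical helper, and a missing field is treated as read-only to stay on the safe side.

```python
from typing import Any, Dict


def is_read_only(file_metadata: Dict[str, Any]) -> bool:
    """Return True unless the metadata explicitly says the user can edit."""
    capabilities = file_metadata.get("capabilities", {})
    return not capabilities.get("canEdit", False)


# Metadata shaped like a files.get response limited to the capabilities field.
viewer_metadata = {"id": "FILE_ID", "capabilities": {"canEdit": False}}
editor_metadata = {"id": "FILE_ID", "capabilities": {"canEdit": True}}

print(is_read_only(viewer_metadata), is_read_only(editor_metadata))
```

Checking this before rendering the editor lets the app avoid the 403 entirely instead of reacting to it.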

Resolve a 403 error: App with id {appId} cannot be used within the authenticated user’s domain

A domainPolicy error occurs when the policy for the user’s domain doesn’t allow access to Drive by your app. Following is the JSON representation of this error:

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "domainPolicy",
            "message": "The domain administrators have disabled Drive apps."
          }
        ],
        "code": 403,
        "message": "The domain administrators have disabled Drive apps."
      }
    }

To fix this error:

- Inform the user the domain doesn’t allow your app to access files in Drive.

- Instruct the user to contact the domain Admin to request access for your app.

Resolve a 403 error: Number of items in folder was exceeded

A numChildrenInNonRootLimitExceeded error occurs when the limit for a folder’s number of children (folders, files, and shortcuts) has been exceeded. There’s a 500,000 item limit for folders, files, and shortcuts directly in a folder. Items nested in subfolders don’t count against this 500,000 item limit. For more information on Drive folder limits, refer to Folder limits in Google Drive.

Resolve a 403 error: Number of items created by account was exceeded

An activeItemCreationLimitExceeded error occurs when the limit for the number of items, whether trashed or not, created by this account has been exceeded. All item types, including folders, count towards the limit.

Resolve a 404 error: File not found: {fileId}

The notFound error occurs when the user doesn’t have read access to a file, or the file doesn’t exist.

    {
      "error": {
        "errors": [
          {
            "domain": "global",
            "reason": "notFound",
            "message": "File not found {fileId}"
          }
        ],
        "code": 404,
        "message": "File not found: {fileId}"
      }
    }

To fix this error:

- Inform the user they don’t have read access to the file or the file doesn’t exist.

- Instruct the user to contact the file’s owner and request permission to the file.

Resolve a 429 error: Too many requests

A rateLimitExceeded error occurs when the user has sent too many requests in a given amount of time.

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "reason": "rateLimitExceeded",
            "message": "Rate Limit Exceeded"
          }
        ],
        "code": 429,
        "message": "Rate Limit Exceeded"
      }
    }

To fix this error, use exponential backoff to retry the request.

Resolve a 5xx error

A 5xx error occurs when an unexpected error arises while processing the request. This can be caused by various issues, including a request’s timing overlapping with another request or a request for an unsupported action, such as attempting to update permissions for a single page in a Google Site instead of the site itself.

To fix this error, use exponential backoff to retry the request.

Following is a list of 5xx errors:

- 500 Backend error

- 502 Bad Gateway

- 503 Service Unavailable

- 504 Gateway Timeout
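Pulling the retry advice in this guide together, a small predicate can decide whether a failed request is worth retrying at all. The sketch below retries on 429, on any 5xx status, and on the two 403 reasons for which this guide recommends backoff; `should_retry` is a hypothetical helper, and treating every other error as non-retryable is an assumption.

```python
from typing import Optional

# 403 reasons for which this guide suggests exponential backoff.
RETRYABLE_403_REASONS = {"userRateLimitExceeded", "rateLimitExceeded"}


def should_retry(status: int, reason: Optional[str] = None) -> bool:
    """Return True when retrying the request later is likely to help."""
    if status == 429 or 500 <= status <= 599:
        return True
    if status == 403 and reason in RETRYABLE_403_REASONS:
        return True
    # Permission, validation, and not-found errors need a different fix.
    return False


print(should_retry(503), should_retry(403, "insufficientFilePermissions"))
```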

After the recent Thunderbird update to version 91.2.1, I was asked to sign in again. After signing in, I was told that the app has reached its sign-in rate limit and the developer has to increase it. The full message is as follows:

Authorization Error

Error 403: rate_limit_exceeded

This app has reached its sign-in rate limit for now.

Google limits how quickly an app can get new users. You can try signing in again later or ask the developer (provider-for-google-calendar@googlegroups.com) to increase this app's sign-in rate limit.

If you are the developer for this app, you can request a sign-in rate limit increase.

These are the (censored) displayed request details:

login_hint:

hl: en-GB

response_type: code

redirect_uri: urn:ietf:wg:oauth:2.0:oob:auto

client_id: <maybe an identifying string?>.apps.googleusercontent.com

access_type: offline

scope: https://www.googleapis.com/auth/calendar https://www.googleapis.com/auth/tasks

403 userRateLimitExceeded is basically flood protection. Your application can make a max of 10 requests a second for your user. User is defined as IP address unless you send QuotaUser along with your request.

The per-user limit from the Developer Console has been reached.

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "reason": "userRateLimitExceeded",
            "message": "User Rate Limit Exceeded"
          }
        ],
        "code": 403,
        "message": "User Rate Limit Exceeded"
      }
    }

403 rateLimitExceeded is the same thing, just with a different name. Why there are two, I can't tell you.

    {
      "error": {
        "errors": [
          {
            "domain": "usageLimits",
            "message": "Rate Limit Exceeded",
            "reason": "rateLimitExceeded"
          }
        ],
        "code": 403,
        "message": "Rate Limit Exceeded"
      }
    }

In both cases you should implement exponential backoff and try the request again, just slower.
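Sending a quotaUser value along with each request could look something like the sketch below, which appends it as a query parameter. `with_quota_user` is a hypothetical helper, and the example URL and user ID are placeholders.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_quota_user(url: str, user_id: str) -> str:
    """Return url with a quotaUser query parameter set to user_id."""
    parts = urlsplit(url)
    # Drop any existing quotaUser value so the parameter appears only once.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "quotaUser"
    ]
    query.append(("quotaUser", user_id))
    return urlunsplit(parts._replace(query=urlencode(query)))


tagged_url = with_quota_user(
    "https://www.googleapis.com/drive/v3/files?pageSize=10", "user-123"
)
print(tagged_url)
```

With a distinct value per end user, requests coming from one server IP are no longer counted as a single user.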

In this article you will learn how to get rid of the rate_limit_exceeded error that appears in the Thunderbird mail client.

Enjoy reading!


Environment:

- Thunderbird 91.8.0 (64-bit)

Problem description

When Thunderbird synchronizes mail, Google accounts ask for the Gmail account login and password. After the credentials are entered, the following error appears:

Authorization Error

Error 403: rate_limit_exceeded

This app has reached its sign-in rate limit for now.

Google limits how quickly an app can get new users. You can try signing in again later or ask the developer (provider-for-google-calendar@googlegroups.com) to increase this app’s sign-in rate limit.

If you are the developer for this app, you can request a sign-in rate limit increase.

The request details show:

login_hint: xxxxxxx.yyyyyyyyyy@gmail.com

hl: de

response_type: code

redirect_uri: urn:ietf:wg:oauth:2.0:oob:auto

client_id: .apps.googleusercontent.com

access_type: offline

scope: https://www.googleapis.com/auth/calendar https://www.googleapis.com/auth/tasks

Cause of the problem

The problem lies in the calendars of the Gmail accounts. The mail program’s requests to synchronize the account calendars cause the limit to be exceeded.

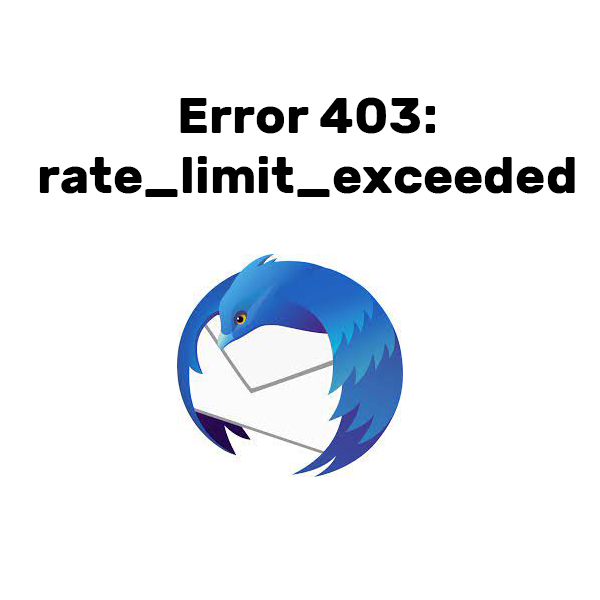

Solution

1. Sign in to the Google account from which the Thunderbird mail client collects mail. To do this, go to google.by (or .com, .ru) and sign in to your account. Click the 9 gray dots in the upper-right corner (Google apps) and select Calendars there:

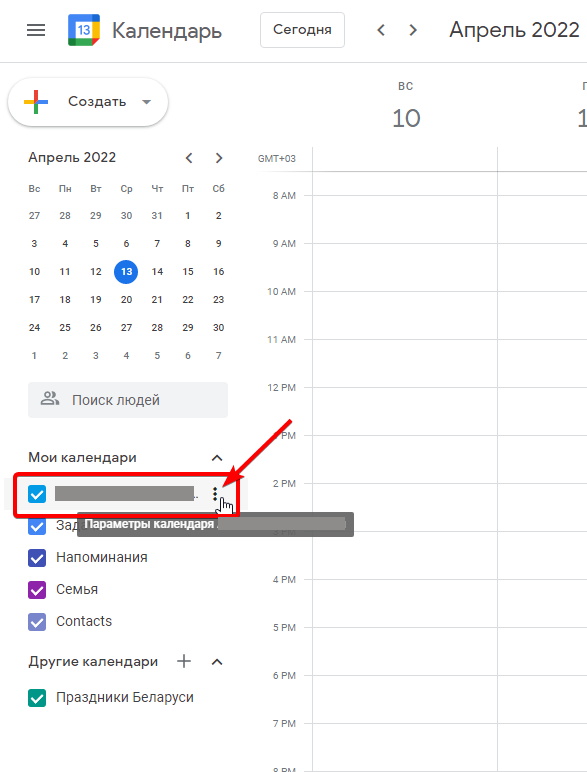

2. At the bottom left, under "My calendars", select the calendar whose name matches the account login and click the three gray dots:

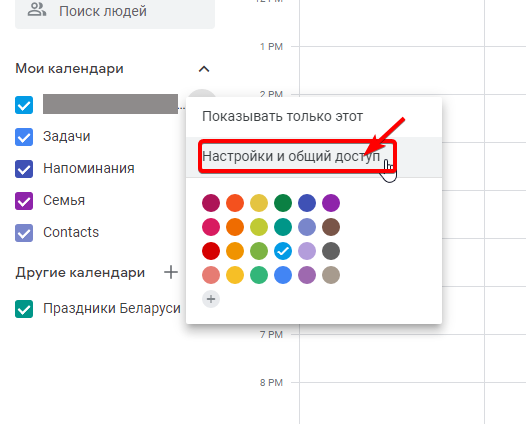

3. In the menu that appears, select "Settings and sharing":

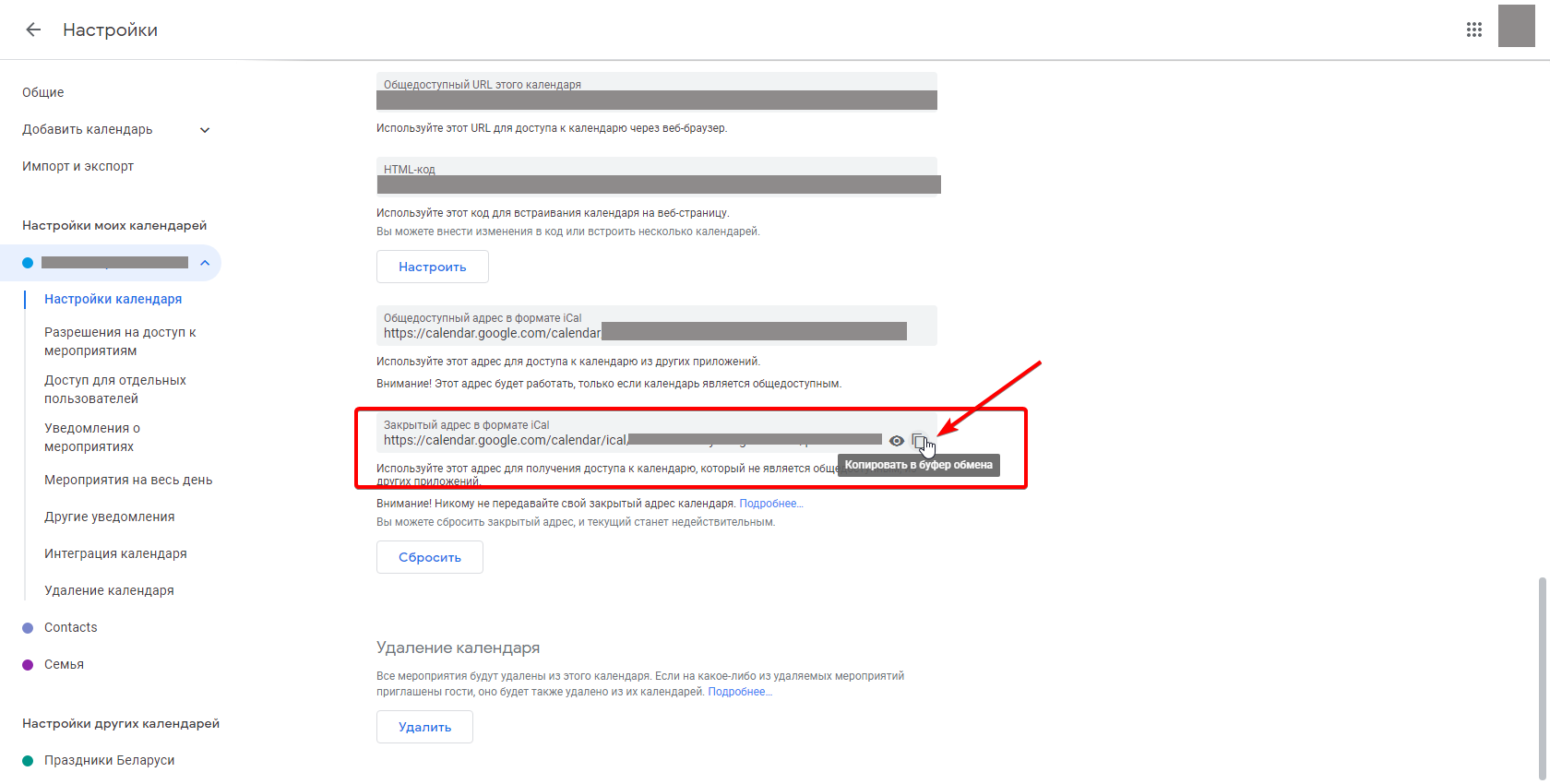

4. On the settings page, scroll to the very bottom and find the "Secret address in iCal format" setting. It is hidden at first. Click the "Copy" icon.

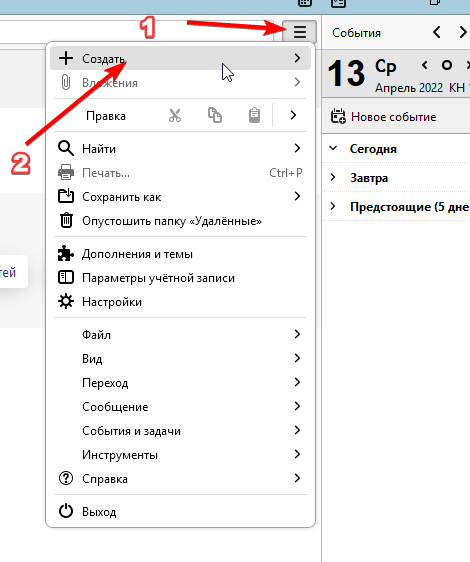

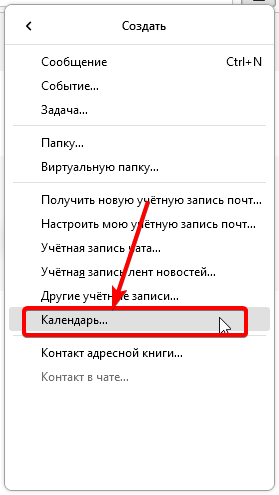

5. Next, open Thunderbird, click the three bars in the upper-right corner, and then click "New":

6. Select "Calendar…"

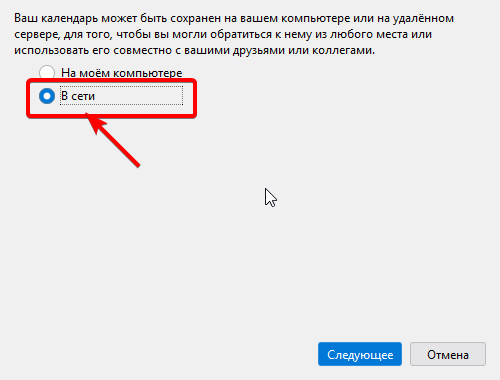

7. Select "On the Network"

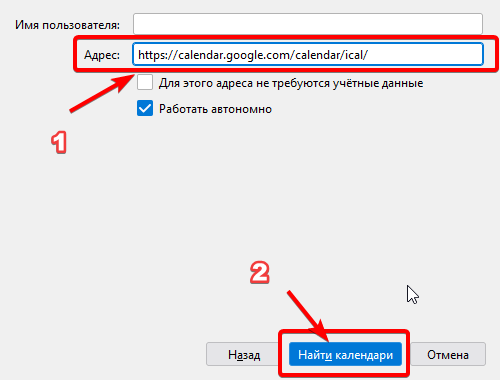

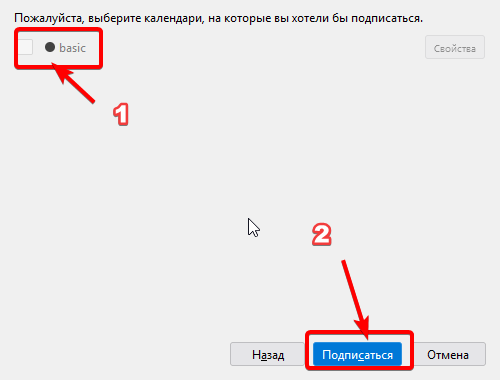

8. Paste the address copied in step 4 into the "Location" field and click "Find Calendars".

9. Select your calendar and click "Subscribe".

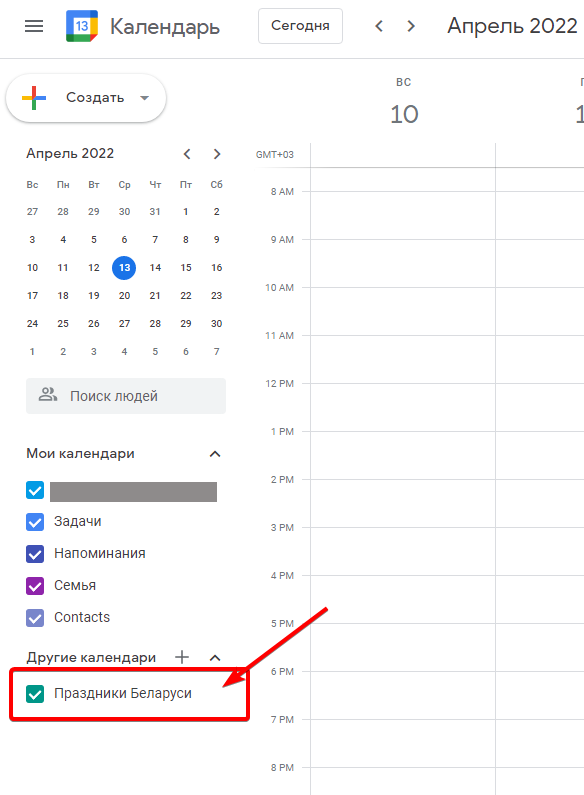

10. Return to the Google account’s calendars and repeat steps 2 through 9 for the "Holidays in Belarus" calendar.

11. The mail retrieval error is fixed.


The Google Drive API returns 2 levels of error information:

- HTTP error codes and messages in the header.

- A JSON object in the response body with additional details that can help you

determine how to handle the error.

Drive apps should catch and handle all errors that might be encountered when

using the REST API. This guide provides instructions on how to resolve specific

API errors.

Resolve a 400 error: Bad request

This error can result from any one of the following issues in your code:

- A required field or parameter hasn’t been provided.

- The value supplied or a combination of provided fields is invalid.

- You tried to add a duplicate parent to a Drive file.

- You tried to add a parent that would create a cycle in the directory graph.

Following is a sample JSON representation of this error:

{

"error": {

"code": 400,

"errors": [

{

"domain": "global",

"location": "orderBy",

"locationType": "parameter",

"message": "Sorting is not supported for queries with fullText terms. Results are always in descending relevance order.",

"reason": "badRequest"

}

],

"message": "Sorting is not supported for queries with fullText terms. Results are always in descending relevance order."

}

}

To fix this error, check the message field and adjust your code accordingly.

Resolve a 400 error: Invalid sharing request

This error can occur for several reasons. To determine the limit that has been

exceeded, evaluate the reason field of the returned JSON. This error most

commonly occurs because:

- Sharing succeeded, but the notification email was not correctly delivered.

- The Access Control List (ACL) change is not allowed for this user.

The message field indicates the actual error.

Sharing succeeded, but the notification email was not correctly delivered

Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"reason": "invalidSharingRequest",

"message": "Bad Request. User message: "Sorry, the items were successfully shared but emails could not be sent to email@domain.com.""

}

],

"code": 400,

"message": "Bad Request"

}

}

To fix this error, inform the user (sharer) they were unable to share because

the notification email couldn’t be sent to the email address they want to share

with. The user should ensure they have the correct email address and that it can

receive email.

The ACL change is not allowed for this user

Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"reason": "invalidSharingRequest",

"message": "Bad Request. User message: "ACL change not allowed.""

}

],

"code": 400,

"message": "Bad Request"

}

}

To fix this error, check the sharing settings of the Google Workspace domain to which the file belongs. The settings might

prohibit sharing outside of the domain or sharing a shared drive might not be

permitted.

Resolve a 401 error: Invalid credentials

A 401 error indicates the access token that you’re using is either expired or

invalid. This error can also be caused by missing authorization for the

requested scopes. Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"reason": "authError",

"message": "Invalid Credentials",

"locationType": "header",

"location": "Authorization",

}

],

"code": 401,

"message": "Invalid Credentials"

}

}

To fix this error, refresh the access token using the long-lived refresh token.

If this fails, direct the user through the OAuth flow, as described in

API-specific authorization and authentication information.

Resolve a 403 error

An error 403 occurs when a usage limit has been exceeded or the user doesn’t

have the correct privileges. To determine the specific type of error, evaluate

the reason field of the returned JSON. This error occurs for the following

situations:

- The daily limit was exceeded.

- The user rate limit was exceeded.

- The project rate limit was exceeded.

- The sharing rate limit was exceeded.

- The user hasn’t granted your app rights to a file.

- The user doesn’t have sufficient permissions for a file.

- Your app can’t be used within the signed in user’s domain.

- Number of items in a folder was exceeded.

For information on Drive API limits, refer to

Usage limits. For information on Drive folder limits,

refer to

Folder limits in Google Drive.

Resolve a 403 error: Daily limit exceeded

A dailyLimitExceeded error indicates the courtesy API limit for your project

has been reached. Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "usageLimits",

"reason": "dailyLimitExceeded",

"message": "Daily Limit Exceeded"

}

],

"code": 403,

"message": "Daily Limit Exceeded"

}

}

This error appears when the application’s owner has set a quota limit to cap

usage of a particular resource. To fix this error,

remove any usage caps for the «Queries per day» quota.

Resolve a 403 error: User rate limit exceeded

A userRateLimitExceeded error indicates the per-user limit has been reached.

This might be a limit from the Google API Console or a limit from the Drive

backend. Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "usageLimits",

"reason": "userRateLimitExceeded",

"message": "User Rate Limit Exceeded"

}

],

"code": 403,

"message": "User Rate Limit Exceeded"

}

}

To fix this error, try any of the following:

- Raise the per-user quota in the Google Cloud project. For more information,

request a quota increase.

- If one user is making numerous requests on behalf of many users of a Google Workspace account, consider a

service account with domain-wide delegation

using the

quotaUserparameter. - Use exponential backoff to retry the

request.

For information on Drive API limits, refer to

Usage limits.

Resolve a 403 error: Project rate limit exceeded

A rateLimitExceeded error indicates the project’s rate limit has been reached.

This limit varies depending on the type of requests. Following is the JSON

representation of this error:

{

"error": {

"errors": [

{

"domain": "usageLimits",

"message": "Rate Limit Exceeded",

"reason": "rateLimitExceeded",

}

],

"code": 403,

"message": "Rate Limit Exceeded"

}

}

To fix this error, try any of the following:

- Raise the per-user quota in the Google Cloud project. For more information,

request a quota increase. - Batch requests to make fewer

API calls. - Use exponential backoff to retry the

request.

Resolve a 403 error: Sharing rate limit exceeded

A sharingRateLimitExceeded error occurs when the user has reached a sharing

limit. This error is often linked with an email limit. Following is the JSON

representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"message": "Rate limit exceeded. User message: "These item(s) could not be shared because a rate limit was exceeded: filename",

"reason": "sharingRateLimitExceeded",

}

],

"code": 403,

"message": "Rate Limit Exceeded"

}

}

To fix this error:

- Do not send emails when sharing large amounts of files.

- If one user is making numerous requests on behalf of many users of a Google Workspace account, consider a

service account with domain-wide delegation

using the

quotaUserparameter.

Resolve a 403 error: Storage quota exceeded

A storageQuotaExceeded error occurs when the user has reached their storage

limit. Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"message": "The user's Drive storage quota has been exceeded.",

"reason": "storageQuotaExceeded",

}

],

"code": 403,

"message": "The user's Drive storage quota has been exceeded."

}

}

To fix this error:

-

Review the storage limits for your Drive account. For more information,

refer to

Drive storage and upload limits -

Free up space

or get more storage from Google One.

Resolve a 403 error: The user has not granted the app {appId} {verb} access to the file {fileId}

An appNotAuthorizedToFile error occurs when your app is not on the ACL for the

file. This error prevents the user from opening the file with your app.

Following is the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"reason": "appNotAuthorizedToFile",

"message": "The user has not granted the app {appId} {verb} access to the file {fileId}."

}

],

"code": 403,

"message": "The user has not granted the app {appId} {verb} access to the file {fileId}."

}

}

To fix this error, try any of the following:

- Open the Google Drive picker

and prompt the user to open the file. - Instruct the user to open the file using the

Open with context menu in the Drive

UI of your app.

You can also check the isAppAuthorized field on a file to verify that your app

created or opened the file.

Resolve a 403 error: The user does not have sufficient permissions for file {fileId}

A insufficientFilePermissions error occurs when the user doesn’t have write

access to a file, and your app is attempting to modify the file. Following is

the JSON representation of this error:

{

"error": {

"errors": [

{

"domain": "global",

"reason": "insufficientFilePermissions",

"message": "The user does not have sufficient permissions for file {fileId}."

}

],

"code": 403,

"message": "The user does not have sufficient permissions for file {fileId}."

}

}

To fix this error, instruct the user to contact the file’s owner and request

edit access. You can also check user access levels in the metadata retrieved by

files.get and display a read-only UI when

permissions are missing.

Resolve a 403 error: App with id {appId} cannot be used within the authenticated user’s domain

A domainPolicy error occurs when the policy for the user’s domain doesn’t

allow access to Drive by your app. Following is the JSON representation of this

error:

{
  "error": {
    "errors": [
      {
        "domain": "global",
        "reason": "domainPolicy",
        "message": "The domain administrators have disabled Drive apps."
      }
    ],
    "code": 403,
    "message": "The domain administrators have disabled Drive apps."
  }
}

To fix this error:

- Inform the user the domain doesn’t allow your app to access files in Drive.

- Instruct the user to contact the domain Admin to request access for your

app.

Resolve a 403 error: Number of items in folder was exceeded

A numChildrenInNonRootLimitExceeded error occurs when the limit for a folder’s

number of children (folders, files, and shortcuts) has been exceeded. There’s a

500,000 item limit for folders, files, and shortcuts directly in a folder. Items

nested in subfolders don’t count against this 500,000 item limit. For more

information on Drive folder limits, refer to Folder limits in Google Drive.

Resolve a 403 error: Number of items created by account was exceeded

An activeItemCreationLimitExceeded error occurs when the limit for the number

of items, whether trashed or not, created by this account has been exceeded. All

item types, including folders, count towards the limit.

Resolve a 404 error: File not found: {fileId}

The notFound error occurs when the user doesn’t have read access to a file, or

the file doesn’t exist.

{
  "error": {
    "errors": [
      {
        "domain": "global",
        "reason": "notFound",
        "message": "File not found {fileId}"
      }
    ],
    "code": 404,
    "message": "File not found: {fileId}"
  }
}

To fix this error:

- Inform the user they don’t have read access to the file or the file doesn’t exist.

- Instruct the user to contact the file’s owner and request permission to the file.

Resolve a 429 error: Too many requests

A rateLimitExceeded error occurs when the user has sent too many requests in a

given amount of time.

{
  "error": {
    "errors": [
      {
        "domain": "usageLimits",
        "reason": "rateLimitExceeded",
        "message": "Rate Limit Exceeded"
      }
    ],
    "code": 429,
    "message": "Rate Limit Exceeded"
  }
}

To fix this error, use

exponential backoff to retry the request.
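A minimal sketch of exponential backoff follows. It is not taken from any Google client library: RetryableError, call_with_backoff, and the default delays are hypothetical names and values used for illustration, and the caller is assumed to raise RetryableError when a response such as a 429 is worth retrying.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical marker for responses worth retrying (for example, a 429)."""


def call_with_backoff(request, max_attempts=5, base_delay=1.0, max_delay=32.0,
                      sleep=time.sleep):
    """Call request() until it succeeds or max_attempts is reached."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the last error to the caller.
            # Wait 1s, 2s, 4s, ... capped at max_delay, plus up to one second
            # of random jitter so that many clients do not retry in lockstep.
            delay = min(base_delay * (2 ** attempt), max_delay)
            sleep(delay + random.uniform(0, 1))
```

The sleep parameter exists only so the waiting behavior can be replaced in tests; in normal use the default time.sleep applies.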

Resolve a 5xx error

A 5xx error occurs when an unexpected error arises while processing the request.

This can be caused by various issues, including a request’s timing overlapping

with another request or a request for an unsupported action, such as attempting

to update permissions for a single page in a Google Site instead of the site

itself.

To fix this error, use

exponential backoff to retry the request.

Following is a list of 5xx errors:

- 500 Backend error

- 502 Bad Gateway

- 503 Service Unavailable

- 504 Gateway Timeout

How to fix the Runtime Code 403 Rate-limit exceeded

This article covers error Code 403, commonly known as Rate-limit exceeded and described as "Google Error 403. Rate-limit exceeded."

About Runtime Code 403

Runtime Code 403 happens when Picasa fails or crashes whilst it’s running, hence its name. It doesn’t necessarily mean that the code was corrupt in some way, but just that it did not work during its run-time. This kind of error will appear as an annoying notification on your screen unless handled and corrected. Here are symptoms, causes and ways to troubleshoot the problem.

Definitions (Beta)

Here we list some definitions for the words contained in your error, in an attempt to help you understand your problem. This is a work in progress, so sometimes we might define the word incorrectly, so feel free to skip this section!

- Limit — Relates to any sort of limit applied to data or resources, e.g limiting the size or value of a variable, limiting the rate of incoming traffic or CPU usage

- Rate — A measure, quantity, or frequency, typically one measured against some other quantity or measure.

- Google+ — Integrate applications or websites with the Google+ platform

Symptoms of Code 403 — Rate-limit exceeded

Runtime errors happen without warning. The error message can come up on the screen any time Picasa is run. In fact, the error message or some other dialogue box can come up again and again if not addressed early on.

Files may be deleted or new files may appear. Although this symptom is largely due to virus infection, it can also be a symptom of a runtime error, because virus infection is one of the causes of runtime errors. Users may also experience a sudden drop in internet connection speed, but this is not always the case.

Causes of Rate-limit exceeded — Code 403

During software design, programmers code anticipating the occurrence of errors. However, there are no perfect designs, as errors can be expected even with the best program design. Glitches can happen during runtime if a certain error is not experienced and addressed during design and testing.

Runtime errors are generally caused by incompatible programs running at the same time. It may also occur because of memory problem, a bad graphics driver or virus infection. Whatever the case may be, the problem must be resolved immediately to avoid further problems. Here are ways to remedy the error.

Repair Methods

Runtime errors may be annoying and persistent, but the situation is not hopeless: repairs are available. Here are ways to do it.

Please note: Neither ErrorVault.com nor its writers claim responsibility for the results of the actions taken from employing any of the repair methods listed on this page; you complete these steps at your own risk.

Method 7 — IE related Runtime Error

If the error you are getting is related to the Internet Explorer, you may do the following:

- Reset your browser.

- For Windows 7, you may click Start, go to Control Panel, then click Internet Options on the left side. Then you can click Advanced tab then click the Reset button.

- For Windows 8 and 10, you may click search and type Internet Options, then go to Advanced tab and click Reset.

- Disable script debugging and error notifications.

- On the same Internet Options window, you may go to Advanced tab and look for Disable script debugging

- Put a check mark in its check box

- At the same time, uncheck the «Display a Notification about every Script Error» item and then click Apply and OK, then reboot your computer.

If these quick fixes do not work, you can always backup files and run repair reinstall on your computer. However, you can do that later when the solutions listed here did not do the job.

Method 1 — Close Conflicting Programs

When you get a runtime error, keep in mind that it is happening due to programs that are conflicting with each other. The first thing you can do to resolve the problem is to stop these conflicting programs.

- Open Task Manager by pressing Ctrl-Alt-Del at the same time. This will let you see the list of programs currently running.

- Go to the Processes tab and stop the programs one by one by highlighting each program and clicking the End Process button.

- You will need to observe if the error message will reoccur each time you stop a process.

- Once you get to identify which program is causing the error, you may go ahead with the next troubleshooting step, reinstalling the application.

Method 2 — Update / Reinstall Conflicting Programs

Using Control Panel

- For Windows 7, click the Start Button, then click Control panel, then Uninstall a program

- For Windows 8, click the Start Button, then scroll down and click More Settings, then click Control panel > Uninstall a program.

- For Windows 10, just type Control Panel on the search box and click the result, then click Uninstall a program

- Once inside Programs and Features, click the problem program and click Update or Uninstall.

- If you chose to update, then you will just need to follow the prompt to complete the process, however if you chose to Uninstall, you will follow the prompt to uninstall and then re-download or use the application’s installation disk to reinstall the program.

Using Other Methods

- For Windows 7, you can find the list of all installed programs by clicking Start and moving your mouse over the list that appears. That list may include a utility for uninstalling the program, which you can use to uninstall it.

- For Windows 10, you may click Start, then Settings, then choose Apps.

- Scroll down to see the list of Apps and features installed in your computer.

- Click the Program which is causing the runtime error, then you may choose to uninstall or click Advanced options to reset the application.

Method 3 — Update your Virus protection program or download and install the latest Windows Update

A virus infection that causes runtime errors on your computer must be prevented, quarantined, or deleted immediately. Make sure you update your antivirus program and run a thorough scan of the computer, or run Windows Update so you can get the latest virus definitions and fixes.

Method 4 — Re-install Runtime Libraries

You might be getting the error because of an update, like the MS Visual C++ package which might not be installed properly or completely. What you can do then is to uninstall the current package and install a fresh copy.

- Uninstall the package by going to Programs and Features, find and highlight the Microsoft Visual C++ Redistributable Package.

- Click Uninstall on top of the list, and when it is done, reboot your computer.

- Download the latest redistributable package from Microsoft then install it.

Method 5 — Run Disk Cleanup

You might also be experiencing a runtime error because of very low free space on your computer.

- You should consider backing up your files and freeing up space on your hard drive

- You can also clear your cache and reboot your computer

- You can also run Disk Cleanup: open an Explorer window and right-click your main drive (this is usually C:)

- Click Properties and then click Disk Cleanup

Method 6 — Reinstall Your Graphics Driver

If the error is related to a bad graphics driver, then you may do the following:

- Open your Device Manager, locate the graphics driver

- Right click the video card driver then click uninstall, then restart your computer

About The Author: Phil Hart has been a Microsoft Community Contributor since 2010. With a current point score over 100,000, they’ve contributed more than 3000 answers in the Microsoft Support forums and have created almost 200 new help articles in the Technet Wiki.

Last Updated:

02/06/22 01:46 : A Windows 10 user voted that repair method 1 worked for them.

Article ID: ACX010161EN

Applies To: Windows 10, Windows 8.1, Windows 7, Windows Vista, Windows XP, Windows 2000

Microsoft & Windows® logos are registered trademarks of Microsoft. Disclaimer: ErrorVault.com is not affiliated with Microsoft, nor does it claim such affiliation. This page may contain definitions from https://stackoverflow.com/tags under the CC-BY-SA license. The information on this page is provided for informational purposes only. © Copyright 2018

BigQuery has various quotas and limits

that limit the rate and volume of different requests and operations. They exist

both to protect the infrastructure and to help guard against unexpected

customer usage. This document describes how to diagnose and mitigate

specific errors resulting from quotas and limits.

If your error message is not listed in this document, then refer to

the list of error messages which has more

generic error information.

Overview

If a BigQuery operation fails because of exceeding a quota, the API

returns the HTTP 403 Forbidden status code. The response body contains more

information about the quota that was reached. The response body looks similar to

the following:

{
  "code" : 403,
  "errors" : [ {
    "domain" : "global",
    "message" : "Quota exceeded: ...",
    "reason" : "quotaExceeded"
  } ],
  "message" : "Quota exceeded: ..."
}

The message field in the payload describes which limit was exceeded. For example, the message field might say Exceeded rate limits: too many table update operations for this table.

In general, quota limits fall into two categories, indicated by the reason

field in the response payload.

- rateLimitExceeded. This value indicates a short-term limit. To resolve these limit issues, retry the operation after a few seconds. Use exponential backoff between retry attempts. That is, exponentially increase the delay between each retry.

- quotaExceeded. This value indicates a longer-term limit. If you reach a longer-term quota limit, you should wait 10 minutes or longer before trying the operation again. If you consistently reach one of these longer-term quota limits, you should analyze your workload for ways to mitigate the issue. Mitigations can include optimizing your workload or requesting a quota increase.

For quotaExceeded errors, examine the error message to understand which quota

limit was exceeded. Then, analyze your workload to see if you can avoid reaching

the quota. For example, optimizing query performance can mitigate quota errors

for concurrent queries.
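The two categories suggest different waiting strategies, which can be sketched as follows. This assumes the response body has the shape shown in the Overview example; suggested_wait_seconds is a hypothetical helper, and starting the short-term delay at one second is an illustrative choice rather than a documented value.

```python
import json


def suggested_wait_seconds(response_body: str, attempt: int = 0) -> float:
    """Suggest a wait before retrying, based on the first error's reason."""
    payload = json.loads(response_body)
    errors = payload.get("errors", [])
    reason = errors[0].get("reason") if errors else None
    if reason == "rateLimitExceeded":
        # Short-term limit: a few seconds, doubling with each attempt
        # (1s, 2s, 4s, ...). The one-second starting point is an assumption.
        return float(2 ** attempt)
    if reason == "quotaExceeded":
        # Longer-term limit: wait 10 minutes or longer before trying again.
        return 600.0
    raise ValueError(f"not a quota error: {reason!r}")
```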

In some cases, the quota can be raised by

contacting BigQuery support or

contacting Google Cloud sales, but we recommend trying the

suggestions in this document first.

Diagnosis

To diagnose issues, do the following:

- Use INFORMATION_SCHEMA views to analyze the underlying issue. These views contain metadata about your BigQuery resources, including jobs, reservations, and streaming inserts.

  For example, the following query uses the INFORMATION_SCHEMA.JOBS view to list all quota-related errors within the past day:

  SELECT
    job_id,
    creation_time,
    error_result
  FROM `region-us`.INFORMATION_SCHEMA.JOBS
  WHERE creation_time > TIMESTAMP_SUB(CURRENT_TIMESTAMP, INTERVAL 1 DAY)
    AND error_result.reason IN ('rateLimitExceeded', 'quotaExceeded')

- View errors in Cloud Audit Logs.

  For example, using Logs Explorer, the following query returns errors with either Quota exceeded or limit in the message string:

  resource.type = ("bigquery_project" OR "bigquery_dataset")
  protoPayload.status.code ="7"
  protoPayload.status.message: ("Quota exceeded" OR "limit")

  In this example, the status code 7 indicates PERMISSION_DENIED, which corresponds to the HTTP 403 status code.

  For additional Cloud Audit Logs query samples, see BigQuery queries.

Concurrent queries quota errors

If a project is simultaneously running more interactive queries

than the assigned limit for that project, you might encounter this error.

For more information about this limit, see the Maximum number of concurrent interactive

queries limit.

Error message

Exceeded rate limits: too many concurrent queries for this project_and_region

Diagnosis

If you haven’t identified the query jobs that are returning this error, do the

following:

- Check for other queries that are running concurrently with the failed queries.

  For example, if your failed query was submitted on 2021-06-08 12:00:00 UTC in the us region, run the following query against the INFORMATION_SCHEMA.JOBS view in the project where the failed query was submitted:

  DECLARE failed_query_submission_time DEFAULT CAST('2021-06-08 12:00:00' AS TIMESTAMP);

  SELECT
    job_id,
    state,
    user_email,
    query
  FROM `region-us`.INFORMATION_SCHEMA.JOBS_BY_PROJECT
  WHERE creation_time >= date_sub(failed_query_submission_time, INTERVAL 1 DAY)
    AND job_type = 'QUERY'
    AND priority = 'INTERACTIVE'
    AND start_time <= failed_query_submission_time
    AND (end_time >= failed_query_submission_time OR state != 'DONE')

- If your query to INFORMATION_SCHEMA.JOBS_BY_PROJECT fails with the same error, then run the bq ls command in the Cloud Shell terminal to list the queries that are running:

  bq ls -j --format=prettyjson -n 200 | jq '.[] | select(.status.state=="RUNNING")| {configuration: .query, id: .id, jobReference: .jobReference, user_email: .user_email}'

Resolution

To resolve this quota error, do the following:

- Pause the job. If your preceding diagnosis identifies a process or a workflow responsible for an increase in queries, then pause that process or workflow.

- Use jobs with batch priority. Batch queries don’t count towards your concurrent rate limit. Running batch queries can allow you to start many queries at once. Batch queries use the same resources as interactive (on-demand) queries. BigQuery queues each batch query on your behalf, and starts the query when idle resources are available in the BigQuery shared resource pool.

  Alternatively, you can also create a separate project to run queries.

- Distribute queries. Organize and distribute the load across different projects as informed by the nature of your queries and your business needs.

- Distribute run times. Distribute the load across a larger time frame. If your reporting solution needs to run many queries, try to introduce some randomness for when queries start. For example, don’t start all reports at the same time.

- Use BigQuery BI Engine. If you have encountered this error while using a business intelligence (BI) tool to create dashboards that query data in BigQuery, then we recommend that you use BigQuery BI Engine. Using BigQuery BI Engine is optimal for this use case.

- Optimize queries and data model. Oftentimes, a query can be rewritten so that it runs more efficiently. For example, if your query contains a common table expression (CTE), that is, a WITH clause, which is referenced in more than one place in the query, then this computation is done multiple times. It is better to persist calculations done by the CTE in a temporary table, and then reference it in the query.

  Multiple joins can also be a source of inefficiency. In this case, you might want to consider using nested and repeated columns. Using this often improves locality of the data, eliminates the need for some joins, and overall reduces resource consumption and the query run time.

  Optimizing queries makes them cheaper, so when you use flat-rate pricing, you can run more queries with your slots. For more information, see Introduction to optimizing query performance.

- Optimize query model. BigQuery is not a relational database. It is not optimized for an infinite number of small queries. Running a large number of small queries quickly depletes your quotas. Such queries don’t run as efficiently as they do with the smaller database products. BigQuery is a large data warehouse and this is its primary use case. It performs best with analytical queries over large amounts of data.

- Persist data (Saved tables). Pre-process the data in BigQuery and store it in additional tables. For example, if you execute many similar, computationally-intensive queries with different WHERE conditions, then their results are not cached. Such queries also consume resources each time they run. You can improve the performance of such queries and decrease their processing time by pre-computing the data and storing it in a table. This pre-computed data in the table can be queried by SELECT queries. It can often be done during ingestion within the ETL process, or by using scheduled queries or materialized views.

- Increase quota limits. You can also increase the quota limits to resolve this error. To raise the limit, contact support or contact sales. Requesting a quota increase might take several days to process. To provide more information for your request, we recommend that your request includes the priority of the job, the user running the query, and the affected method.

  Limits are applied at the project level. However, increasing the number of concurrent jobs per project reduces the number of available slots for each concurrently running query, which might reduce performance of individual queries. To improve the performance, we recommend that you increase the number of slots if the concurrent queries limit is increased.

  To learn more about raising this limit, see Quotas and limits. For more information about slots, see slot reservation.
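The "Distribute run times" suggestion in the list above can be sketched as follows. This is an illustration under stated assumptions, not a BigQuery feature: staggered_start_offsets is a hypothetical helper, and the scheduler that would consume its output is assumed to exist elsewhere.

```python
import random


def staggered_start_offsets(report_names, window_seconds, rng=None):
    """Assign each report a random start offset (in seconds) within the window."""
    # Assumption: a scheduler elsewhere delays each report by its offset.
    rng = rng or random.Random()
    return {name: rng.uniform(0, window_seconds) for name in report_names}
```

For example, calling staggered_start_offsets(["sales", "inventory"], 600) spreads the two reports over a ten-minute window instead of starting both at once. Passing a seeded random.Random makes the schedule reproducible.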

Number of partition modifications for column-partitioned tables quota errors

BigQuery returns this error when your column-partitioned table

reaches the

quota of the number of partition modifications permitted per day.

Partition modifications include the total of all load jobs,

copy jobs, and query jobs

that append or overwrite a destination partition, or that

use a DML DELETE, INSERT,

MERGE, TRUNCATE TABLE, or UPDATE statement to write data to a table.

To see the value of the Number of partition

modifications per column-partitioned table per day limit, see Partitioned

tables.

Error message

Quota exceeded: Your table exceeded quota for Number of partition modifications to a column partitioned table

Resolution

This quota cannot be increased. To resolve this quota error, do the following:

- Change the partitioning on the table to have more data in each partition, in order to decrease the total number of partitions. For example, change from partitioning by day to partitioning by month or change how you partition the table.

- Use clustering instead of partitioning.

- If you frequently load data from multiple small files stored in Cloud Storage using a job per file, then combine multiple load jobs into a single job. You can load from multiple Cloud Storage URIs with a comma-separated list (for example, gs://my_path/file_1,gs://my_path/file_2), or by using wildcards (for example, gs://my_path/*).

  For more information, see Batch loading data.

- If you use single-row queries (that is, INSERT statements) to write data to a table, consider batching multiple queries into one to reduce the number of jobs. BigQuery doesn’t perform well when used as a relational database, so executing single-row INSERT statements at a high speed is not a recommended best practice.

- If you intend to insert data at a high rate, consider using BigQuery Storage Write API. It is a recommended solution for high-performance data ingestion. The BigQuery Storage Write API has robust features, including exactly-once delivery semantics. To learn about limits and quotas, see Storage Write API and to see costs of using this API, see BigQuery data ingestion pricing.
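As an illustration of the batching advice in the list above, the following sketch groups rows so that each group can be written by one job instead of one job per row. The helper name batched and the batch size are hypothetical; how each batch is then submitted to BigQuery is outside the sketch.

```python
from itertools import islice


def batched(rows, batch_size):
    """Yield lists of up to batch_size rows from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(rows)
    while True:
        # Take the next batch_size rows; an empty list means the input is done.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch
```

With a batch size of 500, for instance, 10,000 single-row writes become 20 multi-row writes, which counts far fewer operations against a per-table daily limit.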

Streaming insert quota errors

This section gives some tips for troubleshooting quota errors related to

streaming data into BigQuery.

In certain regions, streaming inserts have a higher quota if you don’t populate

the insertId field for each row. For more information about quotas for

streaming inserts, see Streaming inserts.

The quota-related errors for BigQuery streaming depend on the

presence or absence of insertId.

Error message

If the insertId field is empty, the following quota error is possible:

| Quota limit | Error message |
|---|---|
| Bytes per second per project | Your entity with gaia_id: GAIA_ID, project: PROJECT_ID in region: REGION exceeded quota for insert bytes per second. |

If the insertId field is populated, the following quota errors are possible:

| Quota limit | Error message |
|---|---|
| Rows per second per project | Your project: PROJECT_ID in REGION exceeded quota for streaming insert rows per second. |
| Rows per second per table | Your table: TABLE_ID exceeded quota for streaming insert rows per second. |
| Bytes per second per table | Your table: TABLE_ID exceeded quota for streaming insert bytes per second. |

The purpose of the insertId field is to deduplicate inserted rows. If multiple

inserts with the same insertId arrive within a few minutes’ window,

BigQuery writes a single version of the record. However, this

automatic deduplication is not guaranteed. For maximum streaming throughput, we

recommend that you don’t include insertId and instead use

manual deduplication.

For more information, see

Ensuring data consistency.

Diagnosis

Use the STREAMING_TIMELINE_BY_*

views to analyze the streaming traffic. These views aggregate streaming

statistics over one-minute intervals, grouped by error code. Quota errors appear

in the results with error_code equal to RATE_LIMIT_EXCEEDED or

QUOTA_EXCEEDED.

Depending on the specific quota limit that was reached, look at total_rows or

total_input_bytes. If the error is a table-level quota, filter by table_id.

For example, the following query shows total bytes ingested per minute, and the

total number of quota errors:

SELECT
  start_timestamp,
  error_code,
  SUM(total_input_bytes) as sum_input_bytes,
  SUM(IF(error_code IN ('QUOTA_EXCEEDED', 'RATE_LIMIT_EXCEEDED'),
      total_requests, 0)) AS quota_error
FROM
  `region-us`.INFORMATION_SCHEMA.STREAMING_TIMELINE_BY_PROJECT
WHERE
  start_timestamp > TIMESTAMP_SUB(CURRENT_TIMESTAMP, INTERVAL 1 DAY)
GROUP BY
  start_timestamp,
  error_code
ORDER BY 1 DESC

Resolution

To resolve this quota error, do the following:

- If you are using the insertId field for deduplication, and your project is in a region that supports the higher streaming quota, we recommend removing the insertId field. This solution may require some additional steps to manually deduplicate the data. For more information, see Manually removing duplicates.

- If you are not using insertId, or if it’s not feasible to remove it, monitor your streaming traffic over a 24-hour period and analyze the quota errors:

  - If you see mostly RATE_LIMIT_EXCEEDED errors rather than QUOTA_EXCEEDED errors, and your overall traffic is below 80% of quota, the errors probably indicate temporary spikes. You can address these errors by retrying the operation using exponential backoff between retries.

  - If you are using a Dataflow job to insert data, consider using load jobs instead of streaming inserts. For more information, see Setting the insertion method. If you are using Dataflow with a custom I/O connector, consider using a built-in I/O connector instead. For more information, see Custom I/O patterns.

  - If you see QUOTA_EXCEEDED errors or the overall traffic consistently exceeds 80% of the quota, submit a request for a quota increase. For more information, see Requesting a higher quota limit.

  - You may also want to consider replacing streaming inserts with the newer Storage Write API which has higher throughput, lower price, and many useful features.
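The 24-hour analysis described above can be expressed as a small decision function. This is one possible reading of the guidance, not an official rule: recommend_streaming_action is a hypothetical helper, "mostly" is interpreted as a simple majority of the error counts, and the inputs are assumed to come from the STREAMING_TIMELINE_BY_* views.

```python
def recommend_streaming_action(rate_limit_errors, quota_errors, traffic, quota):
    """Suggest a next step from 24 hours of streaming statistics."""
    if quota <= 0:
        raise ValueError("quota must be positive")
    utilization = traffic / quota
    # Mostly short-term errors with traffic under 80% of quota: likely
    # temporary spikes, so retrying with backoff should be enough.
    if rate_limit_errors > quota_errors and utilization < 0.8:
        return "retry with exponential backoff"
    # Long-term errors, or traffic at or above 80% of quota.
    if quota_errors > 0 or utilization >= 0.8:
        return "request a quota increase"
    return "no action needed"
```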

Loading CSV files quota errors

If you load a large CSV file using the bq load command with the

--allow_quoted_newlines flag,

you might encounter this error.

Error message

Input CSV files are not splittable and at least one of the files is larger than the maximum allowed size. Size is: ...

Resolution

To resolve this quota error, do the following:

- Set the --allow_quoted_newlines flag to false.

- Split the CSV file into smaller chunks that are each less than 4 GB.
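Splitting a CSV file that contains quoted newlines cannot be done by cutting at line breaks, because a single row may span several lines. The sketch below parses rows with Python's csv module and starts a new chunk before a size budget is exceeded. split_csv and the path template are hypothetical, the file is assumed to be UTF-8 with a header row, and a single row larger than max_bytes would still produce an oversized chunk.

```python
import csv
import io


def _encoded_size(row):
    """Size in bytes of one row exactly as csv.writer would write it."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(row)
    return len(buffer.getvalue().encode("utf-8"))


def split_csv(source_path, chunk_path_template, max_bytes):
    """Split a CSV into chunks of at most max_bytes, repeating the header."""
    paths = []
    with open(source_path, newline="", encoding="utf-8") as source:
        reader = csv.reader(source)
        header = next(reader, None)
        if header is None:
            return paths  # Empty input: nothing to write.
        out, writer, written = None, None, 0
        for row in reader:
            row_bytes = _encoded_size(row)
            # Start a new chunk when this row would push the file over budget.
            if out is None or written + row_bytes > max_bytes:
                if out is not None:
                    out.close()
                path = chunk_path_template.format(len(paths))
                out = open(path, "w", newline="", encoding="utf-8")
                writer = csv.writer(out)
                writer.writerow(header)
                written = _encoded_size(header)
                paths.append(path)
            writer.writerow(row)
            written += row_bytes
        if out is not None:
            out.close()
    return paths
```

A call such as split_csv("big.csv", "part_{}.csv", 4 * 1024**3 - 1) would keep each chunk under the 4 GB figure mentioned above; the file names here are placeholders.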

For more information about limits that apply when you load data into

BigQuery, see Load jobs.

Table imports or query appends quota errors

BigQuery returns this error message when your table reaches the

limit for

table operations per day for Standard tables. Table operations include the

combined total of all load jobs,

copy jobs, and query jobs

that append or overwrite a destination table or that use

a DML DELETE, INSERT,

MERGE, TRUNCATE TABLE, or UPDATE statement to write data to a table.

To see the value of the Table operations per day limit, see Standard

tables.

Error message

Your table exceeded quota for imports or query appends per table

Diagnosis

If you have not identified the source from where most table operations are

originating, do the following:

- Make a note of the project, dataset, and table that the failed query, load, or the copy job is writing to.

- Use INFORMATION_SCHEMA.JOBS_BY_* tables to learn more about jobs that modify the table.

  The following example finds the hourly count of jobs grouped by job type for a 24-hour period using JOBS_BY_PROJECT. If you expect multiple projects to write to the table, replace JOBS_BY_PROJECT with JOBS_BY_ORGANIZATION.

  SELECT
    TIMESTAMP_TRUNC(creation_time, HOUR),
    job_type,
    count(1)
  FROM `region-us`.INFORMATION_SCHEMA.JOBS_BY_PROJECT
  #Adjust time
  WHERE creation_time BETWEEN "2021-06-20 00:00:00" AND "2021-06-21 00:00:00"
    AND destination_table.project_id = "my-project-id"
    AND destination_table.dataset_id = "my_dataset"
    AND destination_table.table_id = "my_table"
  GROUP BY 1, 2
  ORDER BY 1 DESC

Resolution

This quota cannot be increased. To resolve this quota error, do the following:

- If you frequently load data from multiple small files stored in Cloud Storage using a job per file, then combine multiple load jobs into a single job. You can load from multiple Cloud Storage URIs with a comma-separated list (for example, gs://my_path/file_1,gs://my_path/file_2), or by using wildcards (for example, gs://my_path/*).

  For more information, see Batch loading data.

- If you use single-row queries (that is, INSERT statements) to write data to a table, consider batching multiple queries into one to reduce the number of jobs. BigQuery doesn’t perform well when used as a relational database, so executing single-row INSERT statements at a high speed is not a recommended best practice.

- If you intend to insert data at a high rate, consider using BigQuery Storage Write API. It is a recommended solution for high-performance data ingestion. The BigQuery Storage Write API has robust features, including exactly-once delivery semantics. To learn about limits and quotas, see Storage Write API and to see costs of using this API, see BigQuery data ingestion pricing.

Maximum rate of table metadata update operations limit errors

BigQuery returns this error when your table reaches the limit for maximum rate of table metadata update operations per table for Standard tables.

Table operations include the combined total of all load jobs,

copy jobs, and query jobs

that append to or overwrite a destination table or that use

a DML DELETE, INSERT,

MERGE, TRUNCATE TABLE, or UPDATE to write data to a table.

To see the value of the Maximum rate of table metadata update

operations per table limit, see Standard tables.

Error message

Exceeded rate limits: too many table update operations for this table

Diagnosis

Metadata table updates can originate from API calls that modify a table’s

metadata or from jobs that modify a table’s content. If you have not

identified the source from where most update operations to a table’s metadata

are originating, do the following:

Identify API calls

- Go to the Google Cloud navigation menu and select Logging > Logs Explorer.

- Filter logs to view table operations by running the following query:

resource.type="bigquery_dataset"
protoPayload.resourceName="projects/my-project-id/datasets/my_dataset/tables/my_table"
(protoPayload.methodName="google.cloud.bigquery.v2.TableService.PatchTable" OR
protoPayload.methodName="google.cloud.bigquery.v2.TableService.UpdateTable" OR
protoPayload.methodName="google.cloud.bigquery.v2.TableService.InsertTable")

Identify jobs

The following query returns a list of jobs that modify the affected table in

the project. If you expect multiple projects in an organization

to write to the table, replace JOBS_BY_PROJECT with JOBS_BY_ORGANIZATION.

SELECT

job_id,

user_email,

query

#Adjust region

FROM `region-us`.INFORMATION_SCHEMA.JOBS_BY_PROJECT

#Adjust time

WHERE creation_time BETWEEN "2021-06-21 10:00:00" AND "2021-06-21 20:00:00"

AND destination_table.project_id = "my-project-id"

AND destination_table.dataset_id = "my_dataset"

AND destination_table.table_id = "my_table"

For more information, see BigQuery audit logs

overview.

Resolution

This quota cannot be increased. To resolve this quota error, do the following:

- Reduce the update rate for the table metadata.

- Add a delay between jobs or table operations to make sure that the update rate is within the limit.

- For data inserts or modification, consider using DML operations. DML operations are not affected by the Maximum rate of table metadata update operations per table rate limit. DML operations have other limits and quotas. For more information, see Using data manipulation language (DML).

- If you frequently load data from multiple small files stored in Cloud Storage and use a job per file, combine multiple load jobs into a single job. You can load from multiple Cloud Storage URIs with a comma-separated list (for example, gs://my_path/file_1,gs://my_path/file_2), or by using wildcards (for example, gs://my_path/*). For more information, see Batch loading data.

- If you use single-row queries (that is, INSERT statements) to write data to a table, consider batching multiple queries into one to reduce the number of jobs. BigQuery doesn’t perform well when used as a relational database, so executing single-row INSERT statements at a high rate is not a recommended practice.

- If you intend to insert data at a high rate, consider using the BigQuery Storage Write API. It is a recommended solution for high-performance data ingestion. The BigQuery Storage Write API has robust features, including exactly-once delivery semantics. To learn about limits and quotas, see Storage Write API. To see the costs of using this API, see BigQuery data ingestion pricing.
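One way to add a delay between jobs or table operations is a small client-side throttle that enforces a minimum interval between consecutive operations on the same table. The following Python sketch is illustrative only: the class name MinIntervalThrottle is hypothetical, and the interval you choose is an assumption that you would derive from the limit listed under Standard tables.

```python
import time
from typing import Callable, Optional


class MinIntervalThrottle:
    """Keep consecutive operations at least `min_interval` seconds apart.

    Hypothetical helper. The clock and sleep functions are injectable so
    the behavior can be checked without actually waiting.
    """

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if min_interval < 0:
            raise ValueError("min_interval must be non-negative")
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_start: Optional[float] = None

    def wait(self) -> float:
        """Sleep if the previous operation was too recent; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last_start is not None:
            delay = max(0.0, self._last_start + self._min_interval - now)
        if delay > 0:
            self._sleep(delay)
        # Record when this operation is allowed to start.
        self._last_start = now + delay
        return delay


if __name__ == "__main__":
    # Assumed interval for illustration; pick one that fits your table's limit.
    throttle = MinIntervalThrottle(min_interval=0.2)
    for job_number in range(3):
        slept = throttle.wait()
        # Submit the load, copy, or query job for the table here.
        print(f"job {job_number}: waited {slept:.2f}s before submitting")
```

A throttle like this only coordinates operations issued from one process. If several writers update the same table, they would need a shared mechanism, or the writes could be funneled through a single writer.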

Maximum number of API requests limit errors

BigQuery returns this error when you hit the rate limit for the

number of API requests to a BigQuery API per user per method—for

example, the tables.get

method calls from a service account, or the jobs.insert

method calls from a different user email.

For more information, see the Maximum number of API requests per second per

user per method rate limit in

All BigQuery API.

Error message

Too many API requests per user per method for this user_method

Diagnosis

If you have not identified the method that has reached this rate limit, do the

following:

For service account

- Go to the project that hosts the service account.

- In the Google Cloud console, go to the API Dashboard. For instructions on how to view the detailed usage information of an API, see Using the API Dashboard.

- In the API Dashboard, select BigQuery API.

- To view more detailed usage information, select Metrics, and then do the following:

  - For Select Graphs, select Traffic by API method.

  - Filter the chart by the service account’s credentials. You might see spikes for a method in the time range where you noticed the error.

For API calls

Some API calls log errors in BigQuery audit

logs in Cloud Logging. To identify the method that reached the limit, do the

following:

- In the Google Cloud console, go to the Google Cloud navigation menu and then select Logging > Logs Explorer for your project.

- Filter logs by running the following query:

resource.type="bigquery_resource"
protoPayload.authenticationInfo.principalEmail="<user email or service account>"
"Too many API requests per user per method for this user_method"

In the log entry, you can find the method name under the property protoPayload.method_name.

For more information, see BigQuery audit logs

overview.

Resolution

To resolve this quota error, do the following:

- Reduce the number of API requests or add a delay between multiple API requests so that the number of requests stays under this limit.

- If the limit is only exceeded occasionally, you can implement retries on this specific error with exponential backoff.

- If you frequently insert data, consider using streaming inserts because streaming inserts are not affected by the BigQuery API quota. However, the streaming inserts API has costs associated with it and has its own set of limits and quotas. To learn about the cost of streaming inserts, see BigQuery pricing.

- While loading data to BigQuery using Dataflow with the BigQuery I/O connector, you might encounter this error for the tables.get method. To resolve this issue, do the following:

  - Set the destination table’s create disposition to CREATE_NEVER. For more information, see Create disposition.

  - Use the Apache Beam SDK version 2.24.0 or higher. In the previous versions of the SDK, the CREATE_IF_NEEDED disposition calls the tables.get method to check if the table exists.

- You can request a quota increase by contacting support or sales. For additional quota, see Request a quota increase.

Requesting a quota increase might take several days to process. To provide more

information for your request, we recommend that

your request includes the priority of the job, the user running the query, and

the affected method.
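The retry suggestion above can be sketched in Python as follows. This is a minimal illustration, not a library API: the function call_with_backoff and its defaults are hypothetical, and it assumes the exception raised by your client includes the error message text shown earlier in this section, which you should confirm for the client you use.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: the client's exception text contains this message fragment.
RATE_LIMIT_TEXT = "Too many API requests per user per method"


def call_with_backoff(
    call: Callable[[], T],
    is_rate_limited: Callable[[Exception], bool] = (
        lambda exc: RATE_LIMIT_TEXT in str(exc)
    ),
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 32.0,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[float], float] = lambda d: random.uniform(0, d),
) -> T:
    """Retry `call` on rate-limit errors, doubling the delay cap each attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Re-raise unrelated errors immediately, and give up after the
            # final attempt instead of sleeping again.
            if not is_rate_limited(exc) or attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(jitter(delay))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_request() -> str:
        # Stand-in for an API call that is rate limited twice, then succeeds.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise RuntimeError(RATE_LIMIT_TEXT + " for this user_method")
        return "ok"

    print(call_with_backoff(flaky_request, base_delay=0.01))
```

The random jitter spreads retries from multiple clients so that they do not all retry at the same moment. Retrying only helps when the limit is exceeded occasionally; a sustained overage still requires reducing the request rate.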

Your project exceeded quota for free query bytes scanned

BigQuery returns this error when you run a query in the free

usage tier and the account reaches the monthly limit of data size that can be

queried. For more information about Queries (analysis), see Free

usage tier.

Error message

Your project exceeded quota for free query bytes scanned

Resolution

To continue using BigQuery, you need to upgrade the account to a

paid Cloud Billing account.

Maximum tabledata.list bytes per second per project quota errors

BigQuery returns this error when the project number mentioned

in the error message reaches the maximum size of data that can be read through

the tabledata.list API call in a project per second. For more information, see

Maximum tabledata.list bytes per minute.

Error message

Your project:[project number] exceeded quota for tabledata.list bytes per second per project

Resolution

To resolve this error, do the following:

- In general, we recommend trying to stay below this limit, for example by spacing out requests over a longer period with delays. If the error doesn’t happen frequently, implementing retries with exponential backoff solves this issue.

- If the use case expects fast and frequent reading of a large amount of data from a table, we recommend using the BigQuery Storage Read API instead of the tabledata.list API.

- If the preceding suggestions do not work, you can request a quota increase from the Google Cloud console API dashboard by doing the following:

  - Go to the Google Cloud console API dashboard.

  - In the dashboard, filter for Quota: Tabledata list bytes per minute (default quota).

  - Select the quota and follow the instructions in Requesting higher quota limit.

It might take several days to review and process the request.

Maximum number of copy jobs per day per project quota errors

BigQuery returns this error when the number of copy jobs running

in a project has exceeded the daily limit.

To learn more about the limit for Copy jobs per day, see Copy jobs.

Error message

Your project exceeded quota for copies per project

Diagnosis

If you’d like to gather more data about where the copy jobs are coming from,