I have a connection to a MS SQL Server 2012 database in classic ASP (VBScript). This is my connection string:

Provider=SQL Server Native Client 11.0;Server=localhost;

Database=databank;Uid=myuser;Pwd=mypassword;

When I execute this SQL command:

UPDATE [info] SET [stamp]='2014-03-18 01:00:02',

[data]='12533 characters goes here',

[saved]='2014-03-18 01:00:00',

[confirmed]=0,[ip]=0,[mode]=3,[rebuild]=0,

[updated]=1,[findable]=0

WHERE [ID]=193246;

I get the following error:

Microsoft SQL Server Native Client 11.0

error '80040e31'

Query timeout expired

/functions.asp, line 476

The SQL query is pretty long, the data field is updated with 12533 characters. The ID column is indexed so finding the post with ID 193246 should be fast.

When I execute the exact same SQL expression (copied and pasted) on SQL Server Management Studio it completes successfully in no time. No problem what so ever. So there isn’t a problem with the SQL itself. I’ve even tried using a ADODB.Recordset object and update via that (no self-written SQL) but I still get the same timeout error.

If I go to Tools > Options > Query Execution in the Management Studio I see that execution time-out is set to 0 (infinite). Under Tools > Options > Designers I see that transaction time-out is set to 30 seconds, which should be plenty enough since the script and database is on the same computer («localhost» is in the connection string).

What is going on here? Why can I execute the SQL in the Management Studio but not in my ASP code?

Edit: Tried setting the 30 sec timeout in the Designers tab to 600 sec just to make sure, but I still get the same error (happens after 30 sec of page loading btw).

Here is the code that I use to execute the SQL on the ASP page:

Set Conn = Server.CreateObject("ADODB.Connection")

Conn.Open "Provider=SQL Server Native Client 11.0;

Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;"

Conn.Execute "UPDATE [info] SET [stamp]='2014-03-18 01:00:02',

[data]='12533 characters goes here',[saved]='2014-03-18 01:00:00',

[confirmed]=0,[ip]=0,[mode]=3,[rebuild]=0,[updated]=1,[findable]=0

WHERE [ID]=193246;"

Edit 2: Using Conn.CommandTimeout = 0 to give infinite execution time for the query does nothing, it just makes the query execute forever. Waited 25 min and it was still executing.

I then tried to separate the SQL into two SQL statements, the long data update in one and the other updates in the other. It still wouldn’t update the long data field, just got timeout.

I tried this with two additional connection strings:

Driver={SQL Server};Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;

Driver={SQL Server Native Client 11.0};Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;

Didn’t work. I even tried changing the data to 12533 A’s just to see if the actual data was causing the problem. Nope, same problem.

Then I found out something interesting: I tried to execute the short SQL first, before the long update of the data field. It ALSO got query timeout exception…

But why? It has so little stuff to update in it (the whole SQL statement is less than 200 characters). Will investigate further.

Edit 3: I thought it might have been something to do with the login but I didn’t find anything that looked wrong. I even tried changing the connection string to use the sa-account but even that didn’t work, still getting «Query timeout expired».

This is driving me mad. There is no solution, no workaround and worst of all no ideas!

Edit 4: Went to Tools > Options > Designers in the Management Studio and ticked off the «Prevent saving changes that require table re-creation». It did nothing.

Tried changing the «data» column data type from «nvarchar(MAX)» to the inferior «ntext» type (I’m getting desperate). It didn’t work.

Tried executing the smallest change on the post I could think of:

UPDATE [info] SET [confirmed]=0 WHERE [ID]=193246;

That would set a bit column to false. Didn’t work. I tried executing the exact same query in the Management Studio and it worked flawlessly.

Throw me some ideas if you have got them because I’m running out for real now.

Edit 5: Have now also tried the following connection string:

Provider=SQLOLEDB.1;Password=mypassword;Persist Security Info=True;User ID=myuser;Initial Catalog=databank;Data Source=localhost

Didn’t work. Only tried to set confirmed to false but still got a time out.

Edit 6: Have now attempted to update a different post in the same table:

UPDATE [info] SET [confirmed]=0 WHERE [ID]=1;

It also gave the timeout error. So now we know it isn’t post specific.

I am able to update posts in other tables in the same «databank» database via ASP. I can also update tables in other databases on localhost.

Could there be something broken with the [info] table? I used the MS Access wizard to auto move data from Access to MS SQL Server 2012, it created columns of data type «ntext» and I manually went and changed that to «nvarchar(MAX)» since ntext is deprecated. Could something have broken down? It did require me to re-create the table when I changed the data type.

I have to get some sleep but I will be sure to check back tomorrow if anybody has responded to me. Please do, even if you only have something encouraging to say.

Edit 7: Quick edit before bed. Tried to define the provider as «SQLNCLI11» in the connection string as well (using the DLL name instead of the actual provider name). It makes no difference. Connection is created just as fine but the timeout still happens.

Also I’m not using MS SQL Server 2012 Express (as far as I know, «Express» wasn’t mentioned anywhere during installation). It’s the full thing.

If it helps, here’s the «Help» > «About…» info that is given by the Management Studio:

Microsoft SQL Server Management Studio: 11.0.2100.60

Microsoft Analysis Services Client Tools: 11.0.2100.60

Microsoft Data Access Components (MDAC): 6.3.9600.16384

Microsoft MSXML: 3.0 5.0 6.0

Microsoft Internet Explorer: 9.11.9600.16521

Microsoft .NET Framework: 4.0.30319.34011

Operating System: 6.3.9600

Edit 8 (also known as the «programmers never sleep» edit):

After trying some things I eventually tried to close the database connection and reopening it right before executing the SQL statements. It worked all of a sudden. What the…?

I have had my code inside a subroutine and it turns out that outside of it the post that I was trying to update was already opened! So the reason for the timeout was that the post or the whole table was locked by the very same connection that tried to update it. So the connection (or CPU thread) was waiting for a lock that would never unlock.

Hate it when it turns out to be so simple after trying so hard.

The post had been opened outside the subroutine by this simple code:

Set RecSet = Conn.Execute("SELECT etc")

I just added the following before calling the subroutine.

RecSet.Close

Set RecSet = Nothing

The reason why this never crossed my mind is simply because this was allowed in MS Access but now I have changed to MS SQL Server and it wasn’t so kind (or sloppy, rather). The created RecSet by Conn.Execute() had never created a locked post in the database before but now all of a sudden it did. Not too strange since the connection string and the actual database had changed.

I hope this post saves someone else some headache if you are migrating from MS Access to MS SQL Server. Though I can’t imagine there are that many Access users left in the world nowadays.

- Remove From My Forums

-

Question

-

Hi

I have a simple query for update table (30 columns). That table has a trigger to log canges to another table. This query I called from another update query more then 10 000 times. And if I do this i have a timeout. Message is:

Microsoft OLE DB Provider for ODBC Drivers

error ‘80040e31’[Microsoft][ODBC SQL Server Driver]Query timeout expired

How can I optimize a logic to avoid timeout?

Thank you

-

Moved by

Monday, March 28, 2011 6:21 PM

TSQL question (From:SQL Server Database Engine)

-

Moved by

Answers

-

1. There is a typo in the trigger (double .. when you call the function). Also, add TOP 1 and ORDER BY in these statements — you probably want to get the latest inserted time or latest deletion.

2. Why do you use ON 1=1 condition to JOIN with Inserted and Deleted tables? This type of JOIN produces a CROSS JOIN, no wonder you got timeouts.

This is my attempt to re-write:

declare @operationType nvarchar(1) declare @createDate datetime declare @UpdatedColumnsMask varbinary(500) = COLUMNS_UPDATED() -- detect operation type if not exists(select top 1 * from inserted) begin -- delete SET @operationType = 'D' SELECT top (1) @createDate = dbo.uf_DateWithCompTimeZone(CompanyId) FROM deleted ORDER BY CompanyID DESC end else if not exists(select top 1 * from deleted) begin -- insert SET @operationType = 'I' SELECT TOP (1) @createDate = dbo.uf_DateWithCompTimeZone(CompanyId) FROM inserted ORDER BY CompanyID DESC end else begin -- update (or MERGE) SET @operationType = 'U' SELECT TOP(1) @createDate = dbo.uf_DateWithCompTimeZone(CompanyId) FROM inserted ORDER BY CompanyID DESC end -- log data to tmp table INSERT INTO tbl1 SELECT @createDate, @operationType, @status, @updatedColumnsMask, d.F1, i.F1, d.F2, i.F2, d.F3, i.F3, d.F4, i.F4, d.F5, i.F5, ... FROM inserted i FULL JOIN deleted d on i.PK = d.PK END

For every expert, there is an equal and opposite expert. — Becker’s Law

Naomi Nosonovsky, Sr. Programmer-Analyst

My blog

-

Proposed as answer by

Anton Palyok

Wednesday, March 30, 2011 7:42 AM -

Marked as answer by

Kalman Toth

Sunday, April 3, 2011 9:41 PM

-

Proposed as answer by

The old SQL Server RAISERROR-hack does the trick for me.

RAISERROR(N'', 0, 1) WITH NOWAIT

If you can call it in your remote code more often than those default 600 seconds (10 minutes) then you can use it.

It just forces buffer flushing and this is enough to overcome your remote query timeout limitation.

In details. I have a stored procedure on a SQL Server instance. That instance is linked.

This procedure calls (especially in loops)

PRINT CONCAT('Buffer spamming to prevent "Query timeout expired"; at ', CONVERT(VARCHAR(12),GETDATE(),114))

RAISERROR(N'', 0, 1) WITH NOWAIT

Then I invoke this procedure through the Linked Server feature on another SQL Server instance.

EXECUTE MyNamedLinkedServer.MyDb.MyScheme.MyRemoteStoredProcedure

Warning! It is not tested in SQL Server 2019.

Basically we have two weird options in this situation.

First is to remember to configure remote query timeout

EXECUTE sp_configure 'remote query timeout', <your_value_seconds>;

for every new DB instance.

Other is to remember to use the RAISERROR hack.

Data volumes are changing, right people may change their job. Sooner or later you’ll get into this trouble in any way.

Sadly to say but we can’t set the remote query timeout on connection or session levels. IMHO

In current circumstances and for a robustness I would implement some queue analogue in SQL Server. I mean that I request some work and put parameters through the Linked Server feature. Later I check for results, periodically.

On the other weird hand, I have procedures that do not use RAISERROR hack, at all. But they always work stable for hours.

I did my best trying to realise what is going on there.

I can say that they have almost no SET NOCOUNT ON and their loops do not hung for a long time and sub-procedures’ calls are no longer than 10 minutes.

Skip to content

If you ever use linked servers to run queries on a remote database server, you may run into this issue if your queries take longer than 10 minutes: OLE DB provider “SQLNCLI11” for linked server xxxxx returned message “Query timeout expired” [SQLSTATE 01000] (Message 7412)

Below is a screenshot (sorry so small) of what I found in my job history while reviewing the logs. It is somewhat deceiving because this timeout does not cause an error, but is considered a warning. We found it because our overnight jobs were missing data, but no errors were found, actually looking through the job history showed what the issue was. There are ways to program your T-Sql to actually throw an error if it times out, but we needed to have the job run no matter how long it took so that is why we increased the timeout value.

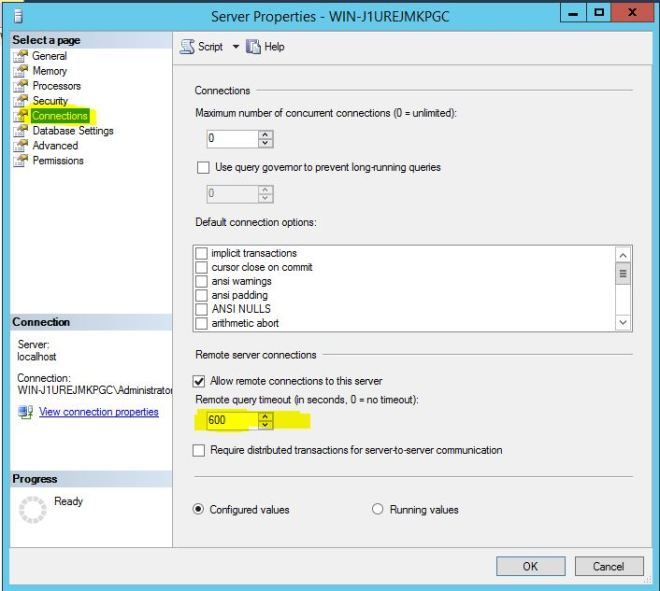

The default query timeout is 10 minutes (600 seconds). To change this setting, all you need to do is right click on your instance in SSMS and click properties.

On the Connections page, there is a setting called “remote query timeout” which defaults to 600 seconds. On my server, I increased the time to 1800 seconds which is 30 minutes. For your environment, you need to calculate the longest running query that you think you may have and then add a little extra. Here is a link from Books Online on more information about the setting:

https://technet.microsoft.com/en-us/library/ms177457(v=sql.105).aspx

If you ever get the remote query timeout alert, changing this setting will allow you to run remote queries longer than 10 minutes.

I have a connection to a MS SQL Server 2012 database in classic ASP (VBScript). This is my connection string:

Provider=SQL Server Native Client 11.0;Server=localhost;

Database=databank;Uid=myuser;Pwd=mypassword;

When I execute this SQL command:

UPDATE [info] SET [stamp]='2014-03-18 01:00:02',

[data]='12533 characters goes here',

[saved]='2014-03-18 01:00:00',

[confirmed]=0,[ip]=0,[mode]=3,[rebuild]=0,

[updated]=1,[findable]=0

WHERE [ID]=193246;

I get the following error:

Microsoft SQL Server Native Client 11.0

error '80040e31'

Query timeout expired

/functions.asp, line 476

The SQL query is pretty long, the data field is updated with 12533 characters. The ID column is indexed so finding the post with ID 193246 should be fast.

When I execute the exact same SQL expression (copied and pasted) on SQL Server Management Studio it completes successfully in no time. No problem what so ever. So there isn’t a problem with the SQL itself. I’ve even tried using a ADODB.Recordset object and update via that (no self-written SQL) but I still get the same timeout error.

If I go to Tools > Options > Query Execution in the Management Studio I see that execution time-out is set to 0 (infinite). Under Tools > Options > Designers I see that transaction time-out is set to 30 seconds, which should be plenty enough since the script and database is on the same computer («localhost» is in the connection string).

What is going on here? Why can I execute the SQL in the Management Studio but not in my ASP code?

Edit: Tried setting the 30 sec timeout in the Designers tab to 600 sec just to make sure, but I still get the same error (happens after 30 sec of page loading btw).

Here is the code that I use to execute the SQL on the ASP page:

Set Conn = Server.CreateObject("ADODB.Connection")

Conn.Open "Provider=SQL Server Native Client 11.0;

Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;"

Conn.Execute "UPDATE [info] SET [stamp]='2014-03-18 01:00:02',

[data]='12533 characters goes here',[saved]='2014-03-18 01:00:00',

[confirmed]=0,[ip]=0,[mode]=3,[rebuild]=0,[updated]=1,[findable]=0

WHERE [ID]=193246;"

Edit 2: Using Conn.CommandTimeout = 0 to give infinite execution time for the query does nothing, it just makes the query execute forever. Waited 25 min and it was still executing.

I then tried to separate the SQL into two SQL statements, the long data update in one and the other updates in the other. It still wouldn’t update the long data field, just got timeout.

I tried this with two additional connection strings:

Driver={SQL Server};Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;

Driver={SQL Server Native Client 11.0};Server=localhost;Database=databank;Uid=myuser;Pwd=mypassword;

Didn’t work. I even tried changing the data to 12533 A’s just to see if the actual data was causing the problem. Nope, same problem.

Then I found out something interesting: I tried to execute the short SQL first, before the long update of the data field. It ALSO got query timeout exception…

But why? It has so little stuff to update in it (the whole SQL statement is less than 200 characters). Will investigate further.

Edit 3: I thought it might have been something to do with the login but I didn’t find anything that looked wrong. I even tried changing the connection string to use the sa-account but even that didn’t work, still getting «Query timeout expired».

This is driving me mad. There is no solution, no workaround and worst of all no ideas!

Edit 4: Went to Tools > Options > Designers in the Management Studio and ticked off the «Prevent saving changes that require table re-creation». It did nothing.

Tried changing the «data» column data type from «nvarchar(MAX)» to the inferior «ntext» type (I’m getting desperate). It didn’t work.

Tried executing the smallest change on the post I could think of:

UPDATE [info] SET [confirmed]=0 WHERE [ID]=193246;

That would set a bit column to false. Didn’t work. I tried executing the exact same query in the Management Studio and it worked flawlessly.

Throw me some ideas if you have got them because I’m running out for real now.

Edit 5: Have now also tried the following connection string:

Provider=SQLOLEDB.1;Password=mypassword;Persist Security Info=True;User ID=myuser;Initial Catalog=databank;Data Source=localhost

Didn’t work. Only tried to set confirmed to false but still got a time out.

Edit 6: Have now attempted to update a different post in the same table:

UPDATE [info] SET [confirmed]=0 WHERE [ID]=1;

It also gave the timeout error. So now we know it isn’t post specific.

I am able to update posts in other tables in the same «databank» database via ASP. I can also update tables in other databases on localhost.

Could there be something broken with the [info] table? I used the MS Access wizard to auto move data from Access to MS SQL Server 2012, it created columns of data type «ntext» and I manually went and changed that to «nvarchar(MAX)» since ntext is deprecated. Could something have broken down? It did require me to re-create the table when I changed the data type.

I have to get some sleep but I will be sure to check back tomorrow if anybody has responded to me. Please do, even if you only have something encouraging to say.

Edit 7: Quick edit before bed. Tried to define the provider as «SQLNCLI11» in the connection string as well (using the DLL name instead of the actual provider name). It makes no difference. Connection is created just as fine but the timeout still happens.

Also I’m not using MS SQL Server 2012 Express (as far as I know, «Express» wasn’t mentioned anywhere during installation). It’s the full thing.

If it helps, here’s the «Help» > «About…» info that is given by the Management Studio:

Microsoft SQL Server Management Studio: 11.0.2100.60

Microsoft Analysis Services Client Tools: 11.0.2100.60

Microsoft Data Access Components (MDAC): 6.3.9600.16384

Microsoft MSXML: 3.0 5.0 6.0

Microsoft Internet Explorer: 9.11.9600.16521

Microsoft .NET Framework: 4.0.30319.34011

Operating System: 6.3.9600

Edit 8 (also known as the «programmers never sleep» edit):

After trying some things I eventually tried to close the database connection and reopening it right before executing the SQL statements. It worked all of a sudden. What the…?

I have had my code inside a subroutine and it turns out that outside of it the post that I was trying to update was already opened! So the reason for the timeout was that the post or the whole table was locked by the very same connection that tried to update it. So the connection (or CPU thread) was waiting for a lock that would never unlock.

Hate it when it turns out to be so simple after trying so hard.

The post had been opened outside the subroutine by this simple code:

Set RecSet = Conn.Execute("SELECT etc")

I just added the following before calling the subroutine.

RecSet.Close

Set RecSet = Nothing

The reason why this never crossed my mind is simply because this was allowed in MS Access but now I have changed to MS SQL Server and it wasn’t so kind (or sloppy, rather). The created RecSet by Conn.Execute() had never created a locked post in the database before but now all of a sudden it did. Not too strange since the connection string and the actual database had changed.

I hope this post saves someone else some headache if you are migrating from MS Access to MS SQL Server. Though I can’t imagine there are that many Access users left in the world nowadays.

SQL Server provides you with a good solution to automate a lot of your administrative tasks using the SQL Server Agent jobs. These jobs are handled from the operating system side by a Windows service that is responsible for executing these jobs and feeding the SQL Server systems tables with the metadata about these jobs. The system database that is used by the SQL Server Agent for the job management called the msdb database. All information related to the job steps, schedules and the history can be found in the msdb database tables. The msdb system database is also responsible for the SQL Server Mail, Service Broker, SQL Server Maintenance Plans and the databases backup history.

The msdb database tables that store information about the SQL Server Agent jobs are: the dbo.sysjobactivity which contains information about the SQL Server Agent job activates, the dbo.sysjobhistory table that keeps information about the execution history for the agent jobs , the dbo.sysjobs table that contains information about all the SQL Agent jobs, the dbo.sysjobschedules table that stores information about the schedules for all SQL Agent job, the dbo.sysjobservers table that contains the association of the SQL Agent jobs with the target servers, the dbo.sysjobsteps table that stores information about the SQL Agent jobs steps and the dbo.sysjobstepslogs table that stores logs about the SQL Agent jobs steps.

There are three main database fixed roles in the msdb database that control the access to the SQL Server Agent. The first role is SQLAgentUserRole that is least privileged one in the msdb database. This role members have access on the local jobs and local job schedules that they owned only. This role members can see only the Jobs node from the SQL Server Agent.

The second role is SQLAgentReaderRole, that members have access to see all the jobs and job schedules they owned and the ones they don’t own. But they can’t change on the jobs and schedules that they don’t own. This role members can see only the Jobs node from the SQL Server Agent.

The last role is SQLAgentOperatorRole, which is the most privileged role in the msdb database. The members of this role can start and stop the execution of the local jobs, enable and disable it, and they have the ability to delete the jobs history for these local jobs. But they are not able to change in the jobs that they don’t own. This role members can see all the SQL Server Agent nodex except the Error Logs one.

How could we get the correct job result on the step level?

One of the DBA’s daily tasks is checking the SQL Server Agent jobs that are scheduled at night and report back any failure to the corresponding system owners. A system owner reported to me that her scheduled job failed last night even though the evidence indicates that it was completed successfully. To get to the bottom of this I went through the job steps, and I found out that the job contains remote query that is using linked server and it gave Query Timeout Expired error.

Doing the below steps, I was under the impression that the system owner’s job was completed successfully, even though at later stage I found out the opposite.

The result of the SQL server agent jobs can be checked by drilling down the job to View History as below:

The job history window will be displayed showing valuable information about the last few runs of the chosen scheduled job including Date, Server, Job Name, Result Message and the Duration in seconds:

As shown in the snapshot above, the last run of our job succeeded. But the system’s owner complained that the job failed?

Let’s expand the job log to show the result of each step as below:

The step’s result still misleading us by showing the green tick sign beside the step, showing that the step also completed successfully. Going through the step result message carefully, we are shocked with this message: “Query timeout expired». [SQLSTATE 01000] (Message 7412). The step succeeded.”. This means that the query duration exceeded the server’s remote query timeout duration, raising this message from the SQL linked server OLE DB provider.

SQL Server remote query is a query that contains outgoing connection to a remote database. A SQL server parameter called remote query timeout is used to decide how long a remote query will take before initiating timeout message. The default remote query timeout value is 600 seconds. Setting the value to 0 will disable the timeout, so the query will wait until it is canceled.

If there is a network latency between you server and the remote SQL server, you can change this value to resolve the query timeout issue from the Connections tab of the Server Properties dialog box below:

Our main concern here, how we could have an automated way to trace the query timeout message within the SQL Agent job steps result in our case, or any failure message in your case, in order to get a better indication of the job status.

Let’s try first to view the job steps result that contains query timeout message, which is stored in the sysjobhistory and sysjobs tables from the msdb system database:

|

USE msdb Go SELECT JS.name AS JobName, JH.step_name AS StepName, JH.message AS StepMessage, JH.run_duration AS StepDuration, JH.run_date AS TS FROM sysjobhistory JH INNER JOIN sysjobs JS ON JS.job_id = JH.job_id WHERE JH.message LIKE ‘%Query timeout expired%’ ORDER BY JH.run_date desc GO |

Executing the query, the result will be as follows:

To be initiative and to avoid future complains related to the same problem, it is better to automate this process and review it as a part of your daily check. To achieve that, we will create a table that will host the query results, modify the previous script to fill that table with the previous day’s jobs result.

Let’s start with the table creation using the simple T-SQL script below:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

USE [SQLShackDemo] GO SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE [dbo].[JobStepsHistory]( [JobName] [nvarchar](50) NULL, [StepName] [nvarchar](50) NULL, [StepMessage] [nvarchar](max) NULL, [StepDuration] [int] NULL, [TS] [datetime] NULL ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY] GO |

Once the table is ready, we will modify our script to insert into that table, any query timeout message during the last day. It is better to schedule the below script as a SQL Agent job that will be run the first thing in the morning, to make sure that all the scheduled jobs are finished:

|

USE [SQLShackDemo] GO INSERT INTO DBO.JobStepsHistory SELECT JS.name, JH.step_name, JH.message, JH.run_duration, JH.run_date FROM MSDB.DBO.sysjobhistory JH INNER JOIN MSDB.DBO.sysjobs JS ON JS.job_id = JH.job_id WHERE JH.message LIKE ‘%Query timeout expired%’ AND datepart (day,CONVERT(CHAR(10), CAST(STR(JH.run_date,8, 0) AS dateTIME), 111)) = datepart(day,getdate()—1) ORDER BY JH.run_date desc GO |

What is required from your side now, is a simple select statement from that table, every morning, to make sure that no query timeout occurred last night on your scheduled jobs. Also you can have more advanced automated way to send you the content of that table by email. You can also modify the previous script to trace any type of misleading messages you may face by replacing the query timeout message in LIKE statement inside WHERE clause.

You can simulate a test scenario for the “Query Timeout Expired” problem by creating a SQL stored procedure in the remote database, including WAITFOR delay exceeding the default 10 minutes.

- Author

- Recent Posts

Ahmad Yaseen is a Microsoft Big Data engineer with deep knowledge and experience in SQL BI, SQL Server Database Administration and Development fields.

He is a Microsoft Certified Solution Expert in Data Management and Analytics, Microsoft Certified Solution Associate in SQL Database Administration and Development, Azure Developer Associate and Microsoft Certified Trainer.

Also, he is contributing with his SQL tips in many blogs.

View all posts by Ahmad Yaseen

The old SQL Server RAISERROR-hack does the trick for me.

RAISERROR(N'', 0, 1) WITH NOWAIT

If you can call it in your remote code more often than those default 600 seconds (10 minutes) then you can use it.

It just forces buffer flushing and this is enough to overcome your remote query timeout limitation.

In details. I have a stored procedure on a SQL Server instance. That instance is linked.

This procedure calls (especially in loops)

PRINT CONCAT('Buffer spamming to prevent "Query timeout expired"; at ', CONVERT(VARCHAR(12),GETDATE(),114))

RAISERROR(N'', 0, 1) WITH NOWAIT

Then I invoke this procedure through the Linked Server feature on another SQL Server instance.

EXECUTE MyNamedLinkedServer.MyDb.MyScheme.MyRemoteStoredProcedure

Warning! It is not tested in SQL Server 2019.

Basically we have two weird options in this situation.

First is to remember to configure remote query timeout

EXECUTE sp_configure 'remote query timeout', <your_value_seconds>;

for every new DB instance.

Other is to remember to use the RAISERROR hack.

Data volumes are changing, right people may change their job. Sooner or later you’ll get into this trouble in any way.

Sadly to say but we can’t set the remote query timeout on connection or session levels. IMHO

In current circumstances and for a robustness I would implement some queue analogue in SQL Server. I mean that I request some work and put parameters through the Linked Server feature. Later I check for results, periodically.

On the other weird hand, I have procedures that do not use RAISERROR hack, at all. But they always work stable for hours.

I did my best trying to realise what is going on there.

I can say that they have almost no SET NOCOUNT ON and their loops do not hung for a long time and sub-procedures’ calls are no longer than 10 minutes.