I am printing Python exception messages to a log file with logging.error:

import logging

try:
    1/0
except ZeroDivisionError as e:
    logging.error(e)  # ERROR:root:division by zero

Is it possible to print more detailed information about the exception and the code that generated it than just the exception string? Things like line numbers or stack traces would be great.

asked Mar 4, 2011 at 9:21

logger.exception will output a stack trace alongside the error message.

For example:

import logging

try:
    1/0
except ZeroDivisionError:
    logging.exception("message")

Output:

ERROR:root:message
Traceback (most recent call last):
  File "<stdin>", line 2, in <module>
ZeroDivisionError: integer division or modulo by zero

@Paulo Cheque notes, «be aware that in Python 3 you must call the logging.exception method just inside the except part. If you call this method in an arbitrary place you may get a bizarre exception. The docs alert about that.»

GG.

answered Mar 4, 2011 at 9:25
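To check what logging.exception actually records, here is a minimal sketch that sends a dedicated logger to an in-memory buffer instead of stderr. The logger name "demo", the io.StringIO target and the format string are assumptions chosen for illustration. The captured text contains the message followed by the traceback, including the file name and line number of the failing statement.

```python
import io
import logging

# Assumption: a dedicated logger named "demo" writing to an in-memory buffer,
# so the output can be inspected instead of going to stderr.
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(levelname)s:%(name)s:%(message)s"))
logger = logging.getLogger("demo")
logger.addHandler(handler)
logger.propagate = False  # keep the record away from the root logger's handlers

try:
    1 / 0
except ZeroDivisionError:
    # exception() logs at ERROR level and appends the current traceback
    logger.exception("message")

output = buffer.getvalue()
print(output)
```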

Using the exc_info option may be better, because it lets you choose the log level (logging.exception always logs at the ERROR level):

import logging

try:
    1/0  # do something here
except Exception as e:
    logging.critical(e, exc_info=True)  # log exception info at CRITICAL log level

ti7

answered Apr 10, 2015 at 8:01

flycee
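The exc_info flag works with every level method, so the traceback can be attached to a WARNING just as easily as to a CRITICAL record. Here is a minimal sketch, assuming a hypothetical logger named "exc_info_demo" that writes to an in-memory buffer:

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))
logger = logging.getLogger("exc_info_demo")  # hypothetical logger name
logger.addHandler(handler)
logger.propagate = False  # don't pass the record on to the root logger

try:
    int("not a number")
except ValueError as e:
    # exc_info=True attaches the current traceback at whatever level you pick
    logger.warning("could not parse input: %s", e, exc_info=True)

output = buffer.getvalue()
print(output)
```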

One nice thing about logging.exception that SiggyF’s answer doesn’t show is that you can pass in an arbitrary message, and logging will still show the full traceback with all the exception details:

import logging

try:
    1/0
except ZeroDivisionError:
    logging.exception("Deliberate divide by zero traceback")

With the default (in recent versions) logging behaviour of just printing errors to sys.stderr, it looks like this:

>>> import logging
>>> try:
...     1/0
... except ZeroDivisionError:
...     logging.exception("Deliberate divide by zero traceback")
...
ERROR:root:Deliberate divide by zero traceback
Traceback (most recent call last):
  File "<stdin>", line 2, in <module>
ZeroDivisionError: integer division or modulo by zero

Stevoisiak

answered Jul 1, 2013 at 4:34

ncoghlan

Quoting:

«What if your application does logging some other way, not using the logging module?»

In that case, the traceback module can be used:

import traceback

def log_traceback(ex, ex_traceback=None):
    if ex_traceback is None:
        ex_traceback = ex.__traceback__
    tb_lines = [line.rstrip('\n') for line in
                traceback.format_exception(ex.__class__, ex, ex_traceback)]
    # exception_logger stands for your application's own (non-logging) logger
    exception_logger.log(tb_lines)

Use it in Python 2:

import sys

try:
    x = get_number()  # your function call is here
except Exception as ex:
    _, _, ex_traceback = sys.exc_info()
    log_traceback(ex, ex_traceback)

Use it in Python 3:

try:
    x = get_number()
except Exception as ex:
    log_traceback(ex)

answered Oct 19, 2015 at 10:21

zangw
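On Python 3, the same list of lines can also be produced with traceback.TracebackException.from_exception, which reads ex.__traceback__ by itself. This is a sketch of that alternative, not the answer's code. format_traceback_lines and get_number are hypothetical names, and print stands in for whatever non-logging sink your application uses.

```python
import traceback


def format_traceback_lines(ex):
    """Return the traceback of `ex` as a list of lines without trailing newlines."""
    # from_exception() takes the traceback from ex.__traceback__ (Python 3.5+)
    te = traceback.TracebackException.from_exception(ex)
    lines = []
    for chunk in te.format():
        # a single formatted chunk can contain several physical lines
        lines.extend(chunk.rstrip("\n").splitlines())
    return lines


def get_number():  # hypothetical function that fails
    return int("x")


try:
    get_number()
except Exception as ex:
    tb_lines = format_traceback_lines(ex)

for line in tb_lines:
    print(line)  # stand-in for your application's own log sink
```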

You can log the stack trace without an exception.

https://docs.python.org/3/library/logging.html#logging.Logger.debug

The second optional keyword argument is stack_info, which defaults to False. If true, stack information is added to the logging message, including the actual logging call. Note that this is not the same stack information as that displayed through specifying exc_info: The former is stack frames from the bottom of the stack up to the logging call in the current thread, whereas the latter is information about stack frames which have been unwound, following an exception, while searching for exception handlers.

Example:

>>> import logging
>>> logging.basicConfig(level=logging.DEBUG)
>>> logging.getLogger().info('This prints the stack', stack_info=True)
INFO:root:This prints the stack
Stack (most recent call last):
  File "<stdin>", line 1, in <module>
>>>

answered Dec 3, 2019 at 10:01

Baczek
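Here is a small sketch of the stack_info behaviour described above. It assumes a hypothetical "stack_demo" logger that writes to an in-memory buffer. No exception is involved, yet the record ends with the chain of calls (outer, then inner) that led to the logging call.

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)  # default format is just "%(message)s"
logger = logging.getLogger("stack_demo")  # hypothetical logger name
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False


def inner():
    # No exception here: stack_info=True records the call stack up to this call
    logger.info("This prints the stack", stack_info=True)


def outer():
    inner()


outer()
output = buffer.getvalue()
print(output)
```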

If you use plain-text logs, all your log records should follow one rule: one record = one line. If you follow this rule, you can use grep and other tools to process your log files.

But traceback information is multi-line. So my answer is an extended version of the solution proposed by zangw above in this thread. The problem is that traceback lines can contain \n inside them, so we need to do some extra work to get rid of those line endings:

import logging
import traceback

logger = logging.getLogger('your_logger_here')

def log_app_error(e: BaseException, level=logging.ERROR) -> None:
    e_traceback = traceback.format_exception(e.__class__, e, e.__traceback__)
    traceback_lines = []
    for line in [line.rstrip('\n') for line in e_traceback]:
        # one formatted entry can itself contain several lines
        traceback_lines.extend(line.splitlines())
    # log the whole list as a single line, e.g. ['Traceback ...', '  File ...']
    logger.log(level, str(traceback_lines))

Later, when you are analyzing your logs, you can copy and paste the required traceback lines from your log file and do this:

ex_traceback = ['line 1', 'line 2', ...]
for line in ex_traceback:
    print(line)

Profit!

answered Nov 4, 2016 at 17:32

doomatel
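A different technique for the same one-record-per-line goal is to escape the newlines in a Formatter subclass, so that logger.exception can be used unchanged. This is a swapped-in approach, not the answer's. SingleLineFormatter and the logger name are hypothetical, and the escaped \n sequences need to be turned back into newlines when you read the log.

```python
import io
import logging


class SingleLineFormatter(logging.Formatter):
    """Escape newlines so that every record, traceback included, is one line."""

    def format(self, record):
        # super().format() appends the formatted traceback when exc_info is set
        return super().format(record).replace("\n", "\\n")


buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(SingleLineFormatter("%(levelname)s:%(name)s:%(message)s"))
logger = logging.getLogger("single_line_demo")  # hypothetical logger name
logger.addHandler(handler)
logger.propagate = False

try:
    1 / 0
except ZeroDivisionError:
    logger.exception("division failed")

output = buffer.getvalue()
print(output)
```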

This answer builds on the excellent ones above.

In most applications, you won't be calling logging.exception(e) directly. Most likely you have defined a custom logger specific to your application or module, like this:

import logging
import graypy  # third-party package for sending logs to Graylog

# Set the name of the app or module
my_logger = logging.getLogger('NEM Sequencer')
# Set the log level
my_logger.setLevel(logging.INFO)

# Let's say we want to be fancy and log to a graylog2 log server
# (newer graypy releases call this handler GELFUDPHandler)
graylog_handler = graypy.GELFHandler('some_server_ip', 12201)
graylog_handler.setLevel(logging.INFO)
my_logger.addHandler(graylog_handler)

In this case, just call exception(e) on that logger, like this:

try:
    1/0
except ZeroDivisionError as e:
    my_logger.exception(e)

Stevoisiak

answered Apr 16, 2015 at 13:38

Will

If «debugging information» means the values of the variables at the moment the exception was raised, then logging.exception(...) won't help. You'll need a tool that automatically logs all variable values along with the traceback lines.

Out of the box, you'll get a log like this:

2020-03-30 18:24:31 main ERROR File "./temp.py", line 13, in get_ratio
2020-03-30 18:24:31 main ERROR return height / width
2020-03-30 18:24:31 main ERROR height = 300
2020-03-30 18:24:31 main ERROR width = 0
2020-03-30 18:24:31 main ERROR builtins.ZeroDivisionError: division by zero

Have a look at some PyPI tools. I'd name:

- tbvaccine

- traceback-with-variables

- better-exceptions

Some of them also give you pretty crash messages, and you might find more on PyPI.

answered Nov 4, 2020 at 22:22
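If adding a dependency is not an option, the standard library can capture variable values too. traceback.TracebackException accepts capture_locals=True and then prints each frame's local variables (as repr strings) under its source line. Here is a minimal sketch that reuses the get_ratio example from the log above as a hypothetical function:

```python
import traceback


def get_ratio(height, width):
    return height / width


def report_for_failure():
    try:
        get_ratio(300, 0)
    except ZeroDivisionError as e:
        # capture_locals=True stores repr() of every local variable in each frame
        te = traceback.TracebackException.from_exception(e, capture_locals=True)
        return "".join(te.format())


report = report_for_failure()
print(report)
```

The try block is inside a function on purpose. At module level the captured locals would be all module globals, which makes the report noisy.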

A little bit of decorator treatment (very loosely inspired by the Maybe monad and lifting). You can safely remove Python 3.6 type annotations and use an older message formatting style.

fallible.py

from functools import wraps
from typing import Callable, TypeVar, Optional
import logging

A = TypeVar('A')


def fallible(*exceptions, logger=None) \
        -> Callable[[Callable[..., A]], Callable[..., Optional[A]]]:
    """
    :param exceptions: a list of exceptions to catch
    :param logger: pass a custom logger; None means the default logger,
                   False disables logging altogether.
    """
    def fwrap(f: Callable[..., A]) -> Callable[..., Optional[A]]:

        @wraps(f)
        def wrapped(*args, **kwargs):
            try:
                return f(*args, **kwargs)
            except exceptions:
                message = f'called {f} with *args={args} and **kwargs={kwargs}'
                if logger:
                    logger.exception(message)
                if logger is None:
                    logging.exception(message)
                return None

        return wrapped

    return fwrap

Demo:

In [1]: from fallible import fallible

In [2]: @fallible(ArithmeticError)
   ...: def div(a, b):
   ...:     return a / b
   ...:
   ...:

In [3]: div(1, 2)
Out[3]: 0.5

In [4]: res = div(1, 0)
ERROR:root:called <function div at 0x10d3c6ae8> with *args=(1, 0) and **kwargs={}
Traceback (most recent call last):
  File "/Users/user/fallible.py", line 17, in wrapped
    return f(*args, **kwargs)
  File "<ipython-input-17-e056bd886b5c>", line 3, in div
    return a / b

In [5]: repr(res)
'None'

You can also modify this solution to return something more meaningful than None from the except part (or even make the solution generic by specifying this return value in fallible's arguments).

answered Jul 12, 2018 at 16:55

Eli Korvigo
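Following the suggestion to make the return value configurable, here is a sketch of a variant that takes a default argument. The default parameter and the NaN fallback in the usage example are assumptions, not part of the original decorator. Passing logger=False keeps the demo quiet.

```python
import logging
from functools import wraps


def fallible(*exceptions, default=None, logger=None):
    """Catch `exceptions`, log them, and return `default` instead.

    logger: a custom logger; None means the root logger, False disables logging.
    """
    def fwrap(f):
        @wraps(f)
        def wrapped(*args, **kwargs):
            try:
                return f(*args, **kwargs)
            except exceptions:
                message = f"called {f.__name__} with args={args} and kwargs={kwargs}"
                if logger is None:
                    logging.exception(message)
                elif logger:
                    logger.exception(message)
                return default
        return wrapped
    return fwrap


# Usage: division that yields NaN instead of raising
@fallible(ArithmeticError, default=float("nan"), logger=False)
def div(a, b):
    return a / b


print(div(1, 2))  # 0.5
print(div(1, 0))  # nan
```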

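In your logging module (if it is a custom module), just enable stack_info:

api_logger.exceptionLog("*Input your Custom error message*", stack_info=True)

Here exceptionLog is a method of that custom module. With a standard logging.Logger, the same keyword works on logger.exception("...", stack_info=True).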

answered May 20, 2020 at 10:40

Dunggeon

If you look at this code example (which works for Python 2 and 3), you'll see the function definition below, which can extract

- method

- line number

- code context

- file path

for an entire stack trace, whether or not there has been an exception:

import sys
import traceback

# frame_trans() and extract_all_sentry_frames_from_exception() come from the gist
def sentry_friendly_trace(get_last_exception=True):
    try:
        current_call = list(map(frame_trans, traceback.extract_stack()))
        alert_frame = current_call[-4]
        before_call = current_call[:-4]

        err_type, err, tb = sys.exc_info() if get_last_exception else (None, None, None)
        after_call = [alert_frame] if err_type is None else extract_all_sentry_frames_from_exception(tb)

        return before_call + after_call, err, alert_frame
    except Exception:
        return None, None, None

Of course, this function depends on the entire gist linked above, in particular on extract_all_sentry_frames_from_exception() and frame_trans(), but the exception-info extraction totals fewer than about 60 lines.

Hope that helps!

answered Jun 28, 2020 at 3:26
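Even without the gist, the standard library gives you the method, line number, code context and file path of every frame on the current stack, whether or not an exception occurred. Here is a minimal sketch. current_frames and handler are hypothetical names, and the dictionary keys are chosen for illustration.

```python
import traceback


def current_frames():
    """Return file path, line number, method name and code line for each frame."""
    # extract_stack() lists frames from the outermost call down to this function;
    # the last entry (current_frames itself) is dropped
    return [
        {
            "file": fs.filename,
            "line": fs.lineno,
            "method": fs.name,
            "context": fs.line,  # may be empty when the source is unavailable
        }
        for fs in traceback.extract_stack()[:-1]
    ]


def handler():
    return current_frames()


frames = handler()
for frame in frames:
    print(frame)
```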

I wrap all my functions with my custom-designed logger:

import json
import timeit
import traceback
import sys
import unidecode  # third-party: transliterates non-ASCII text


def main_writer(f, argument):
    try:
        f.write(str(argument))
    except UnicodeEncodeError:
        f.write(unidecode.unidecode(argument))


def logger(*argv, logfile="log.txt", singleLine=False):
    """
    Writes Logs to LogFile
    """
    with open(logfile, 'a+') as f:
        for arg in argv:
            if arg == "{}":
                continue
            if type(arg) == dict and len(arg) != 0:
                json_object = json.dumps(arg, indent=4, default=str)
                f.write(str(json_object))
                f.flush()
                """
                for key,val in arg.items():
                    f.write(str(key) + " : "+ str(val))
                    f.flush()
                """
            elif type(arg) == list and len(arg) != 0:
                for each in arg:
                    main_writer(f, each)
                    f.write("\n")
                    f.flush()
            else:
                main_writer(f, arg)
                f.flush()
            if singleLine == False:
                f.write("\n")
        if singleLine == True:
            f.write("\n")


def tryFunc(func, func_name=None, *args, **kwargs):
    """
    Time for Successful Runs
    Exception Traceback for Unsuccessful Runs
    """
    stack = traceback.extract_stack()
    filename, codeline, funcName, text = stack[-2]
    func_name = func.__name__ if func_name is None else func_name  # sys._getframe().f_code.co_name # func.__name__
    start = timeit.default_timer()
    x = None
    try:
        x = func(*args, **kwargs)
        stop = timeit.default_timer()
        # logger("Time to Run {} : {}".format(func_name, stop - start))
    except Exception as e:
        logger("Exception Occurred for {} :".format(func_name))
        logger("Basic Error Info :", e)
        logger("Full Error TraceBack :")
        # logger(e.message, e.args)
        logger(traceback.format_exc())
    return x


def bad_func():
    return 'a' + 7


if __name__ == '__main__':
    logger(234)
    logger([1, 2, 3])
    logger(['a', 'b', 'c'])
    logger({'a': 7, 'b': 8, 'c': 9})
    tryFunc(bad_func)

answered Oct 13, 2021 at 9:17

My approach was to create a context manager that logs exceptions and then re-raises them:

import logging
from contextlib import AbstractContextManager

class LogError(AbstractContextManager):
    def __init__(self, logger=None):
        self.logger = logger.name if isinstance(logger, logging.Logger) else logger

    def __exit__(self, exc_type, exc_value, traceback):
        if exc_value is not None:
            logging.getLogger(self.logger).exception(exc_value)
        # returning None (falsy) lets the exception propagate after logging

with LogError():
    1/0

You can pass either a logger name or a logger instance to LogError(). By default it uses the root logger (by passing None to logging.getLogger).

One could also simply add a switch for raising the error or just logging it.

answered Aug 23, 2022 at 12:01

MuellerSeb
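The switch for raising or only logging, mentioned above, could look like this sketch with a hypothetical reraise flag. Returning a true value from __exit__ is what tells Python to suppress the exception. The exception info is passed explicitly through exc_info rather than relying on logger.exception.

```python
import logging
from contextlib import AbstractContextManager


class LogError(AbstractContextManager):
    """Log any exception leaving the block; re-raise it unless reraise=False."""

    def __init__(self, logger=None, reraise=True):
        self.logger = logger.name if isinstance(logger, logging.Logger) else logger
        self.reraise = reraise

    def __exit__(self, exc_type, exc_value, traceback):
        if exc_value is not None:
            logging.getLogger(self.logger).error(
                "%s", exc_value, exc_info=(exc_type, exc_value, traceback)
            )
        # A true return value suppresses the exception; False lets it propagate
        return exc_value is not None and not self.reraise


with LogError(reraise=False):
    1 / 0
reached_after_block = True  # only reached because the error was swallowed

try:
    with LogError():
        raise KeyError("missing")
except KeyError:
    reraised = True
```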

If you can cope with the extra dependency, use twisted.log: you don't have to log errors explicitly, and it writes the entire traceback and the time to the file or stream.

Wim Coenen

answered Mar 4, 2011 at 9:26

Jakob Bowyer

A clean way to do it is to use format_exc() and then parse the output to get the relevant part:

from traceback import format_exc

try:
    1/0
except Exception:
    # format_exc() ends with a newline, so [-2] is the last non-empty line
    print('the relevant part is: ' + format_exc().split('\n')[-2])

Regards

Rohan

answered Feb 20, 2013 at 16:32
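Splitting the formatted text works, but traceback.format_exception_only returns exactly the final "Type: message" part without any string slicing. Here is a minimal sketch of that alternative:

```python
import traceback

try:
    1 / 0
except Exception as e:
    # format_exception_only() returns just the final "Type: message" line(s)
    relevant = "".join(traceback.format_exception_only(type(e), e)).strip()

print("the relevant part is: " + relevant)
```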

If you are even a little familiar with programming and have ever tried to run something "in production", this dialogue will probably make you wince:

"Vasya, our app has gone down. Can you look into what happened?"

"Umm... And how exactly am I supposed to do that?"

Yes, Vasily apparently has no logging set up. And that is terrible, for several reasons at least:

- He will never find out why his application crashed.

- He won't be able to trace what led to an error (even if the application didn't crash).

- He won't be able to see the state of his system at a given moment N.

- He won't be able to check the logs proactively to keep an eye on the application's health.

- He won't be able to show off his... (ahem).

Well, the last point is probably unnecessary. But one thing we have learned for sure:

Logging is an extremely important part of programming.

In Python, the main logging tool is the logging library. So let's take a closer look at it together with IT Resume.

What is logging?

The logging module in Python is a set of functions and classes that let you record events that happen while your code runs. The module is part of the standard library, so a single line is all you need to start using it:

import logging

The main function you will need when working with this module is basicConfig(). This is where you specify all the main settings (at least at a basic level).

The basicConfig() function has 3 main parameters:

- level: the logging level;

- filename: where the logs are sent;

- format: the layout in which records are saved.

Let's look at each of these parameters in more detail.

It is probably obvious to everyone that the events our code generates can differ dramatically in importance. Catching critical errors (FatalError) is one thing; recording informational messages (for example, the moment a user logs in to a website) is quite another.

Accordingly, to avoid cluttering the logs with unnecessary information, you can set the minimum level of recorded events in basicConfig().

By default, only warnings (WARNING) and events with a higher priority are recorded: errors (ERROR) and critical errors (CRITICAL).

logging.basicConfig(level=logging.DEBUG)

After that, to record an informational message (or print it to the console; more on that shortly), it is enough to write code like this:

logging.debug('debug message')

logging.info('info message')

And so on. Now let's discuss where our messages end up.

Displaying the log and writing it to a file

The filename parameter of basicConfig controls where the logs go. By default, all your logs go to the console (more precisely, to sys.stderr).

In other words, if you simply run this code:

import logging

logging.error('WOW')

then the message WOW will appear in your console. Of course, nobody really needs these messages in the console. So how do you send the log to a file? Very simply:

logging.basicConfig(filename = "mylog.log")

OK, writing to a file and choosing the logging level are more or less clear. But how do you set up your own template? Let's figure it out.

Formatting the log

So, the last thing we need to sort out is log formatting. This option lets you enrich the log with useful information: the date, the name of the file where the error occurred, the line number, the method name, and so on.

As you have all guessed, this is done with the format parameter.

For example, if you pass the following inside basicConfig:

format = "%(asctime)s - %(levelname)s - %(funcName)s: %(lineno)d - %(message)s"

then the error output will look like this:

2019-01-16 10:35:12,468 - ERROR - <module>: 1 - Hello, world!

You can choose which information to include in the log and which to leave out. The default format is:

<LEVEL>:<LOGGER_NAME>:<MESSAGE>

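Keep in mind that all logging.basicConfig parameters must be set before the first call to any logging function: the first logging call configures the root logger automatically, and once it has handlers, later basicConfig calls do nothing.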
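To see what the format fields from this section produce without touching the root logger, here is a minimal sketch. It attaches the same format string to a handler on a hypothetical named logger that writes to an in-memory buffer. basicConfig would apply the same Formatter to the root logger.

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter(
    "%(asctime)s - %(levelname)s - %(funcName)s: %(lineno)d - %(message)s"
))
logger = logging.getLogger("format_demo")  # hypothetical logger name
logger.addHandler(handler)
logger.propagate = False


def divide():
    # funcName and lineno refer to this logging call
    logger.error("Hello, world!")


divide()
line = buffer.getvalue().strip()
print(line)  # shape: <asctime> - ERROR - divide: <lineno> - Hello, world!
```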

Epilogue

Well, we have gone through all the main parameters of the logging module and the basicConfig function, which let you set up basic logging in your project. From here on, it's all practice and learning the hard way 🙂

Instead of a conclusion, here is a working piece of code you can use 🙂

import logging

logging.basicConfig(
    level=logging.DEBUG,
    filename="mylog.log",
    format="%(asctime)s - %(module)s - %(levelname)s - %(funcName)s: %(lineno)d - %(message)s",
    datefmt='%H:%M:%S',
)

logging.info('Hello')

If you want to explore the parameters in more detail, the official documentation will help (it is quite good, by the way).

In this post, we will see how to log an error in Python with debug information. Logging is important in Python for debugging errors.

Let's see how we can do that.

(Also in the same context, see our earlier posts: How to Handle Errors and Exceptions in Python? and How to Code Custom Exception Handling in Python?)

Option 1 – Using logging.exception –

import logging

try:
    1 / 0  # stands in for <SOME_OPERATION>
except ZeroDivisionError:  # any standard error, e.g. <STANDARD_PYTHON_ERRORNAME>
    logging.exception("message")

Option 2 – Using sys.excepthook –

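To handle all types of uncaught exceptions, we can use the “try-except” block (read our detailed post here) or we can use sys.excepthook.

Note that excepthook is invoked every time an exception is raised and not caught. So you can override the default behavior of sys.excepthook to do whatever you like (including logging the exception). Inside the hook there is no active except block, so pass the exception info to the logger explicitly with exc_info=(exc_type, exc_value, exc_traceback) instead of relying on logging.exception.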

When an exception is raised and uncaught, the interpreter calls sys.excepthook with three arguments:

- Exception class,

- Exception instance,

- A traceback object

But we can customize the handling by assigning another three-argument function to sys.excepthook.

Let’s see an example of how to use it:

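import logging
import sys

logger = logging.getLogger('<CUSTOM_LOGGER>')

# Custom handler for uncaught exceptions (add a FileHandler to logger to write to a text file)
def custom_handler(exc_type, exc_value, exc_traceback):
    # There is no active except block here, so pass the exception info explicitly
    logger.error("Uncaught exception: {0}".format(exc_value),
                 exc_info=(exc_type, exc_value, exc_traceback))

# Install the exception handler
sys.excepthook = custom_handler

def main():
    # placeholder for your program's entry point
    raise RuntimeError("something went wrong")

if __name__ == '__main__':
    main()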

Another way of using it:

import sys
import logging

logger = logging.getLogger(__name__)
handleIt = logging.StreamHandler(stream=sys.stdout)
logger.addHandler(handleIt)

def custom_handler(exc_type, exc_value, exc_traceback):
    # Hand some exceptions to the default hook instead of logging them
    # (KeyboardInterrupt here, standing in for <ANY_STANDARD_EXCEPTION>)
    if issubclass(exc_type, KeyboardInterrupt):
        sys.__excepthook__(exc_type, exc_value, exc_traceback)
        return
    logger.error("Uncaught exception", exc_info=(exc_type, exc_value, exc_traceback))

sys.excepthook = custom_handler

if __name__ == "__main__":
    raise RuntimeError("Test The Logic")
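The interpreter only calls sys.excepthook for exceptions that would end the program, which makes the hook awkward to try out. The sketch below installs a hook like the one above and then calls it by hand with sys.exc_info(), so you can inspect the logged output without the program exiting. log_uncaught and the "excepthook_demo" logger are hypothetical names.

```python
import io
import logging
import sys

buffer = io.StringIO()
logger = logging.getLogger("excepthook_demo")  # hypothetical logger name
logger.addHandler(logging.StreamHandler(buffer))
logger.propagate = False


def log_uncaught(exc_type, exc_value, exc_traceback):
    # Let Ctrl+C behave as usual instead of logging it
    if issubclass(exc_type, KeyboardInterrupt):
        sys.__excepthook__(exc_type, exc_value, exc_traceback)
        return
    logger.error("Uncaught exception", exc_info=(exc_type, exc_value, exc_traceback))


sys.excepthook = log_uncaught

# Call the installed hook manually so the effect can be checked without exiting
try:
    raise RuntimeError("Test The Logic")
except RuntimeError:
    sys.excepthook(*sys.exc_info())

output = buffer.getvalue()
print(output)
```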

Option 3 – Logging to a file –

You can log to an external file.

with open("logoutput.log", "w") as logFile:
    try:
        1 / 0  # stands in for <SOME_OPERATION>
    except Exception as e:
        logFile.write("Exception - {0}\n".format(str(e)))
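Writing str(e) to a file loses the traceback. Here is a minimal sketch of the same idea using a logging.FileHandler, which records the level, file name, line number and full traceback. The temporary directory and the "file_demo" logger name are assumptions that stand in for your real log location and logger.

```python
import logging
import os
import tempfile

# Assumption: a temporary directory stands in for your real log location
log_path = os.path.join(tempfile.mkdtemp(), "logoutput.log")

logger = logging.getLogger("file_demo")  # hypothetical logger name
file_handler = logging.FileHandler(log_path, mode="w", encoding="utf-8")
file_handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s %(filename)s:%(lineno)d %(message)s")
)
logger.addHandler(file_handler)
logger.propagate = False

try:
    {}["missing"]
except KeyError:
    # Unlike logFile.write(str(e)), this also records the traceback
    logger.exception("lookup failed")

file_handler.close()  # flush and release the file
with open(log_path, encoding="utf-8") as f:
    contents = f.read()
print(contents)
```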

Option 4 – Using sys.exc_info() –

This function returns a tuple of three values that describe the exception currently being handled.

The information returned is specific both to the current thread and to the current stack frame.

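import sys

print(sys.exc_info())  # (None, None, None): nothing is being handled yet
try:
    1 / 0  # stands in for <SOME_OPERATION>
except ZeroDivisionError:  # stands in for <ANY_STANDARD_EXCEPTION>
    print(sys.exc_info())  # (exception class, exception instance, traceback object)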

Or you can pass exc_info=True to a logging call, as below:

import logging

try:
    1 / 0  # stands in for <SOME_OPERATION>
except Exception as e:
    logging.critical(e, exc_info=True)
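Here is a short sketch of what sys.exc_info() returns on Python 3: (None, None, None) when no exception is being handled, and the (type, value, traceback) triple inside an except block. describe_current_exception is a hypothetical helper. tb_lineno is the line number stored in the outermost traceback entry.

```python
import sys


def describe_current_exception():
    exc_type, exc_value, exc_tb = sys.exc_info()
    if exc_type is None:
        return None  # nothing is being handled right now
    return exc_type.__name__, str(exc_value), exc_tb.tb_lineno


outside = describe_current_exception()

try:
    [][0]
except IndexError:
    inside = describe_current_exception()

print(outside)
print(inside)
```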

Option 5 – Using the traceback module –

import traceback

try:
    raise Exception()
except Exception as e:
    print(traceback.extract_tb(e.__traceback__))
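extract_tb returns FrameSummary objects, so you can read the file, line number and function of each frame individually instead of printing the whole list. Here is a minimal sketch with a hypothetical parse function. In Python 3, e is unbound once the except block ends, so everything needed from it is captured inside the block.

```python
import traceback


def parse(text):
    return int(text)


try:
    parse("abc")
except ValueError as e:
    frames = traceback.extract_tb(e.__traceback__)
    error_name = type(e).__name__

# The last FrameSummary is the frame where the exception was raised
last = frames[-1]
print(f"{last.filename}:{last.lineno} in {last.name}: {error_name}")
```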

both file and console ,python logging basicconfig example ,python logging basics ,python logging backupcount ,python logging best practices stack overflow ,python logging basicconfig format not working ,python logging color ,python logging console ,python logging custom formatter ,python logging cookbook ,python logging close handler ,python logging class ,python logging console and file ,python logging documentation ,python logging default file location ,python logging dictconfig example ,python logging dictconfig ,python logging debug not printing ,python logging default level ,python logging date format ,python logging decorator ,python logging exception example ,python logging example to file ,python logging exception ,python logging example github ,python logging extra fields ,python logging exception traceback ,python logging exc_info=true ,python logging format ,python logging format example ,python logging filehandler example ,python logging file rotation ,python logging from multiple modules ,python logging function ,python logging filter ,python logging getlogger ,python logging geeksforgeeks ,python logging github ,python logging get filename ,python logging github example ,python logging get level ,python logging get all loggers ,python logging get handler ,python logging handlers ,python logging hierarchy ,python logging httphandler example ,python logging howto ,python logging handler level ,python logging handler stdout ,python logging hostname ,python logging into file ,python logging info not printing ,python logging in json format ,python logging in file ,python logging info ,python logging interview questions ,python logging in multiple files ,python logging in pytest ,python logging json formatter ,python logging json ,python logging json format ,python logging json config ,python logging jupyter notebook ,python logging json output ,python logging journald ,python logging json handler ,python logging keyerror 'formatters' ,python logging 
keyerror ,python logging kubernetes ,python logging kwargs ,python logging kibana ,python logging keyword arguments ,python logging keyerror 'qualname' ,python logging kafka ,python logging levels ,python logging line number ,python logging libraries ,python logging level hierarchy ,python logging levels example ,python logging lambda ,python logging log rotation ,python logging log to file ,python logging module ,python logging module example ,python logging multiple files ,python logging multiprocessing ,python logging method name ,python logging multiple variables ,python logging module install ,python logging not printing ,python logging not working ,python logging not creating file ,python logging new file every day ,python logging not printing to stdout ,python logging name ,python logging new line ,python logging on console ,python logging overwrite file ,python logging on console and file ,python logging only to file ,python logging on stdout ,python logging object ,python logging only current module ,python logging output to file ,python logging package ,python logging print to console ,python logging print dictionary ,python logging print to console and file ,python logging program ,python logging package example ,python logging print exception ,python logging propagate example ,python logging queuehandler ,python logging qualname ,python logging quickstart ,python logging queuehandler example ,python logging queuehandler multiprocessing ,python logging quiet ,python logging qt ,python logging quiet mode ,python logging rotatingfilehandler example ,python logging rotatingfilehandler ,python logging rolling file ,python logging remove all handlers ,python logging real python ,python logging rotate size ,python logging root ,python logging root logger ,python logging streamhandler ,python logging set level ,python logging source code ,python logging stack overflow ,python logging stdout ,python logging shutdown ,python logging syslog ,python logging 
smtphandler example ,python logging to file and console ,python logging to file example ,python logging thread name ,python logging timedrotatingfilehandler example ,python logging to stdout ,python logging timestamp ,python logging timedrotatingfilehandler ,python logging using config file ,python logging utc ,python logging utf-8 ,python logging unicodeencodeerror ,python logging unrecognised argument(s) encoding ,python logging unittest ,python logging usage ,python logging uncaught exceptions ,python logging vs print ,python logging variables ,python logging verbose ,python logging vs logger ,python logging valueerror unrecognised argument(s) encoding ,python logging version ,python logging variable value ,python logging variable substitution ,python logging with timestamp ,python logging write to file ,python logging write to file and console ,python logging warning ,python logging with variables ,python logging w3schools ,python logging with line number ,python logging with rotation ,python logging xml formatter ,python logging xml ,python xmlrpc logging ,python logging yaml config example ,python logging yaml config ,python logging youtube ,python logging yaml filehandler ,python logging yaml format ,python logging yaml filename ,python logging yaml filter ,python logging yaml environment variable ,python logging zip ,python logging zeromq ,python zeep logging ,python logging time zone ,zappa python logging ,python logo images ,python logo svg ,python logo download ,python logo hd ,python logo vector ,python logo transparent background ,python logo meaning ,python logo animation ,python logo ascii art ,python logo ai ,python logo art ,python add logo to image ,python anaconda logo ,python add logo to plot ,python app logo ,python logo black and white ,python logo black background ,python logo blue ,python logo background ,python logo buy ,python bokeh logo ,python logo white background ,python logo color ,python logo copyright ,python logo copy paste ,python 
logo colours ,python logo creator ,python logo commands ,python logo clip art ,python logo concept ,python logo design ,python logo drawing ,python logo detection ,python logo detection opencv ,python logo definition ,python django logo ,python dash logo ,python logo emoji ,python logo explained ,python logo eps ,python logo evolution ,logo python en latex ,eckton python logo mini satchel ,python efteling logo ,python snap7 example logo ,python logo font ,python logo free download ,python logo free ,python logo flat ,python logo file ,python flask logo ,python first logo ,python find logo in image ,python logo gif ,python logo generator ,python logo github ,python logo guidelines ,python logo graphic ,python gaming logo ,python gui logo ,python logo small.gif ,python logo high resolution ,python logo history ,python logo hd wallpaper ,python logo hat ,python logo png hd ,python logo color hex ,python logo icon ,python logo images download ,python logo ico file ,python logo in latex ,python logo in turtle ,python logo inkscape ,python idle logo ,python logo jpg ,jupyter python logo ,python logo license ,python logo latex ,python logo line ,python libraries logo ,python machine learning logo ,python logo logo ,logo logiciel python ,python logo maker ,python logo mobile wallpaper ,python logo module ,python logo make ,ball python logo maker ,python logo 3d model ,python logo no background ,python logo no copyright ,python logo name ,python new logo ,python numpy logo ,nltk python logo ,python logo png download ,python logo png transparent ,python logo origin ,python logo outline ,python logo programming ,python logo pixel art ,python logo pictures ,python logo plot ,python qrcode logo ,python logo recognition ,python logo rgb ,python logo reason ,python logo represent ,python requests logo ,python remove logo ,opencv python logo recognition ,python logo sticker ,python logo svg download ,python logo small ,python logo snake ,python logo size ,python logo siemens 
,python logo transparent ,python logo t shirt ,python logo turtle ,python logo trademark ,python logo template ,python tkinter logo ,python turtle logo code ,python tornado logo ,python logo usage ,python logo unicode ,python unittest logo ,python logo vector graphics ,python vs logo ,python logo without background ,python logo wallpaper ,python logo white ,python logo wiki ,python logo wikimedia ,python logo wallpaper hd ,python logo with black background ,python logo yellow ,python logging example code ,python logging example multiple modules ,python logging example format ,python logging example stdout ,python logging example to console ,python logging example to file and console ,python logging example arguments ,python logging addhandler example ,python logging adapter example ,python logging addfilter example ,python logging configure all loggers ,python logging args example ,python logging basicconfig append ,python logging addlevelname example ,python logging basic example ,python logging best example ,python logging by example ,python logging basic setup ,python behave logging example ,python 3 logging basicconfig example ,python logging example console ,python logging example config file ,python logging example config ,python logging example color ,python logging basicconfig console ,python logging sample code ,python logging.basicconfig create file ,python logging example debug ,python logging decorator example ,python logging dictionary example ,python logging basicconfig does not work ,python logging basicconfig datefmt ,python logging basicconfig default format ,python logging basicconfig directory ,python logging error example ,python logging basicconfig encoding ,python logging extra example ,python logging emit example ,python logging email example ,python logging basicconfig disable_existing_loggers ,python logging basicconfig no effect ,python logging example file ,python logging example file and console ,python logging example filehandler 
,python logging filter example ,python logging basicconfig format ,python logging basicconfig file ,python logging fileconfig example ,python how to log ,python how to log exception ,python how to login to a website ,python how to log to a file ,python how to log errors ,python how to log to console ,python how to log traceback ,python how to log info ,python how to log to syslog ,python how to log an exception ,python how to log all errors ,python how to log into a website ,python how to create a log file ,python how to write a log file ,python how to read a log file ,python how to close a log file ,python log to both console and file ,python log to base 2 ,python log to base 10 ,python log to base ,python log to buffer ,python log to browser console ,python log base n ,python log basicconfig ,python how to create log file ,python how to calculate log ,python how to console log ,python logging how to close log file ,python log to console and file ,python log to csv ,python log to cloudwatch ,python log to csv file ,python how to log dictionary ,python how to do log ,python how to log to different files ,python log to database ,python log to docker ,python log to dataframe ,python log to dev null ,python log to debug ,python log to elasticsearch ,python log to elk ,python log to excel ,python log exception message ,python log e ,python how to log to file ,python how to read log file ,python how to write log file ,python how to save log file ,python how to parse log file ,python how to get log ,python log to graylog ,python log to file ,python log generator ,python log graph ,python log gamma ,python log grid ,python log github ,python log to html ,python log to http ,python server to log http requests ,python log handler ,python log histogram ,python log http requests ,python log handler example ,python log handler stdout ,python to log in ,python script to log in ,python log into file ,python log in file ,python log is not defined ,python log in console ,python 
how to log json ,python log to journalctl ,python log to journald ,python log to json format ,python log json object ,python log json formatter ,python log json pretty ,python convert log to json ,python log to kibana ,python log to kafka ,python log to kinesis ,python log keystrokes ,python log kwargs ,python log kubernetes ,python log keyboardinterrupt ,python log keyerror ,python how to set log level ,python log to logstash ,python log to list ,python string to log level ,python log levels ,python log level environment variable ,python log likelihood ,python log loss ,python how to make log file ,python log to multiple files ,python log to mongodb ,python log to mysql ,python log to messages ,python log to memory ,python log to multiple handlers ,python log to multiple loggers ,python how to do natural log ,python log to new file ,python log numpy ,python log normal distribution ,python log n ,python log not printing ,python log number ,python log negative number ,python log to output ,python code to log off computer ,python log to output file ,python log object ,python log of array ,python log of number ,python log operator ,python log of list ,python log to pandas ,python to print log ,python log plot ,python log parser ,python log parser github ,python log parser library ,python log package ,python log to queue ,python log qualname ,python sqlite log queries ,python mysql log queries ,python qt log viewer ,python django log queries ,python log qiita ,python quiver log plot ,python log to rsyslog ,python log to remote syslog ,python log to robot framework ,python log to redis ,python log to rabbitmq ,python log rotation ,python log returns ,python how to log stdout ,python how to save log ,python log to stdout and file ,python log to s3 ,python log to string ,python how to log time ,python how to take log ,python how to log to stdout ,python how to use log ,python log to uwsgi ,python log unhandled exceptions ,python log utf-8 ,python log uniform distribution 
,python log utc time ,python log ubuntu ,python log unittest ,python log to variable ,python log viewer ,python log value ,python log vs print ,python log variable name and value ,python log visualization ,python log vs ln ,python log verbose ,python how to write log ,python log warning ,python log with timestamp ,python log without math ,python log with base ,python log with color ,python log to xml ,python log x axis ,python log(x) ,python log(x 10) ,python histogram log x scale ,python xticks log scale ,python matplotlib log x ,python xlim log ,python log y axis ,python log y scale ,python log yaml ,python log yield ,python yaml log ,python yscale log ,python histogram log y ,python matplotlib log y ,python log zero ,python log zip ,python log z axis ,python log zeromq ,python np.log zero ,python zip log files ,python math log zero ,python log zur basis 2 ,python log exception stack trace ,python log exception traceback ,python logging exception example ,python logger log exception ,python catch log exception ,python exception log file ,python log exception and raise ,python log exception and message ,python logging exception best practices ,python log caught exception ,python logging custom exception ,python log exception and continue ,python log exception details ,python log exception decorator ,python logging exception error ,python log exception format ,python logging exception formatter ,python log full exception ,python logging for exception ,python log generic exception ,python logging exception handler ,python logging exception handling ,python how to exception handling ,how to log exception in python ,python log exception as json ,python log exception line number ,python log exception level ,python logging how to log exception ,python log exception message and stack trace ,python logging module exception ,python log exception name ,python logging exception no traceback ,python log exception object ,python logging exception one line ,python logging 
exception output ,python log on exception ,python logging exception print ,python log exception raise ,python log exception and reraise ,python logging exception stderr ,python logging exception source code ,python log exception without stack trace ,python log exception type ,python log the exception ,python log exception without traceback ,python log uncaught exception ,python log exception vs error ,python log exception with traceback ,python log exception with stack trace ,python log exception with message ,python log warning exception ,python log with exception ,python code to login to a website and download file ,python code to login to a website using selenium ,python requests login to a website ,how to login to a website using python selenium ,python script to login to website automatically ,python selenium to login to website ,python login to website and scrape ,python login to website javascript ,python login to website and upload file ,how to login to any website using python ,python login website and scrape ,how to automate login to a website using python ,how to auto login to a website using python ,python login to website beautifulsoup ,python code to login to a website ,python login to website cookies ,python code to connect to a website ,python login to website example ,python selenium login to website example ,python login to website form ,python login to website github ,python login to https website ,how to login to a website in python ,python requests login to website javascript ,how to login to a website using python requests module ,python login to website mechanize ,how to login to a web page using python ,how to login to a website using python requests ,python script to login to a website ,python login to website selenium ,python log into secure website ,python script to connect to a website ,how to write a python script to login to a website ,how to login to a website using python ,how to connect to a website using python ,how to login to a 
website with python ,how to connect to a website with python ,python login to website with requests ,python login to website with cookies ,python login to website with captcha ,python login to website with selenium ,python how to write log to a file ,python log output to a file ,python log to a text file ,python log to file and console ,python log to file and screen ,python log to file append ,python log to file and print ,python log to file and stderr ,python 3 log to file and console ,python log file analysis ,python log file analyzer ,python logging to file basicconfig ,python log to both file and console ,python how to write to a binary file ,python logging to a file and console ,python logging to file config ,python log file clear ,python log file create ,python how to write to a csv file ,python how to append to a csv file ,python logging to a file example ,python how to write to a file example ,python write to log file example ,python log file error ,python how to write to a excel file ,python logging to file handler ,python how to log error ,python how to log errors ,python how to log all errors ,python log error with traceback ,python log error exception ,python log error and exit ,python log error vs exception ,python log error and continue ,python log error line number ,python log error and raise exception ,python log assertion error ,python log an error ,python log any error ,python logging error bad file descriptor ,python error logging best practices ,python logging count errors ,python log domain error ,python logging error exception ,python logging error example ,python logging error file ,python write error log file ,python logging get error message ,python logging error handling ,python log error in file ,how to log error in python ,python log import error ,how to log error message in python ,how to create error log file in python ,python logging error keyerror ,python logging error keyerror 'formatters' ,python logging keyerror ,python logging 
error levels ,python error log location ,python error log linux ,python log error message ,python log error message to file ,python logging error not working ,python logging error only ,python how to error out ,python logging error raise exception ,python logging error stderr ,python log error traceback ,python log error stack trace ,python logging error unicodeencodeerror ,python logging unicode error ,python logging error vs critical ,python logging error vs warning ,python logging error valueerror i/o operation on closed file ,python logging error vs info ,python log error with stack trace ,python log error with exception ,python best way to log errors ,python flask log errors ,python automatically log errors ,python script log errors ,python logger log errors ,python 3 log errors ,how to log errors in python ,python log info error ,python log all errors to file ,python logger error traceback , ,


In the vast computing world, many programming languages include facilities for logging. In our previous posts, you can learn best practices for Node logging, Java logging, and Ruby logging. As part of this ongoing logging series, this post describes what you need to know about Python logging best practices.

Because “log” can mean either a single log record or a log file, note that in this post “log” refers to a log file.

Advantages of Python logging

So, why learn about logging in Python? One of Python’s striking features is its capacity to handle high-traffic sites, with an emphasis on code readability. Other advantages of logging in Python are its dedicated standard library module, the variety of outputs to which log records can be directed (console, file, rotating file, Syslog, remote server, email, etc.), and the large number of extensions and plugins it supports. In this post, you’ll find examples of different outputs.

Python logging description

The Python standard library provides a logging module as a solution to log events from applications and libraries. Once the logging module is configured, its configuration becomes part of the Python interpreter process that is running the code. In other words, it is global. You can also configure the Python logging subsystem using an external configuration file. The supported configuration formats are specified in the Python standard library documentation for logging.config.
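As a hedged illustration of configuring logging from an external file, the sketch below passes a JSON document to logging.config.dictConfig. The JSON text is inlined as a string so the example runs on its own; in practice you would read it from a file. The file name logging.json and the logger name "myapp" are hypothetical placeholders.

```python
import json
import logging
import logging.config

# Contents you might keep in a separate file such as logging.json (hypothetical name).
CONFIG_JSON = """
{
  "version": 1,
  "disable_existing_loggers": false,
  "formatters": {
    "simple": {"format": "%(asctime)s %(levelname)s %(name)s %(message)s"}
  },
  "handlers": {
    "console": {
      "class": "logging.StreamHandler",
      "formatter": "simple",
      "level": "INFO"
    }
  },
  "loggers": {
    "myapp": {"handlers": ["console"], "level": "DEBUG"}
  }
}
"""

def configure_logging(text):
    """Apply a dictConfig-style configuration parsed from JSON text."""
    logging.config.dictConfig(json.loads(text))

configure_logging(CONFIG_JSON)
log = logging.getLogger("myapp")
log.info("configured from JSON")
```

Because the configuration is process-wide, calling configure_logging once at startup is enough; any module that later calls logging.getLogger("myapp") receives the configured logger.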

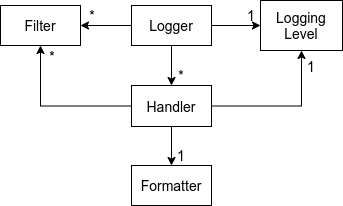

The logging library takes a modular approach and includes four categories of components: loggers, handlers, filters, and formatters. In short:

- Loggers expose the interface that application code directly uses.

- Handlers send the log records (created by loggers) to the appropriate destination.

- Filters provide a finer grained facility for determining which log records to output.

- Formatters specify the layout of log records in the final output.
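To see how the four component types fit together, here is a sketch that wires them up by hand. The logger name "components_demo", the DropHealthChecks filter, and the in-memory StringIO destination are illustrative assumptions chosen so the output can be inspected.

```python
import io
import logging

class DropHealthChecks(logging.Filter):
    """Hypothetical filter: reject records whose message mentions 'healthcheck'."""
    def filter(self, record):
        return "healthcheck" not in record.getMessage()

buffer = io.StringIO()                               # destination for the handler

logger = logging.getLogger("components_demo")        # logger: the API the app calls
logger.setLevel(logging.DEBUG)
logger.propagate = False                             # keep this demo away from the root logger

handler = logging.StreamHandler(buffer)              # handler: sends records to `buffer`
handler.setFormatter(logging.Formatter("%(levelname)s|%(name)s|%(message)s"))  # formatter: layout
handler.addFilter(DropHealthChecks())                # filter: fine-grained selection
logger.addHandler(handler)

logger.info("user logged in")
logger.info("healthcheck ok")                        # dropped by the filter
print(buffer.getvalue(), end="")                     # INFO|components_demo|user logged in
```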

Logger objects are organized into a tree that represents the various parts of your system and the third-party libraries you have installed. When you send a message to one of the loggers, the message is passed to all of that logger’s handlers, each of which formats it using its own attached formatter. The message then propagates up the logger tree, through the handlers of each ancestor, until it reaches the root logger or a logger that is configured with propagate=False.
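To make propagation concrete, the sketch below builds a three-level hierarchy and records which handlers see each message. The logger names "app", "app.db", and "app.db.pool" and the ListHandler helper are hypothetical, used only for illustration.

```python
import logging

class ListHandler(logging.Handler):
    """Hypothetical helper handler that stores each record's message in a list."""
    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())

app_handler = ListHandler()
db_handler = ListHandler()

app = logging.getLogger("app")
app.setLevel(logging.INFO)
app.addHandler(app_handler)
app.propagate = False          # stop at "app" instead of continuing to the root logger

db = logging.getLogger("app.db")
db.addHandler(db_handler)      # "app.db" inherits its effective level (INFO) from "app"

pool = logging.getLogger("app.db.pool")   # no handlers of its own

pool.info("pool exhausted")    # handled by db_handler, then by app_handler
db.propagate = False
pool.info("pool resized")      # handled by db_handler only; propagation stops at "app.db"
```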

Python logging platforms

This is an example of a basic logger in Python:

import logging
logging.basicConfig(level=logging.DEBUG, format='%(asctime)s %(levelname)s %(message)s', filename='/tmp/myapp.log', filemode='w')
logging.debug("Debug message")
logging.info("Informative message")
logging.error("Error message")

Line 1: import the logging module.

Line 2: call logging.basicConfig() with some arguments to configure the root logger and create the log file. Here we set the severity threshold (DEBUG), the record format (including a timestamp), the filename, and the file mode; filemode='w' makes each run overwrite the log file instead of appending to it.

Lines 3 to 5: log one message at each of three logging levels.

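The default format for log records is SEVERITY:LOGGER:MESSAGE (for example, ERROR:root:Error message), but the code above replaces it with its own format string. Running it therefore writes lines like these to /tmp/myapp.log (your timestamps will differ):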

2021-07-02 13:00:08,743 DEBUG Debug message
2021-07-02 13:00:08,743 INFO Informative message
2021-07-02 13:00:08,743 ERROR Error message
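One way to compare the default layout with the custom one is to format the same record with both. This sketch relies on logging.BASIC_FORMAT, the format string basicConfig uses when no format is given; the record's field values (including the pathname "example.py") are made up for illustration.

```python
import logging

# Build a record by hand (illustrative values) so both formatters see the same data.
record = logging.LogRecord(
    name="root", level=logging.ERROR, pathname="example.py", lineno=1,
    msg="Error message", args=None, exc_info=None,
)

default_fmt = logging.Formatter(logging.BASIC_FORMAT)   # "%(levelname)s:%(name)s:%(message)s"
custom_fmt = logging.Formatter("%(asctime)s %(levelname)s %(message)s")

print(default_fmt.format(record))   # ERROR:root:Error message
print(custom_fmt.format(record))    # <timestamp> ERROR Error message
```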

You can also choose the destination of log messages. As a first step, you can print messages to the screen by omitting filename, in which case basicConfig logs to sys.stderr:

import logging
logging.basicConfig(level=logging.DEBUG, format='%(asctime)s - %(levelname)s - %(message)s')
logging.debug('This is a log message.')
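basicConfig can also send records to several destinations at once through its handlers argument. The sketch below logs to stdout and to a file simultaneously; the temporary directory and the file name myapp.log are assumptions for the demo, and force=True (available since Python 3.8) replaces any root handlers installed by an earlier basicConfig call.

```python
import logging
import os
import sys
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "myapp.log")   # illustrative location

logging.basicConfig(
    level=logging.DEBUG,
    format="%(asctime)s - %(levelname)s - %(message)s",
    handlers=[
        logging.StreamHandler(sys.stdout),   # console (stdout instead of the default stderr)
        logging.FileHandler(log_path),       # file, opened in append mode by default
    ],
    force=True,   # remove root handlers left over from earlier basicConfig calls
)

logging.info("goes to the console and to the file")
```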

If you are targeting the cloud, you can take advantage of Python’s logging handlers to redirect content. For example, you can write logs to Stackdriver Logging (now Google Cloud Logging) from Python applications by using Google’s Python logging handler included with the Stackdriver Logging client library (in beta at the time of writing), or by using the client library to access the API directly. When developing your logger, make sure the root logger doesn’t use your log handler: since the Python Client for Stackdriver Logging library also does logging, you may get a recursive loop if the root logger uses your Python log handler.
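One library-independent way to follow that advice is to attach the remote handler to your application's own logger rather than to the root logger. In this sketch RemoteHandler is a hypothetical stand-in for a cloud handler, "myapp" is a hypothetical application logger name, and "google.cloud.logging" is an assumed name for the client library's internal logger.

```python
import logging

class RemoteHandler(logging.Handler):
    """Hypothetical stand-in for a cloud logging handler; it just collects messages."""
    def __init__(self):
        super().__init__()
        self.sent = []

    def emit(self, record):
        # A real handler would call the cloud API here, and that API client may itself log.
        self.sent.append(record.getMessage())

remote = RemoteHandler()

app_logger = logging.getLogger("myapp")     # hypothetical application logger
app_logger.setLevel(logging.INFO)
app_logger.addHandler(remote)               # attached here, NOT to logging.getLogger()

# The client library's own loggers (name assumed) are not descendants of "myapp",
# so their records never reach RemoteHandler and cannot trigger a recursive loop.
library_logger = logging.getLogger("google.cloud.logging")
library_logger.warning("library-internal message")

app_logger.info("order created")
```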

Python logging is highly customizable. The following tips on web application logging best practices will help you get the most from it:

Setting levels by name: this lets you keep level names in your own configuration (for example, a dictionary of settings) and reduces the possibility of typos.

import logging

LogWithLevelName = logging.getLogger('myLoggerSample')
level = logging.getLevelName('INFO')  # a registered name maps to its number, 20
LogWithLevelName.setLevel(level)

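logging.getLevelName(level) returns the textual name of a numeric logging level, for example 'INFO' for 20. When given a registered level name instead, it returns the corresponding number, which is how the snippet above uses it. The predefined levels, from highest to lowest severity, are:

- CRITICAL (50)

- ERROR (40)

- WARNING (30)

- INFO (20)

- DEBUG (10)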
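The sketch below shows getLevelName working in both directions and how a level set by name acts as a threshold. It reuses the logger name 'myLoggerSample' from the snippet above; everything else is standard library.

```python
import logging

print(logging.getLevelName(20))          # INFO
print(logging.getLevelName("WARNING"))   # 30

# setLevel also accepts the level name directly (Python 3.2+).
logger = logging.getLogger("myLoggerSample")
logger.setLevel("INFO")

for level in (logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR, logging.CRITICAL):
    # isEnabledFor applies the threshold: only INFO and above pass.
    print(logging.getLevelName(level), logger.isEnabledFor(level))
```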

Logging from multiple modules: if your application has several modules and you don’t want to repeat the logging initialization in every module before it logs messages, use hierarchical (dot-separated) logger names:

import logging

logging.getLogger("coralogix")
logging.getLogger("coralogix.database")
logging.getLogger("coralogix.client")

This makes coralogix.client and coralogix.database descendants of the coralogix logger. Their messages propagate to it, which makes multi-module logging easy: handlers only need to be configured once, on coralogix. This is one of the benefits of naming loggers with `__name__` (logging.getLogger(`__name__`)) when the package structure of your modules reflects the software architecture.
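Here is a sketch of that idea: handlers are configured once on the parent "coralogix" logger, and the child loggers used in other modules need no setup of their own. The format string and the in-memory stream are illustrative choices.

```python
import io
import logging

stream = io.StringIO()

# Configure only the parent logger, e.g. in the application's entry point.
parent = logging.getLogger("coralogix")
parent.setLevel(logging.INFO)
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(name)s: %(message)s"))
parent.addHandler(handler)

# Other modules just ask for a child logger; in real code this is typically
# logging.getLogger(__name__) inside the corresponding module.
db_log = logging.getLogger("coralogix.database")
client_log = logging.getLogger("coralogix.client")

db_log.info("connected")
client_log.warning("retrying request")
print(stream.getvalue(), end="")
```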

Logging with Django and uWSGI: to deploy web applications, you can use StreamHandler as the handler, which sends all logs to stderr. For Django, your logging configuration would include:

'handlers': {
    'stderr': {
        'level': 'INFO',
        'class': 'logging.StreamHandler',
        'formatter': 'your_formatter',
    },
},

Next, uWSGI forwards all of the app output, including prints and possible tracebacks, to syslog with the app name attached:

$ uwsgi --log-syslog=yourapp …
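The Django handler fragment above references a formatter named 'your_formatter', which must also be defined. A complete dictionary in the shape Django's LOGGING setting expects (Django passes it to logging.config.dictConfig by default) might look like the sketch below; the format string and the choice to attach the handler to the root logger are assumptions.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "your_formatter": {
            "format": "%(asctime)s %(levelname)s %(name)s %(message)s",
        },
    },
    "handlers": {
        "stderr": {
            "level": "INFO",
            "class": "logging.StreamHandler",   # writes to sys.stderr by default
            "formatter": "your_formatter",
        },
    },
    "root": {
        "handlers": ["stderr"],
        "level": "INFO",
    },
}

# In a Django project you would assign LOGGING in settings.py;
# calling dictConfig directly lets this sketch run on its own.
logging.config.dictConfig(LOGGING)
logging.getLogger("django.request").error("example error")
```

Since uWSGI forwards the process's output to syslog, anything this handler writes to stderr ends up there as well.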

Logging with Nginx: if you need features that uWSGI does not provide, for example improved handling of static resources (via any combination of Expires or ETag headers, gzip compression, pre-compressed gzip, etc.), you can place Nginx in front of your application. Nginx access logs and their format can be customized in its configuration. If you specify no format, Nginx uses the predefined combined format, as in this example for a Linux system:

access_log /var/log/nginx/access.log;

This line is similar to explicitly defining and specifying the combined format, as below (this version also appends the server port):

# note that the log_format directly below is a single line
log_format mycombined '$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $server_port';
access_log /var/log/nginx/access.log mycombined;

Log analysis and filtering: after writing proper logs, you might want to analyze them to obtain useful insights. First, open files using with blocks, so you won’t have to worry about closing them. Also, avoid reading everything into memory at once; instead, read one line at a time and use it to update cumulative statistics. Using the combined log format is practical if you plan to use log analysis tools, because many of them have pre-built filters for consuming these logs.

If you need to parse your log output for analysis, you might want to use the code below:

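import csv

# Inside a method of your log-analysis class; csv needs a text-mode file in Python 3.
with open(logfile, newline="") as f:
    for line in csv.reader(f, delimiter=' '):
        self._update(**self._parse(line))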

Python’s csv module contains code to read CSV files and other files with a similar delimited format. In this way, you can combine Python’s logging library to write the logs with the csv module to parse them.
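Building on that approach, here is a self-contained sketch that reads Nginx combined-format lines one at a time with the csv module and keeps only cumulative counts per HTTP status code. The sample lines are invented for illustration, and the field positions assume the combined format shown earlier (an extra field appended at the end, such as the server port, does not shift them).

```python
import csv
import io
from collections import Counter

def count_statuses(lines):
    """Tally HTTP status codes from an iterable of combined-format log lines.

    csv splits on spaces and honours double quotes, so the quoted request,
    referer, and user agent each become one field. The bracketed
    [$time_local] value contains a space and spans fields 3 and 4,
    which puts the status code at index 6.
    """
    counts = Counter()
    for row in csv.reader(lines, delimiter=" ", quotechar='"'):
        if len(row) < 10:
            continue  # skip blank or malformed lines
        counts[row[6]] += 1
    return counts

sample = io.StringIO(
    '127.0.0.1 - - [02/Jul/2021:13:00:08 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.68.0"\n'
    '127.0.0.1 - - [02/Jul/2021:13:00:09 +0000] "GET /missing HTTP/1.1" 404 153 "-" "curl/7.68.0"\n'
)
print(count_statuses(sample))
```

In practice you would pass a file object opened in text mode with newline="" instead of sample; because csv.reader consumes it lazily, only one line is held in memory at a time.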

Finally, the Coralogix Python appender sends all logs written by Python directly to Coralogix for search, live tail, alerting, and machine-learning-powered insights such as new error detection and flow anomaly detection.

Python Logging Deep Dive

The rest of this guide is focused on how to log in Python using the built-in support for logging. It introduces various concepts that are relevant to understanding Python logging, discusses the corresponding logging APIs in Python and how to use them, and presents best practices and performance considerations for using these APIs.


This Python tutorial assumes the reader has a good grasp of programming in Python; specifically, concepts and constructs pertaining to general programming and object-oriented programming. The information and Python logging examples in this article are based on Python version 3.8.

Python has offered built-in support for logging since version 2.3. This support includes library APIs for common concepts and tasks that are specific to logging and language-agnostic. This article introduces these concepts and tasks as realized and supported in Python’s logging library.

Basic Python Logging Concepts

When we use a logging library, we perform or trigger the following common tasks, each of which involves the associated logging concepts:

- A client issues a log request by executing a logging statement. Often, such logging statements invoke a function/method in the logging (library) API by providing the log data and the logging level as arguments. The logging level specifies the importance of the log request. Log data is often a log message, which is a string, along with some extra data to be logged. Often, the logging API is exposed via logger objects.

- To enable the processing of a request as it threads through the logging library, the logging library creates a log record that represents the log request and captures the corresponding log data.

- Based on how the logging library is configured (via a logging configuration), the logging library filters the log requests/records. This filtering involves comparing the requested logging level to the threshold logging level and passing the log records through user-provided filters.

- Handlers process the filtered log records to either store the log data (e.g., write the log data into a file) or perform other actions involving the log data (e.g., send an email with the log data). In some logging libraries, before processing log records, a handler may again filter the log records based on the handler’s logging level and user-provided handler-specific filters. Also, when needed, handlers often rely on user-provided formatters to format log records into strings, i.e., log entries.

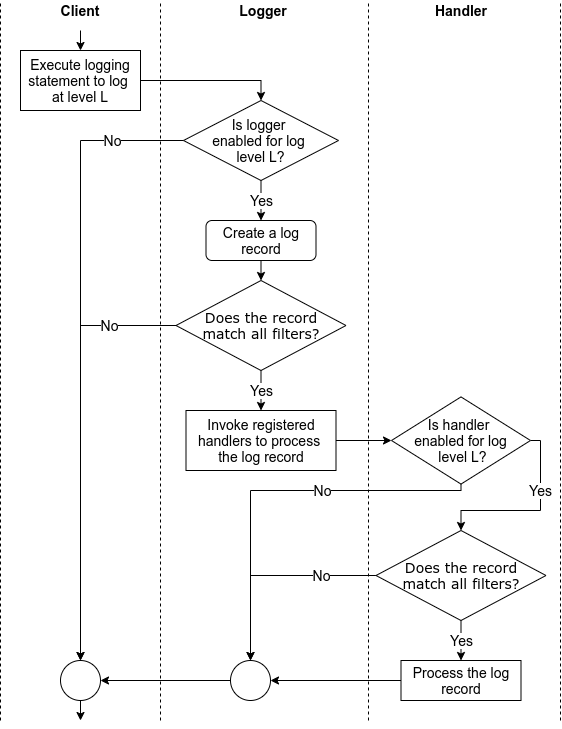

Independent of the logging library, the above tasks are performed in an order similar to that shown in Figure 1.

Figure 1: The flow of tasks when logging via a logging library
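As a rough illustration of the flow in Figure 1, the following sketch wires one logger, one filter, one handler, and one formatter together and shows which requests survive each stage. The logger name pipeline_demo, the drop_noisy filter, and the format string are hypothetical.

```python
import io
import logging

stream = io.StringIO()

# Logger: exposes the logging API and holds a threshold logging level
logger = logging.getLogger("pipeline_demo")
logger.setLevel(logging.INFO)
logger.propagate = False

# Filter: a plain callable that rejects records whose message mentions "noisy"
def drop_noisy(record):
    return "noisy" not in record.getMessage()

logger.addFilter(drop_noisy)

# Handler: writes records to the stream and has its own threshold level
handler = logging.StreamHandler(stream)
handler.setLevel(logging.INFO)
# Formatter: turns a log record into a string-based log entry
handler.setFormatter(logging.Formatter("%(levelname)s|%(name)s|%(message)s"))
logger.addHandler(handler)

logger.debug("below the logger threshold")  # dropped by the level check
logger.info("noisy heartbeat")              # dropped by the filter
logger.info("request %s served", 42)        # processed and written

print(stream.getvalue(), end="")
```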

Python Logging Module

Python’s standard library offers support for logging via the logging, logging.config, and logging.handlers modules.

- The logging module provides the primary client-facing API.
- The logging.config module provides the API to configure logging in a client.
- The logging.handlers module provides different handlers that cover common ways of processing and storing log records.

We collectively refer to these Python log modules as Python’s logging library.
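A minimal sketch of the three modules working together, assuming a rotating log file in a fresh temporary directory: logging.config.dictConfig() builds the configuration, the handler class comes from logging.handlers, and the client code only touches the logging API. The logger name myapp and the size limits are illustrative.

```python
import logging
import logging.config
import logging.handlers
import os
import tempfile

# Hypothetical log file location in a fresh temporary directory
LOG_FILE = os.path.join(tempfile.mkdtemp(), "app.log")

logging.config.dictConfig({
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "simple": {"format": "%(asctime)s %(levelname)s %(name)s: %(message)s"},
    },
    "handlers": {
        "rotating_file": {
            # Handler class provided by the logging.handlers module
            "class": "logging.handlers.RotatingFileHandler",
            "filename": LOG_FILE,
            "maxBytes": 1_000_000,  # roll over after about 1 MB
            "backupCount": 3,       # keep up to three rotated files
            "formatter": "simple",
        },
    },
    "loggers": {
        "myapp": {"level": "INFO", "handlers": ["rotating_file"]},
    },
})

# Client code only uses the logging module's API
logger = logging.getLogger("myapp")
logger.info("configured via logging.config")
```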

These Python log modules realize the concepts introduced in the previous section as classes, a set of module-level functions, or a set of constants. Figure 2 shows these classes and the associations between them.

Figure 2: Python classes and constants representing various logging concepts

Python Logging Levels

Out of the box, the Python logging library supports five logging levels: critical, error, warning, info, and debug. These levels are denoted by constants with the same name in the logging module, i.e., logging.CRITICAL, logging.ERROR, logging.WARNING, logging.INFO, and logging.DEBUG. The values of these constants are 50, 40, 30, 20, and 10, respectively.

At runtime, the meaning of a logging level is determined by its numeric value. Consequently, clients can introduce new logging levels by using numeric values that are greater than 0 and not equal to any pre-defined logging level.

Logging levels can have names. When names are available, logging levels appear by their names in log entries. Every pre-defined logging level has the same name as the corresponding constant; hence, they appear by their names in log entries, e.g., both logging.WARNING and the value 30 appear as ‘WARNING’. In contrast, custom logging levels are unnamed by default. So, an unnamed custom logging level with numeric value n appears as ‘Level n’ in log entries, which results in inconsistent and human-unfriendly log entries. To address this, clients can name a custom logging level using the module-level function logging.addLevelName(level, levelName). For example, after logging.addLevelName(33, 'CUSTOM1'), level 33 is recorded as ‘CUSTOM1’.
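The following sketch shows how a custom level is reported before and after naming it. It assumes a StreamHandler writing to an in-memory stream; the logger name levels_demo is illustrative, and level 33 is taken from the example above. Note that logging.addLevelName() changes a process-wide table, so the name applies to every logger.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

logger = logging.getLogger("levels_demo")
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False

# Pre-defined levels are plain integers
print(logging.CRITICAL, logging.ERROR, logging.WARNING, logging.INFO, logging.DEBUG)

logger.log(33, "before naming")      # unnamed custom level -> "Level 33"
logging.addLevelName(33, "CUSTOM1")  # name level 33 for all loggers
logger.log(33, "after naming")       # now reported as "CUSTOM1"
logger.log(30, "plain number")       # 30 is logging.WARNING

print(stream.getvalue(), end="")
```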

The Python logging library adopts the community-wide applicability rules for logging levels, i.e., guidance on when each logging level should be used:

- Debug: Use logging.DEBUG to log detailed information, typically of interest only when diagnosing problems, e.g., when the app starts.
- Info: Use logging.INFO to confirm the software is working as expected, e.g., when the app initializes successfully.
- Warning: Use logging.WARNING to report behaviors that are unexpected or indicative of future problems but do not affect the current functioning of the software, e.g., when the app detects low memory, which could affect its future performance.
- Error: Use logging.ERROR to report that the software has failed to perform some function, e.g., when the app fails to save data due to insufficient permissions.
- Critical: Use logging.CRITICAL to report serious errors that may prevent the continued execution of the software, e.g., when the app fails to allocate memory.

Python Loggers

The logging.Logger objects offer the primary interface to the logging library. These objects provide the logging methods to issue log requests along with the methods to query and modify their state. From here on out, we will refer to Logger objects as loggers.

Creation

The factory function logging.getLogger(name) is typically used to create loggers. By using the factory function, clients can rely on the library to manage loggers and to access loggers via their names instead of storing and passing references to loggers.

The name argument in the factory function is typically a dot-separated hierarchical name, e.g., a.b.c. This naming convention enables the library to maintain a hierarchy of loggers. Specifically, when the factory function creates a logger, the library links it to its nearest existing ancestor in the hierarchy (the root logger if there is none) and re-links any existing descendants to the new logger, so every logger is connected to its parent. Calling the factory function again with the same name returns the same logger object.
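A short sketch of the hierarchy as CPython's implementation builds it, using the illustrative names a.b.c and a.b: a logger created before its ancestors is first attached to the root logger and is re-linked once an intermediate logger appears.

```python
import logging

# Create the deepest logger first; "a" and "a.b" have no loggers yet
abc = logging.getLogger("a.b.c")
print(abc.parent is logging.getLogger())  # True: linked to the root logger

# Creating the intermediate logger re-links the existing descendant
ab = logging.getLogger("a.b")
print(abc.parent is ab)                   # True
print(ab.parent is logging.getLogger())   # True: "a" still has no logger

# The same name always yields the same logger object
print(logging.getLogger("a.b.c") is abc)  # True
print(ab.getChild("c") is abc)            # True
```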

Threshold Logging Level

Each logger has a threshold logging level that determines if a log request should be processed. A logger processes a log request if the numeric value of the requested logging level is greater than or equal to the numeric value of the logger’s threshold logging level. Clients can retrieve and change the threshold logging level of a logger via Logger.getEffectiveLevel() and Logger.setLevel(level) methods, respectively.

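When the factory function creates a logger, the logger’s own threshold logging level is logging.NOTSET (0). Its effective threshold logging level, as reported by Logger.getEffectiveLevel(), is then the level of its nearest ancestor (as determined by its name) whose level is not NOTSET; the root logger defaults to WARNING.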
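To make the inheritance concrete, this sketch (with the hypothetical logger names shop and shop.cart) sets a level only on the parent and inspects the child’s own and effective levels before and after giving the child a level of its own.

```python
import logging

parent = logging.getLogger("shop")
child = logging.getLogger("shop.cart")

parent.setLevel(logging.ERROR)
own_level = child.level                             # 0, i.e. logging.NOTSET
inherited = child.getEffectiveLevel()               # 40: taken from "shop"
warn_enabled = child.isEnabledFor(logging.WARNING)  # False: 30 < 40
print(own_level, inherited, warn_enabled)

# An explicit level on the child now takes precedence over the parent's
child.setLevel(logging.DEBUG)
print(child.getEffectiveLevel(), child.isEnabledFor(logging.WARNING))  # 10 True
```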

Python Logging Methods

Every logger offers the following logging methods to issue log requests.

- Logger.debug(msg, *args, **kwargs)
- Logger.info(msg, *args, **kwargs)
- Logger.warning(msg, *args, **kwargs)
- Logger.error(msg, *args, **kwargs)
- Logger.critical(msg, *args, **kwargs)

Logger.warn() is a deprecated alias of Logger.warning().

Each of these methods is a shorthand to issue log requests with corresponding pre-defined logging levels as the requested logging level.

In addition to the above methods, loggers also offer the following two methods:

- Logger.log(level, msg, *args, **kwargs) issues log requests with explicitly specified logging levels. This method is useful when using custom logging levels.
- Logger.exception(msg, *args, **kwargs) issues log requests with the logging level ERROR that capture the current exception as part of the log entries. Consequently, clients should invoke this method only from an exception handler.
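The sketch below exercises the shorthand methods, Logger.log() with an unnamed custom level, and Logger.exception() inside an except block. The logger name methods_demo, the level 25, and the messages are illustrative; output goes to an in-memory stream so it can be inspected.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

logger = logging.getLogger("methods_demo")
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False

logger.debug("cache size is %d", 128)     # msg % args forms the message
logger.warning("disk usage at %d%%", 91)  # "%%" yields a literal percent sign
logger.log(25, "custom level via log()")  # unnamed level -> "Level 25"

try:
    1 / 0
except ZeroDivisionError:
    # Logged at ERROR with the current traceback appended
    logger.exception("division failed")

print(stream.getvalue())
```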

The msg and args arguments in the above methods are combined (as msg % args) to create the log messages captured by log entries. All of the above methods support the keyword argument exc_info to add exception information to log entries, stack_info to add call stack information, and stacklevel to choose which stack frame is reported as the source of the log request. They also support the keyword argument extra, which is a dictionary, to pass values relevant to filters, handlers, and formatters.
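A minimal sketch of these keyword arguments, assuming Python 3.8 or later (where stacklevel was introduced): extra supplies a user field that the format string references, stacklevel=2 attributes the record to the caller of a logging helper, and exc_info=True attaches the active exception to a WARNING entry. The helper log_for_caller, the user field, and the logger name kwargs_demo are hypothetical.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
# %(user)s comes from the `extra` dict; %(funcName)s reflects stacklevel
handler.setFormatter(
    logging.Formatter("%(levelname)s %(funcName)s user=%(user)s: %(message)s")
)

logger = logging.getLogger("kwargs_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

def log_for_caller(msg, user):
    # stacklevel=2 reports the caller of this helper as the record's source
    logger.info(msg, stacklevel=2, extra={"user": user})

def handle_request():
    log_for_caller("request received", "alice")

handle_request()

try:
    int("not a number")
except ValueError:
    # exc_info=True appends the active exception's traceback at WARNING level
    logger.warning("bad input", exc_info=True, extra={"user": "bob"})

print(stream.getvalue())
```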

When executed, the above methods perform/trigger all of the tasks shown in Figure 1 and the following two tasks:

- After deciding to process a log request based on its logging level and the threshold logging level, the logger creates a LogRecord object to represent the log request in the downstream processing of the request. LogRecord objects capture the msg and args arguments of logging methods, the exception and call stack information, and source code information. They also capture the keys and values in the extra argument of the logging method as fields.
- After every handler of a logger has processed a log request, the handlers of its ancestor loggers process the request (in the order they are encountered walking up the logger hierarchy). The threshold levels and filters of the ancestor loggers themselves are not consulted; only those of their handlers apply. The Logger.propagate field, which is True by default, controls this aspect.
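The following sketch, with the hypothetical logger names svc and svc.db, shows propagation in action. The parent’s own CRITICAL level does not stop a propagated INFO record from reaching its handler, and setting propagate to False on the child keeps later records local.

```python
import io
import logging

parent_stream, child_stream = io.StringIO(), io.StringIO()

parent = logging.getLogger("svc")
parent.setLevel(logging.CRITICAL)  # not consulted for propagated records
parent.addHandler(logging.StreamHandler(parent_stream))
parent.propagate = False           # stop at "svc" so the root logger stays out

child = logging.getLogger("svc.db")
child.setLevel(logging.INFO)
child.addHandler(logging.StreamHandler(child_stream))

child.info("connected")        # handled by the child, then by the parent's handler
child.propagate = False
child.info("query executed")   # handled by the child only

print(repr(child_stream.getvalue()))   # 'connected\nquery executed\n'
print(repr(parent_stream.getvalue()))  # 'connected\n'
```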

Beyond logging levels, filters provide a finer means to filter log requests based on the information in a log record, e.g., ignore log requests issued in a specific class. Clients can add and remove filters to/from loggers using Logger.addFilter(filter) and Logger.removeFilter(filter) methods, respectively.

Python Logging Filters

Any function or callable that accepts a log record argument and returns zero to reject the record and a non-zero value to admit the record can serve as a filter. Any object that offers a method with the signature filter(record: LogRecord) -> int can also serve as a filter.

A subclass of logging.Filter(name: str) that optionally overrides the logging.Filter.filter(record) method can also serve as a filter. Without overriding the filter method, such a filter admits records emitted by the logger that has the same name as the filter or by any of its descendants (based on the names of the loggers and the filter); e.g., a filter named a.b admits records from a.b and a.b.c but not from a.bb. If the name of the filter is empty, then the filter admits all records. If the method is overridden, then it should return a zero value to reject the record and a non-zero value to admit the record.
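A minimal sketch of both filter styles attached to one handler: a plain logging.Filter("app.db") that admits only that logger and its descendants, and a hypothetical RedactFilter subclass that overrides filter() to reject messages mentioning a password. The logger names are illustrative.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(name)s:%(message)s"))

# Name-based filter: admits "app.db" and descendants such as "app.db.pool"
handler.addFilter(logging.Filter("app.db"))

# Subclass overriding filter(): reject records whose message mentions "password"
class RedactFilter(logging.Filter):
    def filter(self, record):
        return "password" not in record.getMessage()

handler.addFilter(RedactFilter())

for name in ("app", "app.db", "app.db.pool", "app.dbx"):
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate entries via ancestors
    logger.addHandler(handler)

logging.getLogger("app").info("ignored: not under app.db")
logging.getLogger("app.db").info("query ok")
logging.getLogger("app.db.pool").info("pool resized")
logging.getLogger("app.dbx").info("ignored: only shares a prefix")
logging.getLogger("app.db").info("password=secret")

print(stream.getvalue(), end="")
```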

Python Logging Handler

The logging.Handler objects perform the final processing of log records, i.e., logging log requests. This final processing often translates into storing the log record, e.g., writing it into system logs or files. It can also mean communicating the log record data to specific entities (e.g., sending an email) or passing the log record to other entities for further processing (e.g., providing the log record to a log collection process or a log collection service).

Like loggers, handlers have a threshold logging level, which can be set via the Handler.setLevel(level) method. They also support filters via the Handler.addFilter(filter) and Handler.removeFilter(filter) methods.

The handlers use their threshold logging level and filters to filter log records for processing. This additional filtering allows context-specific control over logging, e.g., a notifying handler should only process log requests that are critical or from a flaky module.

While processing the log records, handlers format log records into log entries using their formatters. Clients can set the formatter for a handler via Handler.setFormatter(formatter) method. If a handler does not have a formatter, then it uses the default formatter provided by the library.

The logging.handlers module provides a rich collection of 15 useful handlers that cover many common use cases (including the ones mentioned above). So, instantiating and configuring these handlers suffices in many situations.

In situations that warrant custom handlers, developers can extend the Handler class or one of the pre-defined Handler classes by implementing the Handler.emit(record) method to log the provided log record.
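As a sketch of a custom handler, the hypothetical ListHandler below stores formatted entries in a Python list by implementing emit(). It delegates formatting to Handler.format(), which uses the handler’s formatter or falls back to the library default, and reports failures through Handler.handleError() as the built-in handlers do.

```python
import logging

class ListHandler(logging.Handler):
    """Hypothetical handler that keeps formatted log entries in a list."""

    def __init__(self, level=logging.NOTSET):
        super().__init__(level)
        self.entries = []

    def emit(self, record):
        try:
            # format() uses this handler's formatter, or the library default
            self.entries.append(self.format(record))
        except Exception:
            self.handleError(record)

logger = logging.getLogger("custom_handler_demo")
logger.setLevel(logging.DEBUG)
logger.propagate = False

handler = ListHandler(level=logging.WARNING)  # handler-level threshold
logger.addHandler(handler)

logger.info("not stored: below the handler threshold")
logger.error("stored: %s", "disk full")
print(handler.entries)  # ['stored: disk full']
```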

Python Logging Formatter

The handlers use logging.Formatter objects to format a log record into a string-based log entry.

Note: Formatters do not control the creation of log messages.

A formatter works by combining the fields/data in a log record with the user-specified format string.

Unlike handlers, the logging library provides only a basic formatter: by default, a logging.Formatter outputs just the log message, and the default format used by logging.basicConfig() adds only the requested logging level and the logger’s name. So, beyond simple use cases, clients need to create new formatters by creating logging.Formatter objects with the necessary format strings.
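The sketch below formats one hand-built LogRecord three ways: with a default Formatter, with logging.BASIC_FORMAT (the format logging.basicConfig() uses by default), and with a custom format string. The record’s fields and the custom format are illustrative.

```python
import logging

# A hand-built record; normally the logger creates this for you
record = logging.LogRecord(
    name="fmt_demo", level=logging.WARNING, pathname="app.py", lineno=12,
    msg="low memory: %d MB left", args=(64,), exc_info=None,
)

# Default formatter: the message only
print(logging.Formatter().format(record))
# low memory: 64 MB left

# basicConfig's default format: level, logger name, message
print(logging.Formatter(logging.BASIC_FORMAT).format(record))
# WARNING:fmt_demo:low memory: 64 MB left

# Custom format string with padded level name and source location
custom = logging.Formatter("[%(levelname)-8s] %(name)s (%(filename)s:%(lineno)d) %(message)s")
print(custom.format(record))
# [WARNING ] fmt_demo (app.py:12) low memory: 64 MB left
```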