- class sklearn.metrics.ConfusionMatrixDisplay(confusion_matrix, *, display_labels=None)

Confusion Matrix visualization.

It is recommended to use from_estimator or from_predictions to create a ConfusionMatrixDisplay. All parameters are stored as attributes.

Read more in the User Guide.

- Parameters:

- confusion_matrix : ndarray of shape (n_classes, n_classes)

Confusion matrix.

- display_labels : ndarray of shape (n_classes,), default=None

Display labels for plot. If None, display labels are set from 0 to n_classes - 1.

- Attributes:

- im_ : matplotlib AxesImage

Image representing the confusion matrix.

- text_ : ndarray of shape (n_classes, n_classes), dtype=matplotlib Text, or None

Array of matplotlib Text objects. None if include_values is false.

- ax_ : matplotlib Axes

Axes with confusion matrix.

- figure_ : matplotlib Figure

Figure containing the confusion matrix.
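The attributes above only exist once plot() has been called. The sketch below uses an illustrative hand-made 2x2 matrix and the non-interactive Agg backend (an assumption, so it runs without a display) to show them being populated and then reused for further customisation.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove to get an interactive window
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import ConfusionMatrixDisplay

# Illustrative 2 x 2 confusion matrix (made-up counts)
cm = np.array([[5, 2],
               [1, 7]])
disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=["neg", "pos"])

# Before plot() runs, the plotting attributes do not exist yet
print(hasattr(disp, "ax_"))  # False

disp.plot()

# After plot(), the matplotlib artists can be inspected or edited
print(type(disp.im_).__name__)  # AxesImage
print(disp.text_.shape)  # (2, 2)
print(disp.text_[0, 1].get_text())  # 2 (row 0, column 1)
disp.ax_.set_title("Edited through disp.ax_")
```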

See also

confusion_matrix: Compute Confusion Matrix to evaluate the accuracy of a classification.

ConfusionMatrixDisplay.from_estimator: Plot the confusion matrix given an estimator, the data, and the label.

ConfusionMatrixDisplay.from_predictions: Plot the confusion matrix given the true and predicted labels.

Examples

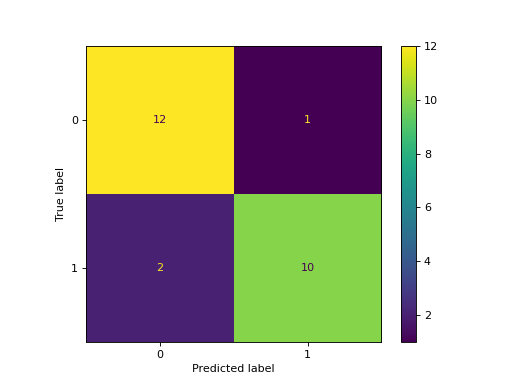

>>> import matplotlib.pyplot as plt
>>> from sklearn.datasets import make_classification
>>> from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay
>>> from sklearn.model_selection import train_test_split
>>> from sklearn.svm import SVC
>>> X, y = make_classification(random_state=0)
>>> X_train, X_test, y_train, y_test = train_test_split(X, y,
...                                                     random_state=0)
>>> clf = SVC(random_state=0)
>>> clf.fit(X_train, y_train)
SVC(random_state=0)
>>> predictions = clf.predict(X_test)
>>> cm = confusion_matrix(y_test, predictions, labels=clf.classes_)
>>> disp = ConfusionMatrixDisplay(confusion_matrix=cm,
...                               display_labels=clf.classes_)
>>> disp.plot()
<...>
>>> plt.show()

Methods

- from_estimator(estimator, X, y, *[, labels, …]): Plot Confusion Matrix given an estimator and some data.

- from_predictions(y_true, y_pred, *[, …]): Plot Confusion Matrix given true and predicted labels.

- plot(*[, include_values, cmap, …]): Plot visualization.

- classmethod from_estimator(estimator, X, y, *, labels=None, sample_weight=None, normalize=None, display_labels=None, include_values=True, xticks_rotation='horizontal', values_format=None, cmap='viridis', ax=None, colorbar=True, im_kw=None, text_kw=None)

Plot Confusion Matrix given an estimator and some data.

Read more in the User Guide.

New in version 1.0.

- Parameters:

- estimator : estimator instance

Fitted classifier or a fitted Pipeline in which the last estimator is a classifier.

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Input values.

- y : array-like of shape (n_samples,)

Target values.

- labels : array-like of shape (n_classes,), default=None

List of labels to index the confusion matrix. This may be used to reorder or select a subset of labels. If None is given, those that appear at least once in y or in the estimator's predictions on X are used in sorted order.

- sample_weight : array-like of shape (n_samples,), default=None

Sample weights.

- normalize : {'true', 'pred', 'all'}, default=None

Whether to normalize the counts displayed in the matrix:

- if 'true', the confusion matrix is normalized over the true conditions (i.e. rows);
- if 'pred', the confusion matrix is normalized over the predicted conditions (i.e. columns);
- if 'all', the confusion matrix is normalized by the total number of samples;
- if None (default), the confusion matrix will not be normalized.

- display_labels : array-like of shape (n_classes,), default=None

Target names used for plotting. By default, labels will be used if it is defined, otherwise the unique labels of y and of the estimator's predictions will be used.

- include_values : bool, default=True

Includes values in confusion matrix.

- xticks_rotation : {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

- values_format : str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

- cmap : str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

- ax : matplotlib Axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

- colorbar : bool, default=True

Whether or not to add a colorbar to the plot.

- im_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

- text_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

New in version 1.2.

- Returns:

- display : ConfusionMatrixDisplay

See also

ConfusionMatrixDisplay.from_predictions: Plot the confusion matrix given the true and predicted labels.

Examples

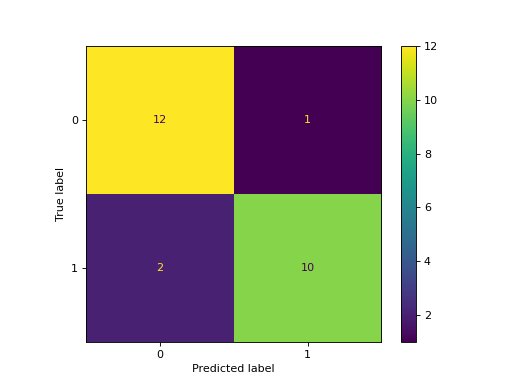

>>> import matplotlib.pyplot as plt
>>> from sklearn.datasets import make_classification
>>> from sklearn.metrics import ConfusionMatrixDisplay
>>> from sklearn.model_selection import train_test_split
>>> from sklearn.svm import SVC
>>> X, y = make_classification(random_state=0)
>>> X_train, X_test, y_train, y_test = train_test_split(
...         X, y, random_state=0)
>>> clf = SVC(random_state=0)
>>> clf.fit(X_train, y_train)
SVC(random_state=0)
>>> ConfusionMatrixDisplay.from_estimator(
...     clf, X_test, y_test)
<...>
>>> plt.show()

- classmethod from_predictions(y_true, y_pred, *, labels=None, sample_weight=None, normalize=None, display_labels=None, include_values=True, xticks_rotation='horizontal', values_format=None, cmap='viridis', ax=None, colorbar=True, im_kw=None, text_kw=None)

Plot Confusion Matrix given true and predicted labels.

Read more in the User Guide.

New in version 1.0.

- Parameters:

- y_true : array-like of shape (n_samples,)

True labels.

- y_pred : array-like of shape (n_samples,)

The predicted labels given by the method predict of a classifier.

- labels : array-like of shape (n_classes,), default=None

List of labels to index the confusion matrix. This may be used to reorder or select a subset of labels. If None is given, those that appear at least once in y_true or y_pred are used in sorted order.

- sample_weight : array-like of shape (n_samples,), default=None

Sample weights.

- normalize : {'true', 'pred', 'all'}, default=None

Whether to normalize the counts displayed in the matrix:

- if 'true', the confusion matrix is normalized over the true conditions (i.e. rows);
- if 'pred', the confusion matrix is normalized over the predicted conditions (i.e. columns);
- if 'all', the confusion matrix is normalized by the total number of samples;
- if None (default), the confusion matrix will not be normalized.

- display_labels : array-like of shape (n_classes,), default=None

Target names used for plotting. By default, labels will be used if it is defined, otherwise the unique labels of y_true and y_pred will be used.

- include_values : bool, default=True

Includes values in confusion matrix.

- xticks_rotation : {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

- values_format : str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

- cmap : str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

- ax : matplotlib Axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

- colorbar : bool, default=True

Whether or not to add a colorbar to the plot.

- im_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

- text_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

New in version 1.2.

- Returns:

- display : ConfusionMatrixDisplay

See also

ConfusionMatrixDisplay.from_estimator: Plot the confusion matrix given an estimator, the data, and the label.

Examples

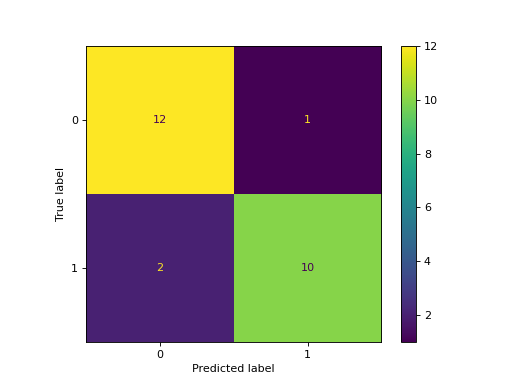

>>> import matplotlib.pyplot as plt
>>> from sklearn.datasets import make_classification
>>> from sklearn.metrics import ConfusionMatrixDisplay
>>> from sklearn.model_selection import train_test_split
>>> from sklearn.svm import SVC
>>> X, y = make_classification(random_state=0)
>>> X_train, X_test, y_train, y_test = train_test_split(
...         X, y, random_state=0)
>>> clf = SVC(random_state=0)
>>> clf.fit(X_train, y_train)
SVC(random_state=0)
>>> y_pred = clf.predict(X_test)
>>> ConfusionMatrixDisplay.from_predictions(
...    y_test, y_pred)
<...>
>>> plt.show()

- plot(*, include_values=True, cmap='viridis', xticks_rotation='horizontal', values_format=None, ax=None, colorbar=True, im_kw=None, text_kw=None)

Plot visualization.

- Parameters:

- include_values : bool, default=True

Includes values in confusion matrix.

- cmap : str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

- xticks_rotation : {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

- values_format : str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

- ax : matplotlib axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

- colorbar : bool, default=True

Whether or not to add a colorbar to the plot.

- im_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

- text_kw : dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

New in version 1.2.

- Returns:

- display : ConfusionMatrixDisplay

Returns a ConfusionMatrixDisplay instance that contains all the information to plot the confusion matrix.
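Because plot() accepts an ax argument and returns the display itself, a single ConfusionMatrixDisplay can be drawn onto axes that you create. The sketch below uses illustrative counts and two side-by-side subplots (an arbitrary layout choice). The Agg backend line is only there so it runs without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove to get an interactive window
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import ConfusionMatrixDisplay

cm = np.array([[9, 1],
               [3, 7]])  # illustrative counts
disp = ConfusionMatrixDisplay(cm, display_labels=["neg", "pos"])

# Draw the same display twice, on axes supplied by the caller
fig, (ax_left, ax_right) = plt.subplots(1, 2, figsize=(8, 4))
disp.plot(ax=ax_left, cmap="Blues", colorbar=False)
disp.plot(ax=ax_right, include_values=False, xticks_rotation="vertical")

# The attributes describe the most recent plot() call
print(disp.ax_ is ax_right)  # True
print(disp.text_)  # None, because include_values=False
plt.show()
```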

Examples using sklearn.metrics.ConfusionMatrixDisplay

class sklearn.metrics.ConfusionMatrixDisplay(confusion_matrix, *, display_labels=None)

Confusion matrix visualization.

It is recommended to use plot_confusion_matrix to create a ConfusionMatrixDisplay. All parameters are stored as attributes. Read more in the User Guide. (plot_confusion_matrix was deprecated in version 1.0 and removed in 1.2; newer versions use from_estimator or from_predictions instead.)

- Parameters

- confusion_matrix : ndarray of shape (n_classes, n_classes)

Confusion matrix.

- display_labels : ndarray of shape (n_classes,), default=None

Display labels for plot. If None, display labels are set from 0 to n_classes - 1.

- Attributes

- im_ : matplotlib AxesImage

Image representing the confusion matrix.

- text_ : ndarray of shape (n_classes, n_classes), dtype=matplotlib Text, or None

Array of matplotlib Text objects. None if include_values is false.

- ax_ : matplotlib Axes

Axes with confusion matrix.

- figure_ : matplotlib Figure

Figure containing the confusion matrix.

Examples

>>> from sklearn.datasets import make_classification
>>> from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay
>>> from sklearn.model_selection import train_test_split
>>> from sklearn.svm import SVC
>>> X, y = make_classification(random_state=0)
>>> X_train, X_test, y_train, y_test = train_test_split(X, y,
...                                                     random_state=0)
>>> clf = SVC(random_state=0)
>>> clf.fit(X_train, y_train)
SVC(random_state=0)
>>> predictions = clf.predict(X_test)
>>> cm = confusion_matrix(y_test, predictions, labels=clf.classes_)
>>> disp = ConfusionMatrixDisplay(confusion_matrix=cm,
...                               display_labels=clf.classes_)
>>> disp.plot()

Methods

- plot(*[, include_values, cmap, …]): Plot visualization.

- plot(*, include_values=True, cmap='viridis', xticks_rotation='horizontal', values_format=None, ax=None, colorbar=True)

Plot visualization.

- Parameters

- include_values : bool, default=True

Includes values in confusion matrix.

- cmap : str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

- xticks_rotation : {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

- values_format : str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

- ax : matplotlib axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

- colorbar : bool, default=True

Whether or not to add a colorbar to the plot.

- Returns

- display : ConfusionMatrixDisplay

Examples using sklearn.metrics.ConfusionMatrixDisplay

scikit-learn 1.1



Example of confusion matrix usage to evaluate the quality of the output of a classifier on the iris data set. The diagonal elements represent the number of points for which the predicted label is equal to the true label, while off-diagonal elements are those that are mislabeled by the classifier. The higher the diagonal values of the confusion matrix the better, indicating many correct predictions.

The figures show the confusion matrix with and without normalization by class support size (number of elements in each class). This kind of normalization can be interesting in case of class imbalance to have a more visual interpretation of which class is being misclassified.

Here the results are not as good as they could be as our choice for the regularization parameter C was not the best. In real-life applications this parameter is usually chosen by hyper-parameter tuning (see Tuning the hyper-parameters of an estimator).

Confusion matrix, without normalization
[[13  0  0]
 [ 0 10  6]
 [ 0  0  9]]
Normalized confusion matrix
[[1.   0.   0.  ]
 [0.   0.62 0.38]
 [0.   0.   1.  ]]
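The normalized matrix can be checked by hand: normalize="true" divides each row of counts by that class's support, i.e. its row sum. A small NumPy sketch using the raw counts printed above:

```python
import numpy as np

# Raw counts from the "without normalization" output
cm = np.array([[13, 0, 0],
               [0, 10, 6],
               [0, 0, 9]])

# Support of each true class = row sum: 13, 16 and 9 samples
support = cm.sum(axis=1, keepdims=True)
cm_normalized = cm / support  # row 2 becomes 10/16 and 6/16

np.set_printoptions(precision=2)
print(cm_normalized)
```

With precision=2, this prints the same normalized matrix as the example's output.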

import numpy as np
import matplotlib.pyplot as plt

from sklearn import svm, datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import ConfusionMatrixDisplay

# import some data to play with
iris = datasets.load_iris()
X = iris.data
y = iris.target
class_names = iris.target_names

# Split the data into a training set and a test set
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Run classifier, using a model that is too regularized (C too low) to see
# the impact on the results
classifier = svm.SVC(kernel="linear", C=0.01).fit(X_train, y_train)

np.set_printoptions(precision=2)

# Plot non-normalized and normalized confusion matrices
titles_options = [
    ("Confusion matrix, without normalization", None),
    ("Normalized confusion matrix", "true"),
]
for title, normalize in titles_options:
    disp = ConfusionMatrixDisplay.from_estimator(
        classifier,
        X_test,
        y_test,
        display_labels=class_names,
        cmap=plt.cm.Blues,
        normalize=normalize,
    )
    disp.ax_.set_title(title)

    print(title)
    print(disp.confusion_matrix)

plt.show()


- Use Matplotlib to Plot Confusion Matrix in Python

- Use Seaborn to Plot Confusion Matrix in Python

- Use Pretty Confusion Matrix to Plot Confusion Matrix in Python

This article will discuss plotting a confusion matrix in Python using different library packages.

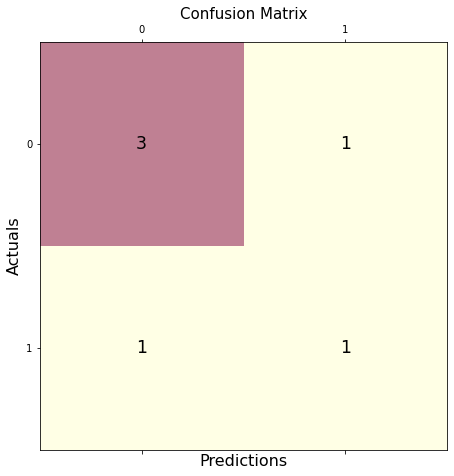

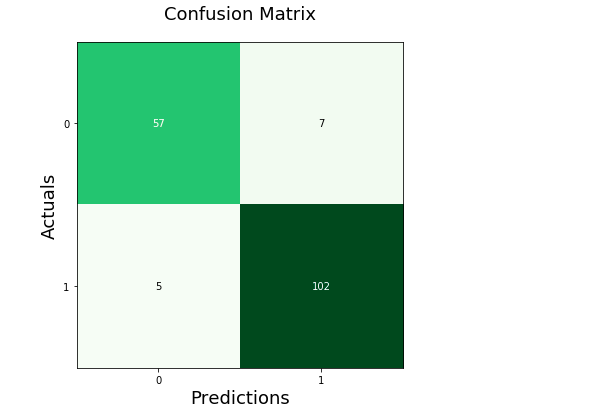

Use Matplotlib to Plot Confusion Matrix in Python

This program shows how to plot a confusion matrix using Matplotlib.

Below are the two library packages we need to plot our confusion matrix.

from sklearn.metrics import confusion_matrix

import matplotlib.pyplot as plt

After importing the necessary packages, we need to create the confusion matrix from the given data.

First, we declare the variables y_true and y_pred. y_true is filled with the actual values, while y_pred is filled with the predicted values.

y_true = ["bat", "ball", "ball", "bat", "bat", "bat"]

y_pred = ["bat", "bat", "ball", "ball", "bat", "bat"]

We then declare a variable mat_con to store the matrix. Below is the syntax we will use to create the confusion matrix.

mat_con = confusion_matrix(y_true, y_pred, labels=["bat", "ball"])

This creates a confusion matrix from the two arguments, y_true and y_pred. The labels argument lists the two classes, bat and ball, and fixes their order: row and column 0 correspond to bat, and row and column 1 to ball.

To plot the confusion matrix, we also need to set up the figure and axes that will hold the plot.

fig, px = plt.subplots(figsize=(7.5, 7.5))
px.matshow(mat_con, cmap=plt.cm.YlOrRd, alpha=0.5)

plt.subplots() creates a figure and an empty set of axes, px, while figsize=(7.5, 7.5) sets the width and height of the figure in inches. Equal width and height give a square figure.

px.matshow draws the confusion matrix on the empty axes, and cmap=plt.cm.YlOrRd colors the cells with a yellow-orange-red gradient.

alpha=0.5 sets the transparency of the colors: lower values make the yellows and reds paler.

Then, we run a nested loop to write each count into its cell of the 2x2 matrix.

for m in range(mat_con.shape[0]):
    for n in range(mat_con.shape[1]):
        px.text(x=n, y=m, s=mat_con[m, n], va='center', ha='center', size='xx-large')

for m in range(mat_con.shape[0]): loops over the rows (shape[0] is the number of rows), and for n in range(mat_con.shape[1]): loops over the columns inside it (shape[1] is the number of columns).

px.text(x=n, y=m, s=mat_con[m, n], va='center', ha='center', size='xx-large') writes the value of row m, column n at the center of that cell. Because matshow puts the columns along the x-axis and the rows along the y-axis, the column index n is the x-coordinate and the row index m is the y-coordinate.
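An equivalent way to write the loop, sketched below, uses np.ndenumerate, which yields each (row, column) index pair together with its value; naming them row and col makes it harder to swap the x and y coordinates. The Agg backend line is an assumption so the sketch runs without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove to get an interactive window
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import confusion_matrix

y_true = ["bat", "ball", "ball", "bat", "bat", "bat"]
y_pred = ["bat", "bat", "ball", "ball", "bat", "bat"]
mat_con = confusion_matrix(y_true, y_pred, labels=["bat", "ball"])

fig, px = plt.subplots(figsize=(7.5, 7.5))
px.matshow(mat_con, cmap=plt.cm.YlOrRd, alpha=0.5)

# (row, col) index the matrix; on the plot, x is the column and y is the row
for (row, col), value in np.ndenumerate(mat_con):
    px.text(x=col, y=row, s=str(value), va="center", ha="center", size="xx-large")

plt.show()
```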

In the final step, we use plt.xlabel() and plt.ylabel() to label the axes, and we set the plot title with plt.title().

plt.xlabel('Predictions', fontsize=16)

plt.ylabel('Actuals', fontsize=16)

plt.title('Confusion Matrix', fontsize=15)

plt.show()

Putting it all together, we get the complete code below.

# imports
from sklearn.metrics import confusion_matrix
import matplotlib.pyplot as plt

# creates confusion matrix
y_true = ["bat", "ball", "ball", "bat", "bat", "bat"]
y_pred = ["bat", "bat", "ball", "ball", "bat", "bat"]
mat_con = confusion_matrix(y_true, y_pred, labels=["bat", "ball"])

# Setting the attributes
fig, px = plt.subplots(figsize=(7.5, 7.5))
px.matshow(mat_con, cmap=plt.cm.YlOrRd, alpha=0.5)
for m in range(mat_con.shape[0]):
    for n in range(mat_con.shape[1]):
        # x is the column index, y is the row index
        px.text(x=n, y=m, s=mat_con[m, n], va='center', ha='center', size='xx-large')

# Sets the labels
plt.xlabel('Predictions', fontsize=16)
plt.ylabel('Actuals', fontsize=16)
plt.title('Confusion Matrix', fontsize=15)
plt.show()

Output:

Use Seaborn to Plot Confusion Matrix in Python

Using Seaborn allows us to create different-looking plots without dwelling much on attributes or writing nested loops.

Below is the library package needed to plot our confusion matrix.

import seaborn as sebrn

As in the previous program, we create the confusion matrix using the confusion_matrix() method.

To create the plot, we use the syntax below.

fx = sebrn.heatmap(conf_matrix, annot=True, cmap='turbo')

We use the seaborn heatmap plot. annot=True writes the data value into each cell; with annot=False, the plot shows only the colors, without values.

cmap='turbo' sets the color map; any matplotlib colormap name can be used here.

The code below labels the axes and sets the title.

fx.set_title('Plotting Confusion Matrix using Seaborn\n\n')
fx.set_xlabel('\nValues model predicted')
fx.set_ylabel('True Values ')

Lastly, we label the boxes with the following syntax. This step is optional, but without it the rows and columns only show the indices 0 and 1, which makes the matrix harder to read.

fx.xaxis.set_ticklabels(['bat', 'ball'])
fx.yaxis.set_ticklabels(['bat', 'ball'])

Let’s put everything together into a working program.

# imports
import seaborn as sebrn
from sklearn.metrics import confusion_matrix
import matplotlib.pyplot as plt

y_true = ["bat", "ball", "ball", "bat", "bat", "bat"]
y_pred = ["bat", "bat", "ball", "ball", "bat", "bat"]
conf_matrix = confusion_matrix(y_true, y_pred, labels=["bat", "ball"])

# Using Seaborn heatmap to create the plot
fx = sebrn.heatmap(conf_matrix, annot=True, cmap='turbo')

# labels the title and x, y axis of plot
fx.set_title('Plotting Confusion Matrix using Seaborn\n\n')
fx.set_xlabel('Predicted Values')
fx.set_ylabel('Actual Values ')

# labels the boxes, in the same order as the labels argument above
fx.xaxis.set_ticklabels(['bat', 'ball'])
fx.yaxis.set_ticklabels(['bat', 'ball'])

plt.show()

Output:

Use Pretty Confusion Matrix to Plot Confusion Matrix in Python

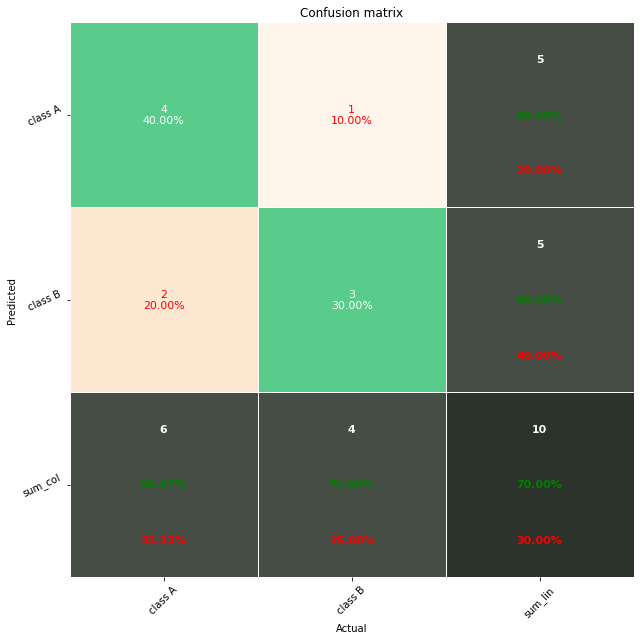

The Pretty Confusion Matrix is a Python library created to plot a stunning confusion matrix annotated with many metric-related values. This Python library is useful when you need a highly detailed confusion matrix for your data sets.

In the program below, we plot a confusion matrix from two lists: true_values and predicted_values. As we can see, plotting with Pretty Confusion Matrix is simpler than with the other plotting libraries.

from pretty_confusion_matrix import pp_matrix_from_data

true_values = [1, 0, 0, 1, 0, 0, 1, 0, 0, 1]
predicted_values = [1, 0, 0, 1, 0, 1, 0, 0, 1, 1]

cmap = 'PuRd'
pp_matrix_from_data(true_values, predicted_values, cmap=cmap)

Output:

Visualizations play an essential role in the exploratory data analysis activity of machine learning.

You can compute a confusion matrix using the confusion_matrix() method from the sklearn.metrics package and then plot it.

Why Confusion Matrix?

After you create a machine learning model, accuracy is a common metric used to evaluate it. However, accuracy is not suitable in every case because it can be misleading. An accuracy of 99% looks good as a percentage, but consider a machine learning model used for fraud detection or drug consumption detection.

In such critical scenarios, even a 1% failure rate can have a significant impact.

For example, if a model predicts a $10,000 fraudulent transaction as Not Fraud, it is not a good model and cannot be used in production.

In the drug consumption model, consider a case where the model predicts that a person has consumed the drug when they actually have not. Because of this false prediction, the person may be imprisoned for a crime they did not commit.

In such scenarios, you need a better metric than accuracy to validate the machine learning model.

This is where the confusion matrix comes into the picture.
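A small, made-up fraud example shows the problem: a "model" that never predicts fraud still reaches 99% accuracy, and only the confusion matrix reveals that all 10 fraudulent transactions were missed. The counts are hypothetical.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical data: 990 legitimate (0) and 10 fraudulent (1) transactions
y_true = np.array([0] * 990 + [1] * 10)

# A useless "model" that labels every transaction as legitimate
y_pred = np.zeros_like(y_true)

print(accuracy_score(y_true, y_pred))  # 0.99
print(confusion_matrix(y_true, y_pred))
# [[990   0]
#  [ 10   0]]   <- bottom-left: 10 frauds predicted as Not Fraud
```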

In this tutorial, you’ll learn what a confusion matrix is and how to plot a confusion matrix for binary classification models and multiclass classification models.

What is Confusion Matrix?

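A confusion matrix is a matrix that lets you visualize the performance of classification machine learning models. In scikit-learn, each row corresponds to a true class and each column to a predicted class, so the entry in row i and column j counts the samples of class i that were predicted as class j. With this visualization, you can get a better idea of how your machine learning model is performing.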
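To make the definition concrete, the sketch below builds a confusion matrix by hand from illustrative 3-class labels and checks it against sklearn's confusion_matrix().

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Illustrative labels for a 3-class problem (classes 0, 1, 2)
y_true = np.array([0, 1, 2, 2, 1, 0, 2, 1])
y_pred = np.array([0, 2, 2, 1, 1, 0, 2, 1])

# Cell (i, j) counts samples whose true class is i and predicted class is j
n_classes = 3
manual = np.zeros((n_classes, n_classes), dtype=int)
for t, p in zip(y_true, y_pred):
    manual[t, p] += 1

print(manual)
print(np.array_equal(manual, confusion_matrix(y_true, y_pred)))  # True
```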

Creating Binary Class Classification Model

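In this section, you’ll create a classification model that predicts whether a patient’s breast tumour is malignant or benign. In the sklearn dataset, these two output classes are encoded as 0 (malignant) and 1 (benign); the plots below label them False and True.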

The breast cancer dataset is available in the sklearn dataset library.

It contains a total of 569 rows. Each row includes 30 numeric features and one output class. If you want to manipulate or visualize the sklearn dataset, you can convert it into a pandas DataFrame and use the pandas DataFrame functionality.
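A quick sketch for checking the dataset’s shape and how its two classes are encoded:

```python
import numpy as np
from sklearn.datasets import load_breast_cancer

breastCancer = load_breast_cancer()

print(breastCancer.data.shape)  # (569, 30): rows x numeric features
print(breastCancer.target_names)  # ['malignant' 'benign'], i.e. classes 0 and 1
print(np.bincount(breastCancer.target))  # [212 357]: samples per class
```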

To create the model, you’ll load the sklearn dataset, split it into training and test sets, and fit the training data to the KNeighborsClassifier model.

After creating the model, you can use the test data to predict the values and check how the model is performing.

You can use the actual output classes from your test data and the predicted output returned by the predict() method to plot the confusion matrix and evaluate the model accuracy.

Use the below snippet to create the model.

Snippet

import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier as KNN

breastCancer = load_breast_cancer()

X = breastCancer.data
y = breastCancer.target

# Split the dataset into train and test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

knn = KNN(n_neighbors=3)

# train the model
knn.fit(X_train, y_train)

print('Model is Created')

The KNeighborsClassifier model is created for the breast cancer training data.

Output

Model is Created

To test the model, you can use the test data obtained from the train-test split and predict the output. Then, you’ll have the predicted values.

Snippet

y_pred = knn.predict(X_test)

y_pred

Output

array([0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1,
       0, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 1,
       1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1,
       0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0,
       1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 1,
       0, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0,
       0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1,
       1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1,
       0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1,
       0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1,
       0, 1, 0, 0, 1, 1, 0, 1])

Now use the predicted classes and the actual output classes from the test data to visualize the confusion matrix.

You’ll learn how to plot the confusion matrix for the binary classification model in the next section.

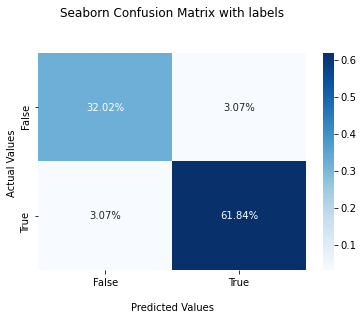

Plot Confusion Matrix for Binary Classes

You can create the confusion matrix using the confusion_matrix() method from the sklearn.metrics package. For binary classes 0 and 1, confusion_matrix() returns a 2x2 array laid out as [[True Negatives, False Positives], [False Negatives, True Positives]].
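For binary labels 0 and 1, the four counts can be unpacked directly with ravel(), which reads the 2x2 matrix row by row. A sketch with made-up labels:

```python
from sklearn.metrics import confusion_matrix

# Made-up binary labels: 0 = negative class, 1 = positive class
y_true = [0, 1, 0, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 1]

cm = confusion_matrix(y_true, y_pred)  # [[TN, FP], [FN, TP]]
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 1 2 1 2
```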

Snippet

from sklearn.metrics import confusion_matrix

# Generate the confusion matrix
cf_matrix = confusion_matrix(y_test, y_pred)

print(cf_matrix)

Output

[[ 73   7]
 [  7 141]]

Once you have created the confusion matrix, you can use the heatmap() method available in the seaborn library to plot it.

The seaborn heatmap() method accepts one mandatory parameter and a few other optional parameters.

- data: A rectangular dataset that can be coerced into a 2D array. Here, you can pass the confusion matrix you already have.
- annot=True: To write the data value in each cell of the printed matrix. By default, this is False.
- cmap='Blues': The name of a matplotlib color map. Here, we’ve created the plot using blue color shades.

The heatmap() method returns the matplotlib axes, which can be stored in a variable. Here, you’ll store them in the variable ax. Now, you can set the title, the x-axis and y-axis labels, and the tick labels for both axes.

- Title: Used to label the complete image. Use the set_title() method to set the title.

- Axes labels: Used to name the x axis or y axis. Use set_xlabel() to set the x-axis label and set_ylabel() to set the y-axis label.

- Tick labels: Used to denote the data points on the axes. You can pass the tick labels in a list, and they must follow the sorted order of the class values, because when no labels argument is given, confusion_matrix() orders its rows and columns by the sorted class values. Use xaxis.set_ticklabels() to set the tick labels for the x-axis and yaxis.set_ticklabels() to set the tick labels for the y-axis.
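The sorted-order rule can be checked with illustrative string labels: confusion_matrix() places "no" before "yes", so tick labels for this matrix must be given in that order too.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Illustrative labels; no `labels=` argument, so the classes are sorted
y_true = ["yes", "no", "yes", "yes", "no"]
y_pred = ["yes", "yes", "no", "yes", "no"]

class_order = np.unique(y_true + y_pred)  # sorted unique classes
cm = confusion_matrix(y_true, y_pred)

print(class_order)  # ['no' 'yes']
print(cm)  # row/column 0 is "no", row/column 1 is "yes"
```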

Finally, use the plt.show() method to display the confusion matrix.

Use the below snippet to create a confusion matrix heatmap, set the title and the axis labels, set the tick labels, and plot it.

Snippet

import matplotlib.pyplot as plt
import seaborn as sns

ax = sns.heatmap(cf_matrix, annot=True, cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n')
ax.set_xlabel('\nPredicted Values')
ax.set_ylabel('Actual Values ')

## Tick labels - list must follow the sorted order of the classes
ax.xaxis.set_ticklabels(['False', 'True'])
ax.yaxis.set_ticklabels(['False', 'True'])

## Display the visualization of the Confusion Matrix.
plt.show()

Output

Alternatively, if you want to avoid seaborn, you can plot the confusion matrix using the ConfusionMatrixDisplay.from_predictions() method available in the sklearn library itself.

Next, you’ll learn how to plot a confusion matrix with percentages.

Plot Confusion Matrix for Binary Classes With Percentage

The objective of creating and plotting the confusion matrix is to check how well the machine learning model performs. The results are often easier to read as percentages rather than raw counts. In this section, you’ll learn how to plot a confusion matrix for binary classes with percentages.

To plot the confusion matrix with percentages, you first need to calculate the percentage of True Positives, False Positives, False Negatives, and True Negatives. You can calculate each percentage by dividing the value by the sum of all values.

Using the np.sum() method, you can sum all values in the confusion matrix.

Then pass the percentage of each value as data to the heatmap() method by using the statement cf_matrix/np.sum(cf_matrix).
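As a worked example, here is the percentage calculation applied to the counts printed earlier, [[73, 7], [7, 141]]:

```python
import numpy as np

# Counts from the binary breast cancer example: [[TN, FP], [FN, TP]]
cf_matrix = np.array([[73, 7],
                      [7, 141]])

total = np.sum(cf_matrix)  # 228 test samples
shares = cf_matrix / total  # each cell as a fraction of all samples

print(total)  # 228
print(["{0:.2%}".format(v) for v in shares.flatten()])
# ['32.02%', '3.07%', '3.07%', '61.84%']
```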

Use the below snippet to plot the confusion matrix with percentages.

Snippet

ax = sns.heatmap(cf_matrix/np.sum(cf_matrix), annot=True,
                 fmt='.2%', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n')
ax.set_xlabel('\nPredicted Values')
ax.set_ylabel('Actual Values ')

## Tick labels - list must follow the sorted order of the classes
ax.xaxis.set_ticklabels(['False', 'True'])
ax.yaxis.set_ticklabels(['False', 'True'])

## Display the visualization of the Confusion Matrix.
plt.show()

Output

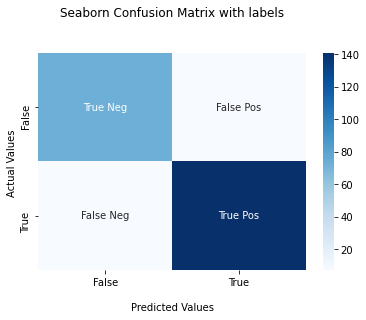

Plot Confusion Matrix for Binary Classes With Labels

In this section, you’ll plot a confusion matrix for binary classes with the labels True Positives, False Positives, False Negatives, and True Negatives.

You need to create a list of the labels and convert it into an array of shape (2, 2) using np.asarray() followed by reshape(2, 2). Then, pass this array of labels to the annot parameter and set fmt='' so that seaborn writes the strings as they are. This will plot the confusion matrix with the labels as annotations.

Use the below snippet to plot the confusion matrix with labels.

Snippet

labels = ['True Neg', 'False Pos', 'False Neg', 'True Pos']
labels = np.asarray(labels).reshape(2, 2)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n')
ax.set_xlabel('\nPredicted Values')
ax.set_ylabel('Actual Values ')

## Tick labels - list must follow the sorted order of the classes
ax.xaxis.set_ticklabels(['False', 'True'])
ax.yaxis.set_ticklabels(['False', 'True'])

## Display the visualization of the Confusion Matrix.
plt.show()

Output

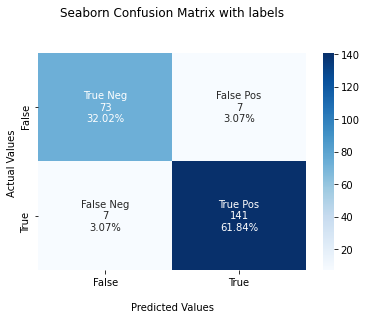

Plot Confusion Matrix for Binary Classes With Labels And Percentages

In this section, you’ll learn how to plot a confusion matrix with labels, counts, and percentages.

You can use this to see the share of each label, for example, what percentage of the predictions are True Positives, False Positives, False Negatives, and True Negatives.

For this, first create a list of label names, then a list with the count of each cell and another list with the percentage of each cell.

Then you can zip these lists to build the annotations. Zipping pairs up the items at the same position in each list, so each annotation combines one name, one count, and one percentage. Then convert the resulting list into an array using the np.asarray() method and reshape it to 2x2.

Then pass the final array to the annot parameter. This will create a confusion matrix with the label, count, and percentage information for each cell.

Use the below snippet to visualize the confusion matrix with all the details.

Snippet

group_names = ['True Neg','False Pos','False Neg','True Pos']

group_counts = ["{0:0.0f}".format(value) for value in

cf_matrix.flatten()]

group_percentages = ["{0:.2%}".format(value) for value in

cf_matrix.flatten()/np.sum(cf_matrix)]

labels = [f"{v1}\n{v2}\n{v3}" for v1, v2, v3 in

zip(group_names,group_counts,group_percentages)]

labels = np.asarray(labels).reshape(2,2)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Values')

ax.set_ylabel('Actual Values ');

## Tick labels - list must follow the sorted class order (False, True)

ax.xaxis.set_ticklabels(['False','True'])

ax.yaxis.set_ticklabels(['False','True'])

## Display the visualization of the Confusion Matrix.

plt.show()

Output

This is how you can create a confusion matrix for the binary classification machine learning model.
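The counts-and-percentages snippet can also be wrapped in a small helper so its output can be checked without drawing anything. A sketch, assuming a 2x2 NumPy confusion matrix; the function name annotate_binary and the sample counts are hypothetical:

```python
import numpy as np

def annotate_binary(cf_matrix):
    """Hypothetical helper: build 2x2 'name / count / percent' annotation strings."""
    group_names = ['True Neg', 'False Pos', 'False Neg', 'True Pos']
    group_counts = [f"{value:0.0f}" for value in cf_matrix.flatten()]
    group_percentages = [f"{value:.2%}" for value in cf_matrix.flatten() / np.sum(cf_matrix)]
    # zip() pairs the i-th name, count, and percentage; each tuple becomes one cell label
    labels = [f"{n}\n{c}\n{p}" for n, c, p in zip(group_names, group_counts, group_percentages)]
    return np.asarray(labels).reshape(2, 2)

# Hypothetical 2x2 counts: [[TN, FP], [FN, TP]]
cf_matrix = np.array([[5, 2],
                      [1, 4]])
labels = annotate_binary(cf_matrix)
print(labels)
```

Pass the result to sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues') exactly as in the snippet above.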

Next, you’ll learn about creating a confusion matrix for a classification model with multiple output classes.

Creating Classification Model For Multiple Classes

In this section, you’ll create a classification model for multiple output classes. This is also called multiclass classification.

You’ll be using the iris dataset available in the sklearn.datasets module.

It contains 150 rows. Each row includes four numeric features and one output class, which is one of three Iris flower types: Iris Setosa, Iris Versicolour, or Iris Virginica.

To create the model, you’ll load the dataset, split it into training and test sets, and fit a KNeighborsClassifier model on the training data.

After creating the model, you can use the test data to predict the values and check how the model is performing.

You can use the actual output classes from your test data and the predicted output returned by the predict() method to plot the confusion matrix and evaluate the model accuracy.

Use the below snippet to create the model.

Snippet

import numpy as np

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier as KNN

iris = load_iris()

X = iris.data

y = iris.target

# Split dataset into train and test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.4, random_state = 42)

knn = KNN(n_neighbors = 3)

# train the model

knn.fit(X_train, y_train)

print('Model is Created')

Output

Model is Created

Now the model is created.

Use the test data from the train test split and predict the output value using the predict() method as shown below.

Snippet

y_pred = knn.predict(X_test)

y_pred

You’ll have the predicted output as an array. The values 0, 1, and 2 show the predicted category of the test data.

Output

array([1, 0, 2, 1, 1, 0, 1, 2, 1, 1, 2, 0, 0, 0, 0, 1, 2, 1, 1, 2, 0, 2,
       0, 2, 2, 2, 2, 2, 0, 0, 0, 0, 1, 0, 0, 2, 1, 0, 0, 0, 2, 1, 1, 0,
       0, 1, 1, 2, 1, 2, 1, 2, 1, 0, 2, 1, 0, 0, 0, 1])

Now, you can use the predicted data available in y_pred to create a confusion matrix for multiple classes.

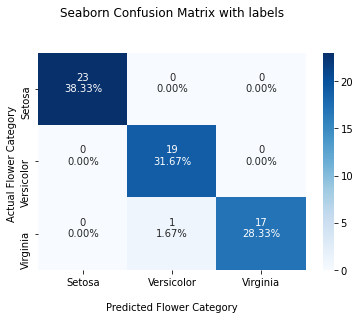

Plot Confusion matrix for Multiple Classes

In this section, you’ll learn how to plot a confusion matrix for multiple classes.

You can use the confusion_matrix() function from sklearn.metrics to create a confusion matrix. It’ll contain three rows and three columns: each row is an actual flower category and each column a predicted flower category, both in ascending label order (0, 1, 2).
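A quick sketch on a handful of hypothetical labels (not the iris predictions) shows how to read such a matrix: entry [i, j] counts samples whose actual class is i and whose predicted class is j, so the diagonal holds the correct predictions and accuracy is the diagonal sum divided by the total.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical actual and predicted classes (0, 1, 2)
y_true = [2, 0, 1, 2, 1, 0]
y_pred = [2, 0, 2, 2, 1, 0]

cm = confusion_matrix(y_true, y_pred)
print(cm)

# cm[1, 2] counts samples that are actually class 1 but were predicted as class 2
print(cm[1, 2])

# Correct predictions sit on the diagonal
accuracy = np.trace(cm) / cm.sum()
print(round(accuracy, 3))
```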

Snippet

from sklearn.metrics import confusion_matrix

#Get the confusion matrix

cf_matrix = confusion_matrix(y_test, y_pred)

print(cf_matrix)

Output

[[23  0  0]
 [ 0 19  0]
 [ 0  1 17]]

This output shows the confusion matrix of actual versus predicted flower category counts.

You can use this matrix to plot the confusion matrix using the seaborn library, as shown below.

Snippet

import seaborn as sns

import matplotlib.pyplot as plt

ax = sns.heatmap(cf_matrix, annot=True, cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Tick labels - list must follow the class order 0, 1, 2 (iris.target_names)

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

## Display the visualization of the Confusion Matrix.

plt.show()

Output

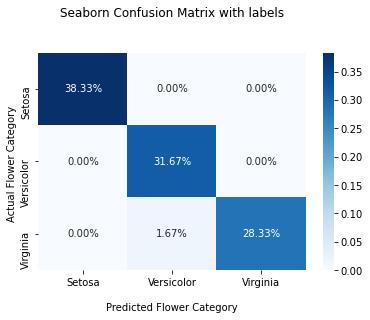

Plot Confusion Matrix for Multiple Classes With Percentage

In this section, you’ll plot the confusion matrix for multiple classes with the percentage in each cell. You calculate the percentages by dividing every value in the confusion matrix by the sum of all values, so all cells together add up to 100%.

Use the below snippet to plot the confusion matrix for multiple classes with percentages.

Snippet

ax = sns.heatmap(cf_matrix/np.sum(cf_matrix), annot=True,

fmt='.2%', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Tick labels - list must follow the class order 0, 1, 2 (iris.target_names)

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

## Display the visualization of the Confusion Matrix.

plt.show()

Output
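If you use scikit-learn 0.22 or later, confusion_matrix() can do this division itself through its normalize parameter. A sketch on hypothetical labels comparing normalize='all' with the manual cf_matrix/np.sum(cf_matrix) used above, plus normalize='true', which divides each row by its own total instead:

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical actual and predicted classes
y_true = [0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 2, 2]

counts = confusion_matrix(y_true, y_pred)
share_of_all = confusion_matrix(y_true, y_pred, normalize='all')
share_of_row = confusion_matrix(y_true, y_pred, normalize='true')

# Dividing by the grand total gives the same matrix as normalize='all'
print(np.allclose(share_of_all, counts / np.sum(counts)))

# With normalize='true', each row (actual class) sums to 1
print(share_of_row.sum(axis=1))
```

normalize='true' answers a different question (what fraction of each actual class was predicted as each class), so pick the option that matches what the percentages should mean.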

Plot Confusion Matrix for Multiple Classes With Numbers And Percentages

In this section, you’ll learn how to plot a confusion matrix with counts and percentages for multiple classes.

You can use this to see what percentage of all predictions falls into each cell, for example, what percentage of the predictions pairs each actual flower category with each predicted one.

For this, first create one list with the count in each cell and another list with each cell's percentage of the total.

Then zip these lists together to create concatenated labels. zip() pairs the items at the same position in each list, and each pair is joined into one annotation string. Then convert this list into an array using the np.asarray() method and reshape it to (3, 3).

Pass this final array to the annot parameter. This creates a confusion matrix with the count and percentage information for each cell.

Use the below snippet to visualize the confusion matrix with all the details.

Snippet

# No group names here: TN/FP/FN/TP only make sense for binary classification

group_counts = ["{0:0.0f}".format(value) for value in

cf_matrix.flatten()]

group_percentages = ["{0:.2%}".format(value) for value in

cf_matrix.flatten()/np.sum(cf_matrix)]

labels = [f"{v1}\n{v2}" for v1, v2 in

zip(group_counts,group_percentages)]

labels = np.asarray(labels).reshape(3,3)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Tick labels - list must follow the class order 0, 1, 2 (iris.target_names)

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginica'])

## Display the visualization of the Confusion Matrix.

plt.show()

Output

This is how you can plot a confusion matrix for multiple classes with percentages and numbers.
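The snippet above hard-codes reshape(3,3). A small helper, hypothetical and not part of seaborn or scikit-learn, can reshape to whatever shape the matrix has, so the same code works for both the binary and the three-class cases:

```python
import numpy as np

def count_percent_labels(cf_matrix):
    """Hypothetical helper: one 'count, newline, percent' string per cell."""
    cf_matrix = np.asarray(cf_matrix)
    total = cf_matrix.sum()
    labels = [f"{count:0.0f}\n{count / total:.2%}" for count in cf_matrix.flatten()]
    # Reshape to the matrix's own shape instead of hard-coding (2, 2) or (3, 3)
    return np.asarray(labels).reshape(cf_matrix.shape)

# The 3x3 iris confusion matrix printed earlier
cf_matrix = np.array([[23, 0, 0],
                      [0, 19, 0],
                      [0, 1, 17]])
labels = count_percent_labels(cf_matrix)
print(labels[2, 1])
```

The result plugs into sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues') unchanged.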

Plot Confusion Matrix Without Classifier

To plot the confusion matrix without a classifier model, refer to this StackOverflow answer.

Conclusion

To summarize, you’ve learned how to plot a confusion matrix for the machine learning model with binary output classes and multiple output classes.

You’ve also learned how to annotate the confusion matrix with more details such as labels, count of each label, and percentage of each label for better visualization.

If you’ve any questions, comment below.


In this short tutorial, you’ll see a full example of a Confusion Matrix in Python.

Topics to be reviewed:

- Creating a Confusion Matrix using pandas

- Displaying the Confusion Matrix using seaborn

- Getting additional stats via pandas_ml

- Working with non-numeric data

Creating a Confusion Matrix in Python using Pandas

To start, here is the dataset to be used for the Confusion Matrix in Python:

| y_actual | y_predicted |
|---|---|
| 1 | 1 |
| 0 | 1 |
| 0 | 0 |
| 1 | 1 |
| 0 | 0 |
| 1 | 1 |
| 0 | 1 |
| 0 | 0 |
| 1 | 1 |
| 0 | 0 |
| 1 | 0 |
| 0 | 0 |

You can then capture this data in Python by creating a pandas DataFrame using this code:

import pandas as pd

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],

'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]

}

df = pd.DataFrame(data)

print(df)

This is how the data looks once you run the code:

y_actual y_predicted

0 1 1

1 0 1

2 0 0

3 1 1

4 0 0

5 1 1

6 0 1

7 0 0

8 1 1

9 0 0

10 1 0

11 0 0

To create the Confusion Matrix using pandas, you’ll need to apply pd.crosstab() as follows:

confusion_matrix = pd.crosstab(df['y_actual'], df['y_predicted'], rownames=['Actual'], colnames=['Predicted'])

print(confusion_matrix)

And here is the full Python code to create the Confusion Matrix:

import pandas as pd

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],

'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]

}

df = pd.DataFrame(data)

confusion_matrix = pd.crosstab(df['y_actual'], df['y_predicted'], rownames=['Actual'], colnames=['Predicted'])

print(confusion_matrix)

Run the code and you’ll get the following matrix:

Predicted 0 1

Actual

0 5 2

1 1 4
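As a cross-check, here is a sketch comparing the pd.crosstab() result with scikit-learn's confusion_matrix() on the same data. The crosstab is stored as ct so that it does not shadow the sklearn function, which the variable name confusion_matrix used above would do if both appeared in one script:

```python
import pandas as pd
from sklearn.metrics import confusion_matrix

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],
        'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]}
df = pd.DataFrame(data)

ct = pd.crosstab(df['y_actual'], df['y_predicted'], rownames=['Actual'], colnames=['Predicted'])
cm = confusion_matrix(df['y_actual'], df['y_predicted'])

# Both put actual values on rows and predicted values on columns, in sorted order
print((ct.to_numpy() == cm).all())
```

One difference worth knowing: pd.crosstab() only creates columns for values that actually appear in y_predicted, so a class that is never predicted disappears from the table, while confusion_matrix() always returns a square matrix over the labels found in y_true and y_pred (or those passed via labels=).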

Displaying the Confusion Matrix using seaborn

The matrix you just created in the previous section was rather basic.

You can use the seaborn package in Python to get a more vivid display of the matrix. To accomplish this task, you’ll need to add the following two components into the code:

- import seaborn as sn

- sn.heatmap(confusion_matrix, annot=True)

You’ll also need to use the matplotlib package to plot the results by adding:

- import matplotlib.pyplot as plt

- plt.show()

Putting everything together:

import pandas as pd

import seaborn as sn

import matplotlib.pyplot as plt

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],

'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]

}

df = pd.DataFrame(data)

confusion_matrix = pd.crosstab(df['y_actual'], df['y_predicted'], rownames=['Actual'], colnames=['Predicted'])

sn.heatmap(confusion_matrix, annot=True)

plt.show()

Optionally, you can also add the totals at the margins of the confusion matrix by passing margins=True to pd.crosstab().

So your Python code would look like this:

import pandas as pd

import seaborn as sn

import matplotlib.pyplot as plt

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],

'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]

}

df = pd.DataFrame(data)

confusion_matrix = pd.crosstab(df['y_actual'], df['y_predicted'], rownames=['Actual'], colnames=['Predicted'], margins=True)

sn.heatmap(confusion_matrix, annot=True)

plt.show()
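With margins=True, pandas appends an 'All' row and column holding the row totals, column totals, and grand total. A sketch that prints the table so the totals are visible:

```python
import pandas as pd

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],
        'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]}
df = pd.DataFrame(data)

ct = pd.crosstab(df['y_actual'], df['y_predicted'],
                 rownames=['Actual'], colnames=['Predicted'], margins=True)
print(ct)

# Drop the 'All' row and column to get back the plain 2x2 counts
print(ct.iloc[:-1, :-1])
```

Because the totals are larger than the individual cells, they can dominate the heatmap's color scale; ct.iloc[:-1, :-1] recovers the plain matrix if that becomes a problem.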

Getting additional stats using pandas_ml

You can print additional stats (such as the Accuracy) using the pandas_ml package in Python. You can install the pandas_ml package using pip:

pip install pandas_ml

You’ll then need to add the following syntax to the code:

confusion_matrix = ConfusionMatrix(df['y_actual'], df['y_predicted'])

confusion_matrix.print_stats()

Here is the complete code that you can use to get the additional stats:

import pandas as pd

from pandas_ml import ConfusionMatrix

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],

'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]

}

df = pd.DataFrame(data)

confusion_matrix = ConfusionMatrix(df['y_actual'], df['y_predicted'])

confusion_matrix.print_stats()

Run the code, and you’ll see the measurements below (note that if you’re getting an error when running the code, you may consider changing the version of pandas. For example, you may change the version of pandas to 0.23.4 using this command: pip install pandas==0.23.4):

population: 12

P: 5

N: 7

PositiveTest: 6

NegativeTest: 6

TP: 4

TN: 5

FP: 2

FN: 1

ACC: 0.75

For our example:

- TP = True Positives = 4

- TN = True Negatives = 5

- FP = False Positives = 2

- FN = False Negatives = 1

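You can also read the TP, TN, FP and FN directly from the Confusion Matrix created earlier: TN is the Actual 0 / Predicted 0 cell (5), FP is Actual 0 / Predicted 1 (2), FN is Actual 1 / Predicted 0 (1), and TP is Actual 1 / Predicted 1 (4).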

For a population of 12, the Accuracy is:

Accuracy = (TP+TN)/population = (4+5)/12 = 0.75
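If pandas_ml does not install or run with your pandas version (see the note above), the same four counts and the accuracy can be computed with scikit-learn. A sketch using the same data:

```python
import pandas as pd
from sklearn.metrics import accuracy_score, confusion_matrix

data = {'y_actual': [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0],
        'y_predicted': [1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0]}
df = pd.DataFrame(data)

# For binary 0/1 labels, ravel() returns the cells in the order TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(df['y_actual'], df['y_predicted']).ravel()

# accuracy_score computes (TP + TN) / population
acc = accuracy_score(df['y_actual'], df['y_predicted'])
print(f"TP: {tp}  TN: {tn}  FP: {fp}  FN: {fn}  ACC: {acc:.2f}")
# TP: 4  TN: 5  FP: 2  FN: 1  ACC: 0.75
```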

Working with non-numeric data

So far you have seen how to create a Confusion Matrix using numeric data. But what if your data is non-numeric?

For example, what if your data contained non-numeric values, such as ‘Yes’ and ‘No’ (rather than ‘1’ and ‘0’)?

In this case:

- Yes = 1

- No = 0

So the dataset would look like this:

| y_actual | y_predicted |
|---|---|
| Yes | Yes |
| No | Yes |
| No | No |
| Yes | Yes |
| No | No |
| Yes | Yes |
| No | Yes |
| No | No |
| Yes | Yes |
| No | No |
| Yes | No |
| No | No |

You can then apply a simple mapping exercise to map ‘Yes’ to 1, and ‘No’ to 0.

Specifically, you’ll need to add the following portion to the code:

df['y_actual'] = df['y_actual'].map({'Yes': 1, 'No': 0})

df['y_predicted'] = df['y_predicted'].map({'Yes': 1, 'No': 0})

And this is how the complete Python code looks:

import pandas as pd

from pandas_ml import ConfusionMatrix

data = {'y_actual': ['Yes', 'No', 'No', 'Yes', 'No', 'Yes', 'No', 'No', 'Yes', 'No', 'Yes', 'No'],

'y_predicted': ['Yes', 'Yes', 'No', 'Yes', 'No', 'Yes', 'Yes', 'No', 'Yes', 'No', 'No', 'No']

}

df = pd.DataFrame(data)

df['y_actual'] = df['y_actual'].map({'Yes': 1, 'No': 0})

df['y_predicted'] = df['y_predicted'].map({'Yes': 1, 'No': 0})

confusion_matrix = ConfusionMatrix(df['y_actual'], df['y_predicted'])

confusion_matrix.print_stats()

You would then get the same stats:

population: 12

P: 5

N: 7

PositiveTest: 6

NegativeTest: 6

TP: 4

TN: 5

FP: 2

FN: 1

ACC: 0.75
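Mapping to 0/1 is one option. Another, sketched below, is to pass the string labels straight to scikit-learn's confusion_matrix() and fix the row and column order with its labels parameter, putting the negative class ('No') first:

```python
from sklearn.metrics import confusion_matrix

y_actual = ['Yes', 'No', 'No', 'Yes', 'No', 'Yes', 'No', 'No', 'Yes', 'No', 'Yes', 'No']
y_predicted = ['Yes', 'Yes', 'No', 'Yes', 'No', 'Yes', 'Yes', 'No', 'Yes', 'No', 'No', 'No']

# labels= fixes the order: 'No' (negative) is row/column 0, 'Yes' (positive) is row/column 1
cm = confusion_matrix(y_actual, y_predicted, labels=['No', 'Yes'])
tn, fp, fn, tp = cm.ravel()
print(cm)
```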

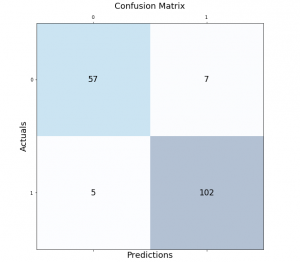

In this post, you will learn how to draw / show a confusion matrix using the Matplotlib Python package. It is important to learn this technique, as it comes in very handy when assessing the performance of classification models trained using different classification algorithms.

Confusion Matrix using Matplotlib

In order to demonstrate the confusion matrix using Matplotlib, let’s fit a pipeline estimator to the Sklearn breast cancer dataset using StandardScaler (for standardising the dataset) and Random Forest Classifier as the machine learning algorithm.

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import RandomForestClassifier

from sklearn.pipeline import make_pipeline

#

# Load the breast cancer data set

#

bc = datasets.load_breast_cancer()

X = bc.data

y = bc.target

#

# Create training and test split

#

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=1, stratify=y)

#

# Create the pipeline

#

pipeline = make_pipeline(StandardScaler(), RandomForestClassifier(n_estimators=10, max_features=5, max_depth=2, random_state=1))

#

# Fit the Pipeline estimator

#

pipeline.fit(X_train, y_train)

Once an estimator is fit to the training data set, the next step is to print the confusion matrix. To do that, follow these steps:

- Get the predictions by calling the predict() method on the fitted estimator (the pipeline).

- Create the confusion matrix using the actuals and predictions for the test dataset. The confusion_matrix function from sklearn.metrics creates the confusion matrix array.

- Use the matshow() method to display the array as a matrix of colored boxes. This example uses the Blues colormap.

- In addition to matshow(), loop through the array and use ax.text() to print each count in its box.

from sklearn.metrics import confusion_matrix

import matplotlib.pyplot as plt

#

# Get the predictions

#

y_pred = pipeline.predict(X_test)

#

# Calculate the confusion matrix

#

conf_matrix = confusion_matrix(y_true=y_test, y_pred=y_pred)

#

# Print the confusion matrix using Matplotlib

#

fig, ax = plt.subplots(figsize=(7.5, 7.5))

ax.matshow(conf_matrix, cmap=plt.cm.Blues, alpha=0.3)

for i in range(conf_matrix.shape[0]):
    for j in range(conf_matrix.shape[1]):
        ax.text(x=j, y=i, s=conf_matrix[i, j], va='center', ha='center', size='xx-large')

plt.xlabel('Predictions', fontsize=18)

plt.ylabel('Actuals', fontsize=18)

plt.title('Confusion Matrix', fontsize=18)

plt.show()

This is what the confusion matrix looks like:

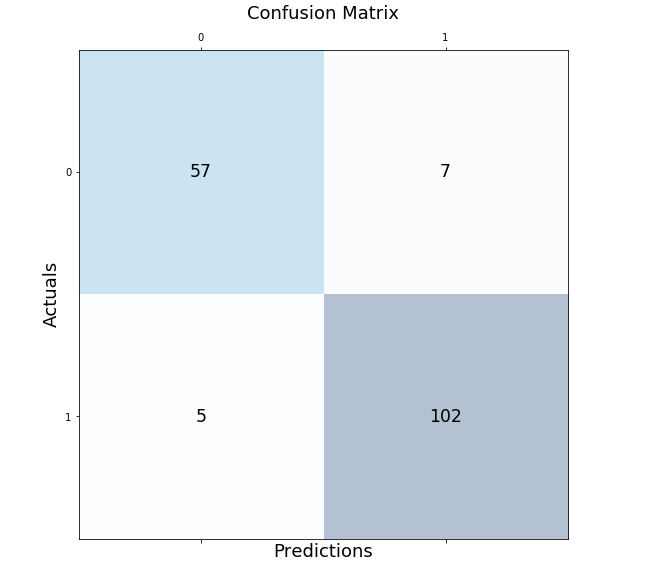
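scikit-learn also ships a display class that does the matshow-and-loop work in one call. A sketch, assuming scikit-learn 0.22 or later; the counts in conf_matrix are hypothetical stand-ins for the array computed above, and the display labels follow the target_names order of load_breast_cancer() (0 = malignant, 1 = benign):

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch also runs without a display

import numpy as np
from sklearn.metrics import ConfusionMatrixDisplay

# Hypothetical counts standing in for conf_matrix from the pipeline above
conf_matrix = np.array([[50, 3],
                        [4, 100]])

disp = ConfusionMatrixDisplay(confusion_matrix=conf_matrix,
                              display_labels=['malignant', 'benign'])
disp.plot(cmap='Blues')  # draws the cells and writes each count into its cell

# Same axis labels and title as the Matplotlib version
disp.ax_.set_xlabel('Predictions', fontsize=18)
disp.ax_.set_ylabel('Actuals', fontsize=18)
disp.ax_.set_title('Confusion Matrix', fontsize=18)
```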

Confusion Matrix using Mlxtend Package

Here is another package, mlxtend.plotting (by Dr. Sebastian Raschka), which can be used to draw or show a confusion matrix. It is much simpler and easier to use than the approach in the earlier section. All you need to do is import the plot_confusion_matrix function and pass the confusion matrix array to its conf_mat parameter. This example uses the Greens colormap to show the confusion matrix.

from mlxtend.plotting import plot_confusion_matrix

fig, ax = plot_confusion_matrix(conf_mat=conf_matrix, figsize=(6, 6), cmap=plt.cm.Greens)

plt.xlabel('Predictions', fontsize=18)

plt.ylabel('Actuals', fontsize=18)

plt.title('Confusion Matrix', fontsize=18)

plt.show()

Here is what the confusion matrix looks like:


I have been recently working in the area of Data analytics including Data Science and Machine Learning / Deep Learning. I am also passionate about different technologies including programming languages such as Java/JEE, Javascript, Python, R, Julia, etc, and technologies such as Blockchain, mobile computing, cloud-native technologies, application security, cloud computing platforms, big data, etc. For latest updates and blogs, follow us on Twitter. I would love to connect with you on Linkedin.

Check out my latest book titled as First Principles Thinking: Building winning products using first principles thinking

Ajitesh Kumar


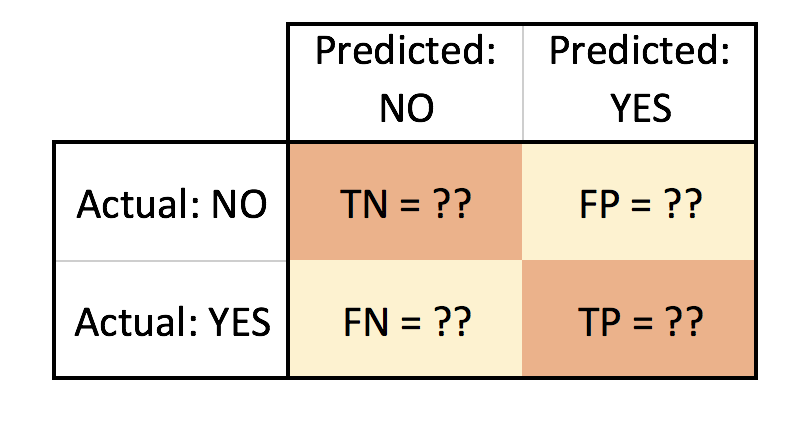

In this post I will demonstrate how to plot the Confusion Matrix. I will be using the confusion matrix from the Scikit-Learn library (sklearn.metrics) and Matplotlib for displaying the results in a more intuitive visual format.

The documentation for Confusion Matrix is pretty good, but I struggled to find a quick way to add labels and visualize the output into a 2×2 table.

For a good introductory read on confusion matrix check out this great post:

http://www.dataschool.io/simple-guide-to-confusion-matrix-terminology

This is a mockup of the look I am trying to achieve:

- TN = True Negative

- FN = False Negative

- FP = False Positive

- TP = True Positive

Let’s go through a quick Logistic Regression example using Scikit-Learn. For data I will use the popular Iris dataset (to read more about it reference https://en.wikipedia.org/wiki/Iris_flower_data_set).

We will use the confusion matrix to evaluate the accuracy of the classification and plot it using matplotlib:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn import datasets

data = datasets.load_iris()

df = pd.DataFrame(data.data, columns=data.feature_names)

df['Target'] = pd.DataFrame(data.target)

df.head()

Output (first 5 rows):

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | Target |
|---|---|---|---|---|
| 5.1 | 3.5 | 1.4 | 0.2 | 0 |
| 4.9 | 3.0 | 1.4 | 0.2 | 0 |
| 4.7 | 3.2 | 1.3 | 0.2 | 0 |
| 4.6 | 3.1 | 1.5 | 0.2 | 0 |
| 5.0 | 3.6 | 1.4 | 0.2 | 0 |

We can quickly examine our data using the pandas corr() function to pick suitable features for our logistic regression. We will use the default Pearson method.

corr = df.corr()

print(corr.Target)

Output

sepal length (cm)    0.782561
sepal width (cm)    -0.419446
petal length (cm)    0.949043
petal width (cm)     0.956464
Target               1.000000
Name: Target, dtype: float64

So, let’s pick the two with the highest potential, Petal Width (cm) and Petal Length (cm), as our independent variables (X). For our target/dependent variable (Y) we can pick the Versicolor class. The Target class actually has three choices; to simplify our task and narrow it down to a binary classifier, I will pick Versicolor, so the classes become 0 or 1: either it is Versicolor (1) or it is Not Versicolor (0).

data.target_names

Output

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

Let’s now create our X and Y:

x = df.iloc[:, [2, 3]].values  # petal length (cm) and petal width (cm)

y = (data.target == 1).astype(int)  # we are picking Versicolor to be 1 and all other classes will be 0

We will split our data into training and test sets, then start building our Logistic Regression model. We will use an 80/20 split.

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size = 0.20, random_state = 0)

Before we create our classifier, we need to standardize the data (feature scaling) using the StandardScaler class from the Scikit-Learn preprocessing package.

from sklearn.preprocessing import StandardScaler

sc_x = StandardScaler()

x_train = sc_x.fit_transform(x_train)

x_test = sc_x.transform(x_test)

Now we are ready to build our Logistic Classifier:

from sklearn.linear_model import LogisticRegression

logit = LogisticRegression(random_state= 0)

logit.fit(x_train, y_train)

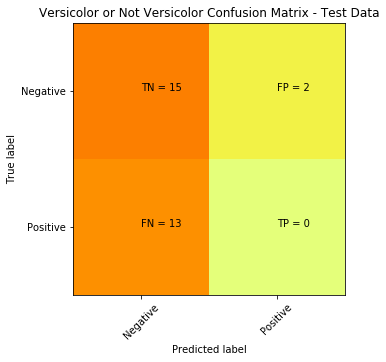

y_predicted = logit.predict(x_test)

Now, let’s evaluate our classifier with the confusion matrix:

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_predicted)

print(cm)

Output

[[15  2]
 [13  0]]

Visually, the above doesn’t easily convey how our classifier is performing, but we mainly focus on the top-right and bottom-left cells (these are the errors, or misclassifications).
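Counting the misclassifications does not have to be done by eye: they are every cell off the diagonal, that is, the matrix total minus its trace. A short sketch using the matrix printed above:

```python
import numpy as np

# Confusion matrix printed above: rows = actual, columns = predicted
cm = np.array([[15, 2],
               [13, 0]])

correct = np.trace(cm)               # diagonal cells: TN + TP
misclassified = cm.sum() - correct   # off-diagonal cells: FP + FN
print(f"{misclassified} of {cm.sum()} test points misclassified")
```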

The confusion matrix tells us we have a total of 15 (13 + 2) misclassified data points out of the 30 test points (in terms of Versicolor or Not Versicolor). A better way to visualize this can be accomplished with the code below:

plt.clf()

plt.imshow(cm, interpolation='nearest', cmap=plt.cm.Wistia)

classNames = ['Negative','Positive']

plt.title('Versicolor or Not Versicolor Confusion Matrix - Test Data')

plt.ylabel('True label')

plt.xlabel('Predicted label')

tick_marks = np.arange(len(classNames))

plt.xticks(tick_marks, classNames, rotation=45)

plt.yticks(tick_marks, classNames)

s = [['TN','FP'], ['FN', 'TP']]

for i in range(2):
    for j in range(2):
        plt.text(j, i, str(s[i][j]) + " = " + str(cm[i][j]))

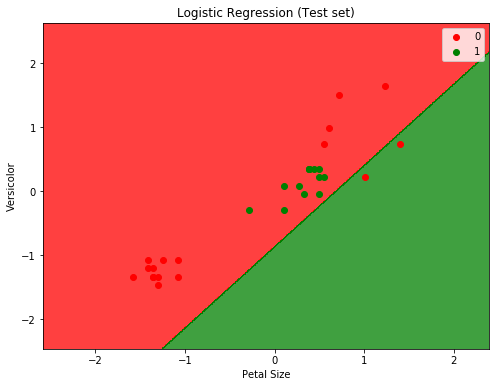

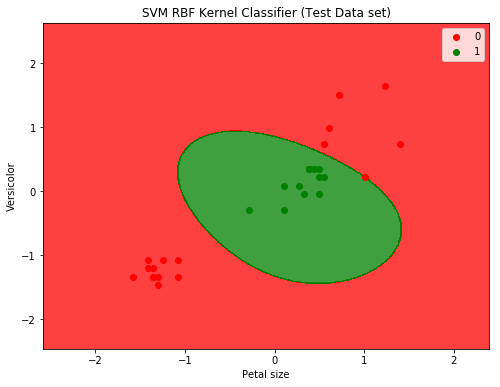

plt.show()

To plot and display the decision boundary that separates the two classes (Versicolor or Not Versicolor):

from matplotlib.colors import ListedColormap

plt.clf()

X_set, y_set = x_test, y_test

X1, X2 = np.meshgrid(np.arange(start = X_set[:, 0].min() - 1, stop = X_set[:, 0].max() + 1, step = 0.01),

np.arange(start = X_set[:, 1].min() - 1, stop = X_set[:, 1].max() + 1, step = 0.01))

plt.contourf(X1, X2, logit.predict(np.array([X1.ravel(), X2.ravel()]).T).reshape(X1.shape),

alpha = 0.75, cmap = ListedColormap(('red', 'green')))

plt.xlim(X1.min(), X1.max())

plt.ylim(X2.min(), X2.max())

for i, j in enumerate(np.unique(y_set)):
    plt.scatter(X_set[y_set == j, 0], X_set[y_set == j, 1],
                c = ListedColormap(('red', 'green'))(i), label = j)

plt.title('Logistic Regression (Test set)')

plt.xlabel('Petal Size')

plt.ylabel('Versicolor')

plt.legend()

plt.show()

Well, from the two plots we can easily see that the classifier is not doing a good job. Before digging into why (which will be another post on how to determine whether data is linearly separable), we can assume it’s because the data is not linearly separable (in the Iris dataset, in fact, only the setosa class is linearly separable from the others).

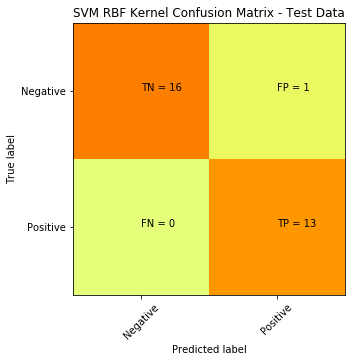

We can try a non-linear classifier instead; in this case, an SVM with a Gaussian RBF kernel:

from sklearn.svm import SVC

svm = SVC(kernel='rbf', random_state=0)

svm.fit(x_train, y_train)

predicted = svm.predict(x_test)

cm = confusion_matrix(y_test, predicted)

plt.clf()

plt.imshow(cm, interpolation='nearest', cmap=plt.cm.Wistia)

classNames = ['Negative','Positive']

plt.title('SVM RBF Kernel Confusion Matrix - Test Data')

plt.ylabel('True label')

plt.xlabel('Predicted label')

tick_marks = np.arange(len(classNames))

plt.xticks(tick_marks, classNames, rotation=45)

plt.yticks(tick_marks, classNames)

s = [['TN','FP'], ['FN', 'TP']]

for i in range(2):
    for j in range(2):
        plt.text(j, i, str(s[i][j]) + " = " + str(cm[i][j]))

plt.show()

Here is the plot to show the decision boundary

SVM with RBF Kernel produced a significant improvement: down from 15 misclassifications to only 1.

Hope this helps.

note: code was written using Jupyter Notebook

Anyone who works with data knows well that the secret to success lies not in the neural network but in processing the data properly. But before you can process the data, you first need to analyze correlations, select the data you need, drop what you don't, and so on. Visualization with the matplotlib library is often used for these purposes.


Setup

Run the following code for setup. Individual charts, however, redefine their own settings.

# !pip install brewer2mpl

import numpy as np

import pandas as pd

import matplotlib as mpl

import matplotlib.pyplot as plt

import seaborn as sns

import warnings; warnings.filterwarnings(action='once')

large = 22; med = 16; small = 12

params = {'legend.fontsize': med,

'figure.figsize': (16, 10),

'axes.labelsize': med,

'axes.titlesize': med,

'xtick.labelsize': med,

'ytick.labelsize': med,

'figure.titlesize': large}

plt.rcParams.update(params)

plt.style.use('seaborn-whitegrid')  # on Matplotlib 3.6+ this style is named 'seaborn-v0_8-whitegrid'

sns.set_style("white")

%matplotlib inline

# Version

print(mpl.__version__) #> 3.0.0

print(sns.__version__) #> 0.9.0

Correlation

Correlation plots are used to visualize the relationship between 2 or more variables, that is, how one variable changes with respect to another.

1. Scatter plot

A scatter plot is a classic, fundamental chart type used to study the relationship between two variables. If your data contains several groups, you can show each group in a different color. In matplotlib, you can do this easily with plt.scatter().


# Import dataset

midwest = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/midwest_filter.csv")

# Prepare Data

# Create as many colors as there are unique midwest['category']

categories = np.unique(midwest['category'])

colors = [plt.cm.tab10(i/float(len(categories)-1)) for i in range(len(categories))]

# Draw Plot for Each Category

plt.figure(figsize=(16, 10), dpi= 80, facecolor='w', edgecolor='k')

for i, category in enumerate(categories):
    plt.scatter('area', 'poptotal',
                data=midwest.loc[midwest.category==category, :],
                s=20, c=colors[i], label=str(category))

# Decorations

plt.gca().set(xlim=(0.0, 0.1), ylim=(0, 90000),

xlabel='Area', ylabel='Population')

plt.xticks(fontsize=12); plt.yticks(fontsize=12)

plt.title("Scatterplot of Midwest Area vs Population", fontsize=22)

plt.legend(fontsize=12)

plt.show()

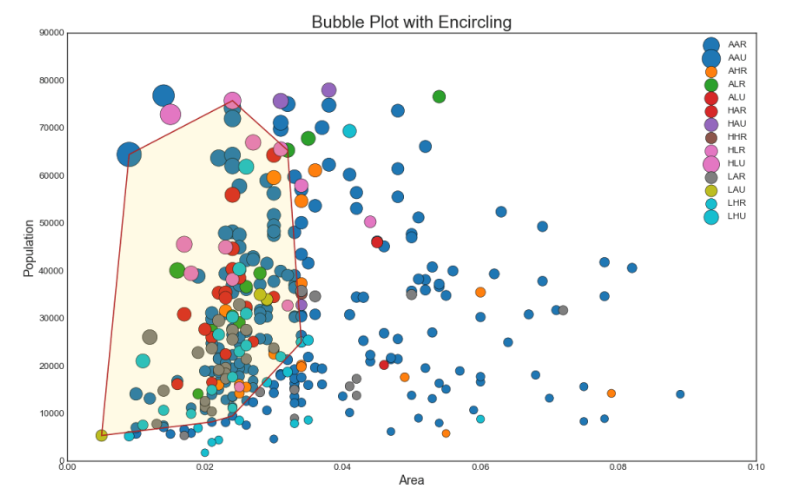

2. Bubble plot with encircling

Sometimes you want to show a group of points inside a boundary to emphasize their importance. In this example, we take the records from the dataframe that should be highlighted and pass them to the encircle() function defined in the code below.


from matplotlib import patches

from scipy.spatial import ConvexHull

import warnings; warnings.simplefilter('ignore')

sns.set_style("white")

# Step 1: Prepare Data

midwest = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/midwest_filter.csv")

# As many colors as there are unique midwest['category']

categories = np.unique(midwest['category'])

colors = [plt.cm.tab10(i/float(len(categories)-1)) for i in range(len(categories))]

# Step 2: Draw Scatterplot with unique color for each category

fig = plt.figure(figsize=(16, 10), dpi= 80, facecolor='w', edgecolor='k')

for i, category in enumerate(categories):
    plt.scatter('area', 'poptotal', data=midwest.loc[midwest.category==category, :], s='dot_size', c=colors[i], label=str(category), edgecolors='black', linewidths=.5)

# Step 3: Encircling

# https://stackoverflow.com/questions/44575681/how-do-i-encircle-different-data-sets-in-scatter-plot

def encircle(x,y, ax=None, **kw):
    if not ax: ax=plt.gca()
    p = np.c_[x,y]
    hull = ConvexHull(p)
    poly = plt.Polygon(p[hull.vertices,:], **kw)
    ax.add_patch(poly)

# Select data to be encircled

midwest_encircle_data = midwest.loc[midwest.state=='IN', :]

# Draw polygon surrounding vertices

encircle(midwest_encircle_data.area, midwest_encircle_data.poptotal, ec="k", fc="gold", alpha=0.1)

encircle(midwest_encircle_data.area, midwest_encircle_data.poptotal, ec="firebrick", fc="none", linewidth=1.5)

# Step 4: Decorations

plt.gca().set(xlim=(0.0, 0.1), ylim=(0, 90000),

xlabel='Area', ylabel='Population')

plt.xticks(fontsize=12); plt.yticks(fontsize=12)

plt.title("Bubble Plot with Encircling", fontsize=22)

plt.legend(fontsize=12)

plt.show()

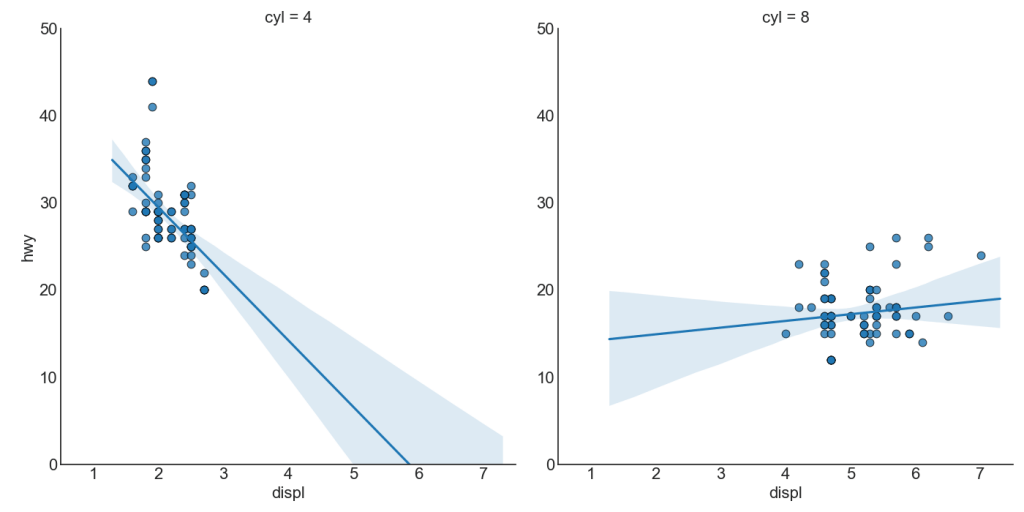
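encircle() relies on scipy.spatial.ConvexHull: hull.vertices holds the indices of the points on the outer boundary, so points inside the group never become polygon corners. A tiny sketch with made-up points:

```python
import numpy as np
from scipy.spatial import ConvexHull

# Four corners of a unit square plus one point in the middle
x = np.array([0.0, 1.0, 1.0, 0.0, 0.5])
y = np.array([0.0, 0.0, 1.0, 1.0, 0.5])

p = np.c_[x, y]        # same column stacking as in encircle()
hull = ConvexHull(p)

# Indices of the boundary points only; the middle point (index 4) is excluded
print(sorted(hull.vertices.tolist()))

# These are the polygon corners encircle() would pass to plt.Polygon
corners = p[hull.vertices, :]
```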

3. Linear regression line of best fit

If you want to understand how two variables change with respect to each other, a line of best fit is the way to go. The plot below shows how the line of best fit differs among different groups in the data. To disable the grouping and draw just one line of best fit for the whole dataset, remove the hue='cyl' parameter from sns.lmplot() below.


# Import Data

df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")

df_select = df.loc[df.cyl.isin([4,8]), :]

# Plot

sns.set_style("white")

gridobj = sns.lmplot(x="displ", y="hwy", hue="cyl", data=df_select,

height=7, aspect=1.6, robust=True, palette='tab10',

scatter_kws=dict(s=60, linewidths=.7, edgecolors='black'))

# Decorations

gridobj.set(xlim=(0.5, 7.5), ylim=(0, 50))

plt.title("Scatterplot with line of best fit grouped by number of cylinders", fontsize=20)

plt.show()
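To see what the per-group lines represent, here is a sketch that fits one straight line per cylinder group with np.polyfit(). It uses ordinary least squares on a small hypothetical dataset; sns.lmplot() with robust=True fits a robust regression instead, so its lines can differ on real data with outliers:

```python
import numpy as np
import pandas as pd

# Hypothetical engine displacement vs. highway mileage for two cylinder groups
df = pd.DataFrame({
    'displ': [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    'hwy':   [40.0, 37.0, 34.0, 26.0, 24.0, 22.0],
    'cyl':   [4, 4, 4, 8, 8, 8],
})

# One least-squares line per group, mirroring what hue='cyl' draws
fits = {}
for cyl, group in df.groupby('cyl'):
    slope, intercept = np.polyfit(group['displ'], group['hwy'], deg=1)
    fits[int(cyl)] = (round(float(slope), 3), round(float(intercept), 3))
print(fits)
```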

Each regression line in its own column

Alternatively, you can show the line of best fit for each group in its own column. You do this by setting the col=groupingcolumn parameter inside sns.lmplot().


# Import Data

df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")

df_select = df.loc[df.cyl.isin([4,8]), :]

# Each line in its own column

sns.set_style("white")

gridobj = sns.lmplot(x="displ", y="hwy",

data=df_select,

height=7,

robust=True,

palette='Set1',

col="cyl",

scatter_kws=dict(s=60, linewidths=.7, edgecolors='black'))

# Decorations

gridobj.set(xlim=(0.5, 7.5), ylim=(0, 50))

plt.show()

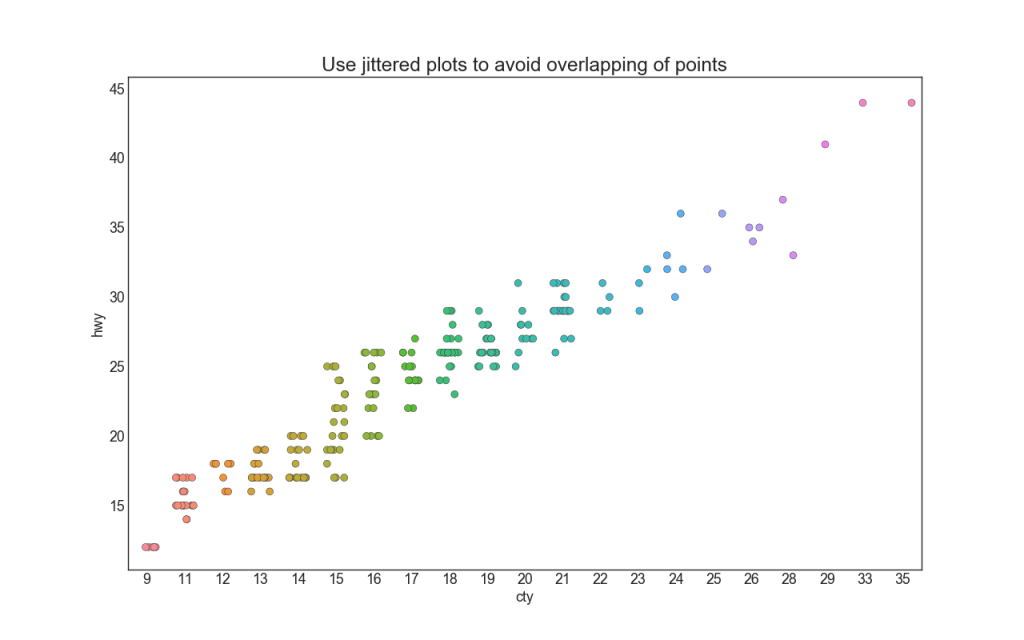

4. Stripplot

Often, several data points have exactly the same X and Y values. As a result, multiple points are drawn on top of each other and hidden. To avoid this, jitter the points slightly so you can see them. This is easy to do with stripplot().


# Import Data

df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")

# Draw Stripplot

fig, ax = plt.subplots(figsize=(16,10), dpi= 80)

sns.stripplot(x=df.cty, y=df.hwy, jitter=0.25, size=8, ax=ax, linewidth=.5)

# Decorations

plt.title('Use jittered plots to avoid overlapping of points', fontsize=22)

plt.show()

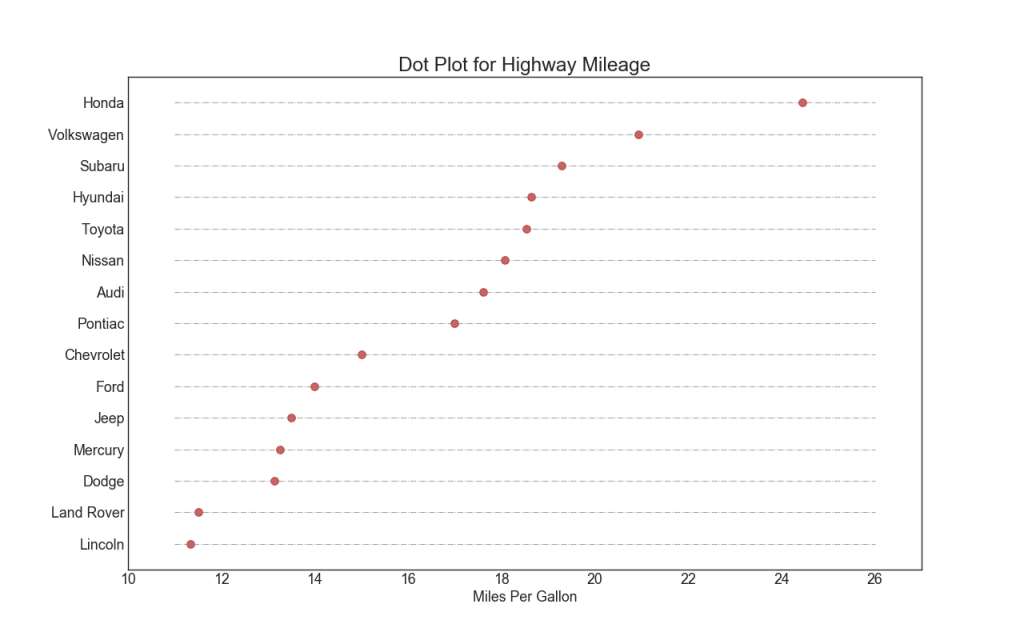
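stripplot's jitter=0.25 adds uniform random noise of up to ±0.25 along the categorical axis only. The values on the other axis therefore stay exact. The sketch below shows that idea in plain Python. `jitter` is a hypothetical helper, and the seed is there only to make the result repeatable.

```python
import random


def jitter(values, width=0.25, seed=None):
    """Shift each value by uniform noise in [-width, width] so identical values separate."""
    rng = random.Random(seed)
    return [v + rng.uniform(-width, width) for v in values]


# Three overlapping observations at x = 3 and one at x = 5
xs = [3, 3, 3, 5]
jittered = jitter(xs, width=0.25, seed=0)
print(jittered)
```

Only the positions used for drawing change; the underlying data is untouched.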

5. Counts Plot

Another way to avoid overlapping points is to make each point larger depending on how many points lie at that spot. The larger the point, the more points are concentrated there.


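```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Import Data
df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")
# Count how many rows share each (hwy, cty) pair
df_counts = df.groupby(['hwy', 'cty']).size().reset_index(name='counts')

# Draw Counts Plot: marker size is mapped from the count,
# and sizes sets the smallest and largest marker area
fig, ax = plt.subplots(figsize=(16, 10), dpi=80)
sns.scatterplot(x=df_counts.cty, y=df_counts.hwy, size=df_counts.counts, sizes=(20, 400), ax=ax)

# Decorations
plt.title('Counts Plot - Size of circle is bigger as more points overlap', fontsize=22)
plt.show()
```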
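The groupby(['hwy', 'cty']).size() step counts how many rows share each exact coordinate pair. The sketch below does the same counting in plain Python. It also applies a hypothetical linear rule that turns counts into marker areas. seaborn's own size mapping works differently: it normalizes the counts into its sizes range. `count_points` and `marker_sizes` are illustrative names.

```python
from collections import Counter


def count_points(xs, ys):
    """Return a Counter mapping each (x, y) coordinate to its number of observations."""
    return Counter(zip(xs, ys))


def marker_sizes(counts, base=20):
    """Hypothetical size rule: marker area grows linearly with the count."""
    return {point: base * n for point, n in counts.items()}


# Made-up city/highway mileage values with one repeated pair
cty = [18, 18, 21, 18]
hwy = [26, 26, 29, 27]
counts = count_points(cty, hwy)
sizes = marker_sizes(counts)
print(counts, sizes)
```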

6. Marginal Histogram

Marginal histograms have a histogram along the X and Y axis variables. They are used to visualize the relationship between X and Y along with the univariate distribution of X and of Y individually. This plot is often used in exploratory data analysis (EDA).


```python
import pandas as pd
import matplotlib.pyplot as plt

# Import Data
df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")

# Create Fig and gridspec
fig = plt.figure(figsize=(16, 10), dpi=80)
grid = plt.GridSpec(4, 4, hspace=0.5, wspace=0.2)

# Define the axes
ax_main = fig.add_subplot(grid[:-1, :-1])
ax_right = fig.add_subplot(grid[:-1, -1], xticklabels=[], yticklabels=[])
ax_bottom = fig.add_subplot(grid[-1, 0:-1], xticklabels=[], yticklabels=[])

# Scatterplot on main ax
ax_main.scatter('displ', 'hwy', s=df.cty*4, c=df.manufacturer.astype('category').cat.codes,
                alpha=.9, data=df, cmap="tab10", edgecolors='gray', linewidths=.5)

# Histogram in the bottom
ax_bottom.hist(df.displ, 40, histtype='stepfilled', orientation='vertical', color='deeppink')
ax_bottom.invert_yaxis()

# Histogram on the right
ax_right.hist(df.hwy, 40, histtype='stepfilled', orientation='horizontal', color='deeppink')

# Decorations
ax_main.set(title='Scatterplot with Histograms \n displ vs hwy', xlabel='displ', ylabel='hwy')
ax_main.title.set_fontsize(20)
for item in ([ax_main.xaxis.label, ax_main.yaxis.label] + ax_main.get_xticklabels() + ax_main.get_yticklabels()):
    item.set_fontsize(14)

xlabels = ax_main.get_xticks().tolist()
ax_main.set_xticklabels(xlabels)
plt.show()
```
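Each margin is a histogram of one variable taken on its own, ignoring the other. The sketch below shows, in plain Python, the equal-width binning that a call like hist(values, 40) performs. `histogram` is a hypothetical helper. Like numpy.histogram, it treats the last bin as closed on the right, so the maximum value is counted.

```python
def histogram(values, bins, lo=None, hi=None):
    """Count values into `bins` equal-width bins over [lo, hi]; the last bin includes hi."""
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        if v < lo or v > hi:
            continue  # values outside the range are ignored
        idx = int((v - lo) / width) if width else 0
        counts[min(idx, bins - 1)] += 1  # the maximum falls into the last bin
    return counts


# Marginal distribution of one made-up variable, in 4 bins over [1, 9]
print(histogram([1, 2, 2, 3, 5, 9], 4))
```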

7. Boxplot

The marginal boxplot serves the same purpose as the marginal histogram. However, a boxplot also lets you pinpoint the median and the 25th and 75th percentiles of X and Y.


```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Import Data
df = pd.read_csv("https://raw.githubusercontent.com/selva86/datasets/master/mpg_ggplot2.csv")

# Create Fig and gridspec
fig = plt.figure(figsize=(16, 10), dpi=80)
grid = plt.GridSpec(4, 4, hspace=0.5, wspace=0.2)

# Define the axes
ax_main = fig.add_subplot(grid[:-1, :-1])
ax_right = fig.add_subplot(grid[:-1, -1], xticklabels=[], yticklabels=[])
ax_bottom = fig.add_subplot(grid[-1, 0:-1], xticklabels=[], yticklabels=[])

# Scatterplot on main ax
ax_main.scatter('displ', 'hwy', s=df.cty*5, c=df.manufacturer.astype('category').cat.codes,
                alpha=.9, data=df, cmap="Set1", edgecolors='black', linewidths=.5)

# Add a boxplot in each margin (passing y= draws it vertically, x= horizontally)
sns.boxplot(y=df.hwy, ax=ax_right)
sns.boxplot(x=df.displ, ax=ax_bottom)

# Decorations ------------------
# Remove the axis names for the boxplots
ax_bottom.set(xlabel='')
ax_right.set(ylabel='')

# Main Title, Xlabel and YLabel
ax_main.set(title='Scatterplot with Boxplots \n displ vs hwy', xlabel='displ', ylabel='hwy')

# Set font size of different components
ax_main.title.set_fontsize(20)
for item in ([ax_main.xaxis.label, ax_main.yaxis.label] + ax_main.get_xticklabels() + ax_main.get_yticklabels()):
    item.set_fontsize(14)

plt.show()
```
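The box in each margin spans the 25th to the 75th percentile, with a line at the median. By default, seaborn extends the whiskers to the most extreme points within 1.5 × IQR of the box and draws anything beyond them as an outlier. The sketch below computes those statistics in plain Python. It uses linear-interpolation percentiles, which is NumPy's default method. `percentile` and `box_stats` are hypothetical helpers.

```python
def percentile(sorted_vals, p):
    """Linear-interpolation percentile of an already sorted list (NumPy's default rule)."""
    pos = (len(sorted_vals) - 1) * p / 100
    lower = int(pos)
    upper = min(lower + 1, len(sorted_vals) - 1)
    frac = pos - lower
    return sorted_vals[lower] + (sorted_vals[upper] - sorted_vals[lower]) * frac


def box_stats(values, whis=1.5):
    """Quartiles, whisker ends and outliers as a standard boxplot draws them."""
    vals = sorted(values)
    q1, med, q3 = (percentile(vals, p) for p in (25, 50, 75))
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - whis * iqr, q3 + whis * iqr
    # Whiskers reach the most extreme observations still inside the fences
    whisker_lo = min(v for v in vals if v >= lo_fence)
    whisker_hi = max(v for v in vals if v <= hi_fence)
    outliers = [v for v in vals if v < lo_fence or v > hi_fence]
    return {"q1": q1, "median": med, "q3": q3,
            "whiskers": (whisker_lo, whisker_hi), "outliers": outliers}


stats = box_stats([12, 15, 17, 20, 22, 25, 28, 44])
print(stats)
```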

8. Correlogram

A correlogram is used to visually inspect the correlation metric between all possible pairs of numeric variables in a given dataset (or 2D array).


```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Import Dataset
df = pd.read_csv("https://github.com/selva86/datasets/raw/master/mtcars.csv")

# Correlate only the numeric columns (the car-name columns hold text)
corr = df.corr(numeric_only=True)

# Plot
plt.figure(figsize=(12, 10), dpi=80)
sns.heatmap(corr, xticklabels=corr.columns, yticklabels=corr.columns, cmap='RdYlGn', center=0, annot=True)

# Decorations
plt.title('Correlogram of mtcars', fontsize=22)
plt.xticks(fontsize=12)
plt.yticks(fontsize=12)
plt.show()
```
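df.corr() computes the Pearson correlation coefficient by default. The sketch below shows, in plain Python, what fills each cell of the heatmap. `pearson` and `corr_matrix` are hypothetical helpers, and the toy columns are made up.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def corr_matrix(columns):
    """columns: dict name -> list of numbers. Returns a nested dict shaped like df.corr()."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


cols = {"a": [1, 2, 3, 4], "b": [2, 4, 6, 8], "c": [4, 3, 2, 1]}
m = corr_matrix(cols)
print(m)
```

The matrix is symmetric and has 1.0 on the diagonal. That is why the heatmap mirrors itself across the diagonal.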

9. Pairwise Plot

The pairwise plot is a common choice in exploratory analysis for understanding the relationship between all possible pairs of numeric variables. It is a must-have tool for bivariate analysis.


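```python
import seaborn as sns
import matplotlib.pyplot as plt

# Load Dataset
df = sns.load_dataset('iris')

# Plot (sns.pairplot creates its own figure, so no plt.figure() call is needed)
sns.pairplot(df, kind="scatter", hue="species", plot_kws=dict(s=80, edgecolor="white", linewidth=2.5))
plt.show()
```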


```python
import seaborn as sns
import matplotlib.pyplot as plt

# Load Dataset
df = sns.load_dataset('iris')

# Plot with a regression line in each off-diagonal panel
sns.pairplot(df, kind="reg", hue="species")
plt.show()
```
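For n numeric columns, pairplot draws an n × n grid. The diagonal shows each variable's own distribution. Every unordered pair appears twice off the diagonal, once above it and once below it with the axes swapped. The sketch below does that bookkeeping for the four numeric iris columns. `pair_grid_summary` is a hypothetical helper.

```python
from itertools import combinations


def pair_grid_summary(columns):
    """Panel counts for a full (non-corner) pairwise grid over the given columns."""
    n = len(columns)
    return {
        "grid_panels": n * n,
        "diagonal_panels": n,
        "unique_pairs": list(combinations(columns, 2)),
    }


iris_numeric = ["sepal_length", "sepal_width", "petal_length", "petal_width"]
summary = pair_grid_summary(iris_numeric)
print(summary["grid_panels"], len(summary["unique_pairs"]))
```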

Deviation

10. Diverging Bars

If you want to see how items vary on a single metric, and to visualize the order and size of that variation, diverging bars are a great tool. They help you quickly tell apart the performance of groups in your data, are fairly intuitive, and get the point across immediately.


```python
import pandas as pd
import matplotlib.pyplot as plt

# Prepare Data: standardize mileage into z-scores
df = pd.read_csv("https://github.com/selva86/datasets/raw/master/mtcars.csv")
x = df['mpg']
df['mpg_z'] = (x - x.mean())/x.std()
df['colors'] = ['red' if z < 0 else 'green' for z in df['mpg_z']]
df.sort_values('mpg_z', inplace=True)
df.reset_index(inplace=True)

# Draw plot
plt.figure(figsize=(14, 10), dpi=80)
plt.hlines(y=df.index, xmin=0, xmax=df.mpg_z, color=df.colors, alpha=0.4, linewidth=5)

# Decorations
plt.gca().set(ylabel='$Model$', xlabel='$Mileage$')
plt.yticks(df.index, df.cars, fontsize=12)
plt.title('Diverging Bars of Car Mileage', fontdict={'size': 20})
plt.grid(linestyle='--', alpha=0.5)
plt.show()
```

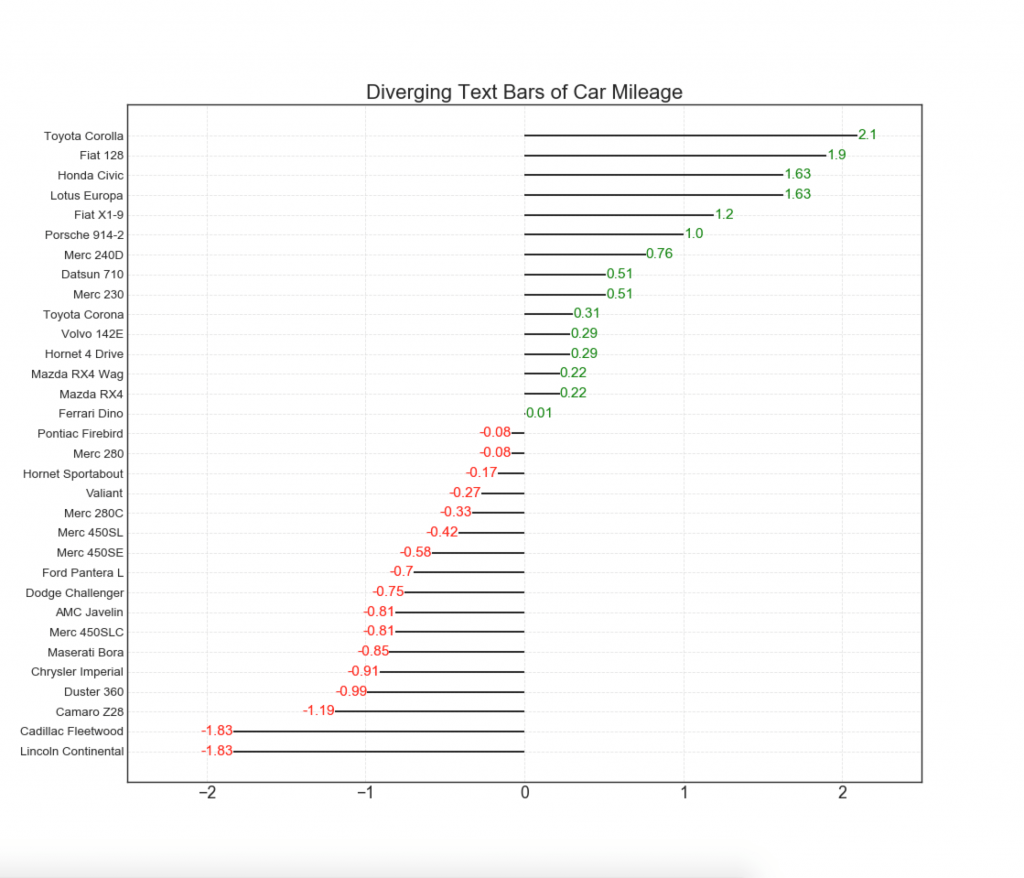
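mpg_z is a standard score: each car's mileage minus the mean, divided by the standard deviation. By default, pandas' std() uses the sample (n - 1) denominator, and so does statistics.stdev. The sketch below computes the z-scores and applies the red/green color rule in plain Python. `zscores` and `diverging_colors` are hypothetical helpers.

```python
from statistics import mean, stdev


def zscores(values):
    """Standardize values; stdev uses n - 1, matching pandas' Series.std() default."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def diverging_colors(zs):
    """Red for below-average items, green for average or above."""
    return ['red' if z < 0 else 'green' for z in zs]


zs = zscores([10, 20, 30])
colors = diverging_colors(zs)
print(zs, colors)
```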

11. Diverging Texts

Diverging texts are similar to diverging bars. They are preferable when you want to show the value of each item in the chart in a clean, presentable way.


```python
import pandas as pd
import matplotlib.pyplot as plt

# Prepare Data: standardize mileage into z-scores
df = pd.read_csv("https://github.com/selva86/datasets/raw/master/mtcars.csv")
x = df['mpg']
df['mpg_z'] = (x - x.mean())/x.std()
df['colors'] = ['red' if z < 0 else 'green' for z in df['mpg_z']]
df.sort_values('mpg_z', inplace=True)
df.reset_index(inplace=True)

# Draw plot
plt.figure(figsize=(14, 14), dpi=80)
plt.hlines(y=df.index, xmin=0, xmax=df.mpg_z)
# Write each z-score at the end of its line: red and right-aligned if negative, green otherwise
for x, y, tex in zip(df.mpg_z, df.index, df.mpg_z):
    t = plt.text(x, y, round(tex, 2), horizontalalignment='right' if x < 0 else 'left',
                 verticalalignment='center', fontdict={'color': 'red' if x < 0 else 'green', 'size': 14})

# Decorations
plt.yticks(df.index, df.cars, fontsize=12)
plt.title('Diverging Text Bars of Car Mileage', fontdict={'size': 20})
plt.grid(linestyle='--', alpha=0.5)
plt.xlim(-2.5, 2.5)
plt.show()
```

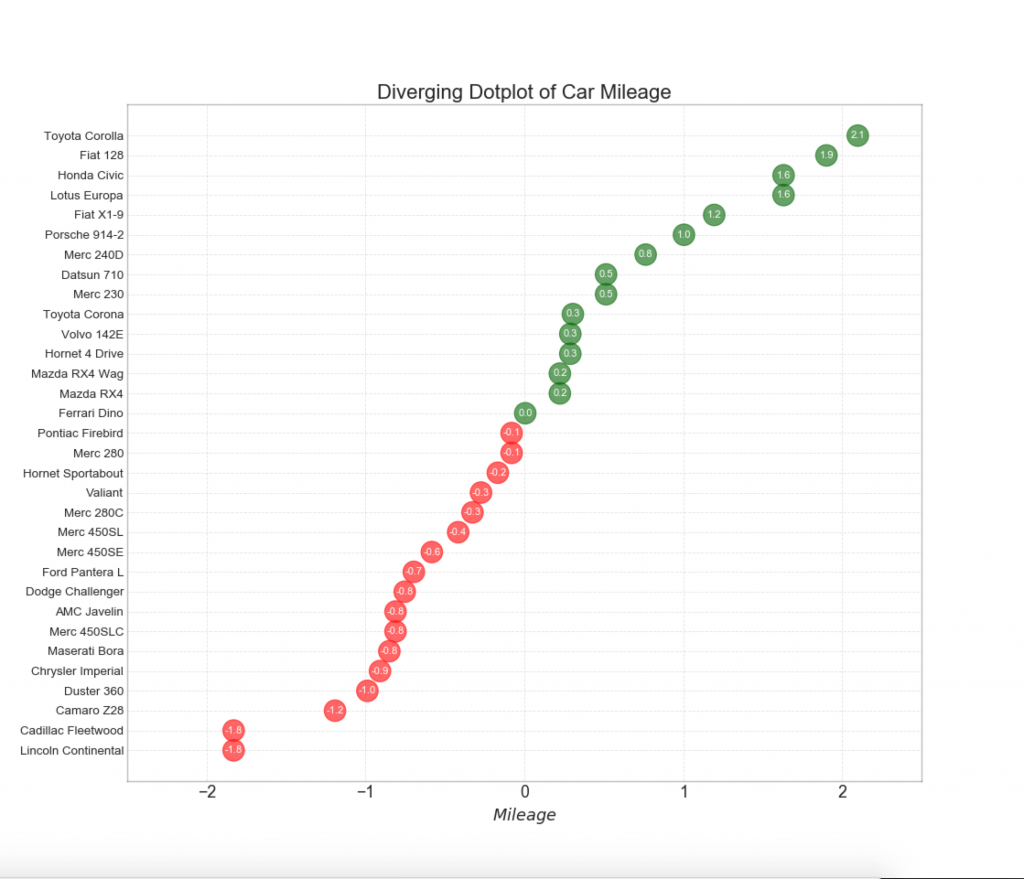

12. Diverging Dot Plot

A diverging dot plot is also similar to diverging bars. However, compared with diverging bars, the absence of bars reduces the contrast and visible disparity between the groups.


```python
import pandas as pd
import matplotlib.pyplot as plt

# Prepare Data: standardize mileage into z-scores
df = pd.read_csv("https://github.com/selva86/datasets/raw/master/mtcars.csv")
x = df['mpg']
df['mpg_z'] = (x - x.mean())/x.std()
df['colors'] = ['red' if z < 0 else 'darkgreen' for z in df['mpg_z']]
df.sort_values('mpg_z', inplace=True)
df.reset_index(inplace=True)

# Draw plot
plt.figure(figsize=(14, 16), dpi=80)
plt.scatter(df.mpg_z, df.index, s=450, alpha=.6, color=df.colors)
# Print each z-score in white inside its dot
for x, y, tex in zip(df.mpg_z, df.index, df.mpg_z):
    t = plt.text(x, y, round(tex, 1), horizontalalignment='center',
                 verticalalignment='center', fontdict={'color': 'white'})

# Decorations
# Lighten borders
plt.gca().spines["top"].set_alpha(.3)
plt.gca().spines["bottom"].set_alpha(.3)
plt.gca().spines["right"].set_alpha(.3)
plt.gca().spines["left"].set_alpha(.3)

plt.yticks(df.index, df.cars)
plt.title('Diverging Dotplot of Car Mileage', fontdict={'size': 20})
plt.xlabel('$Mileage$')
plt.grid(linestyle='--', alpha=0.5)
plt.xlim(-2.5, 2.5)
plt.show()
```

13. Diverging Lollipop Chart with Markers

A lollipop chart with markers gives you a flexible way to visualize divergence. It lets you emphasize any significant data points you want to draw attention to.


# Prepare Data

df = pd.read_csv("https://github.com/selva86/datasets/raw/master/mtcars.csv")

x = df.loc[:, ['mpg']]

df['mpg_z'] = (x - x.mean())/x.std()

df['colors'] = 'black'

# color fiat differently

df.loc[df.cars == 'Fiat X1-9', 'colors'] = 'darkorange'

df.sort_values('mpg_z', inplace=True)

df.reset_index(inplace=True)

# Draw plot

import matplotlib.patches as patches

plt.figure(figsize=(14,16), dpi= 80)

plt.hlines(y=df.index, xmin=0, xmax=df.mpg_z, color=df.colors, alpha=0.4, linewidth=1)

plt.scatter(df.mpg_z, df.index, color=df.colors, s=[600 if x == 'Fiat X1-9' else 300 for x in df.cars], alpha=0.6)

plt.yticks(df.index, df.cars)

plt.xticks(fontsize=12)

# Annotate

plt.annotate('Mercedes Models', xy=(0.0, 11.0), xytext=(1.0, 11), xycoords='data',

fontsize=15, ha='center', va='center',

bbox=dict(boxstyle='square', fc='firebrick'),

arrowprops=dict(arrowstyle='-[, widthB=2.0, lengthB=1.5', lw=2.0, color='steelblue'), color='white')

# Add Patches

p1 = patches.Rectangle((-2.0, -1), width=.3, height=3, alpha=.2, facecolor='red')

p2 = patches.Rectangle((1.5, 27), width=.8, height=5, alpha=.2, facecolor='green')